Question

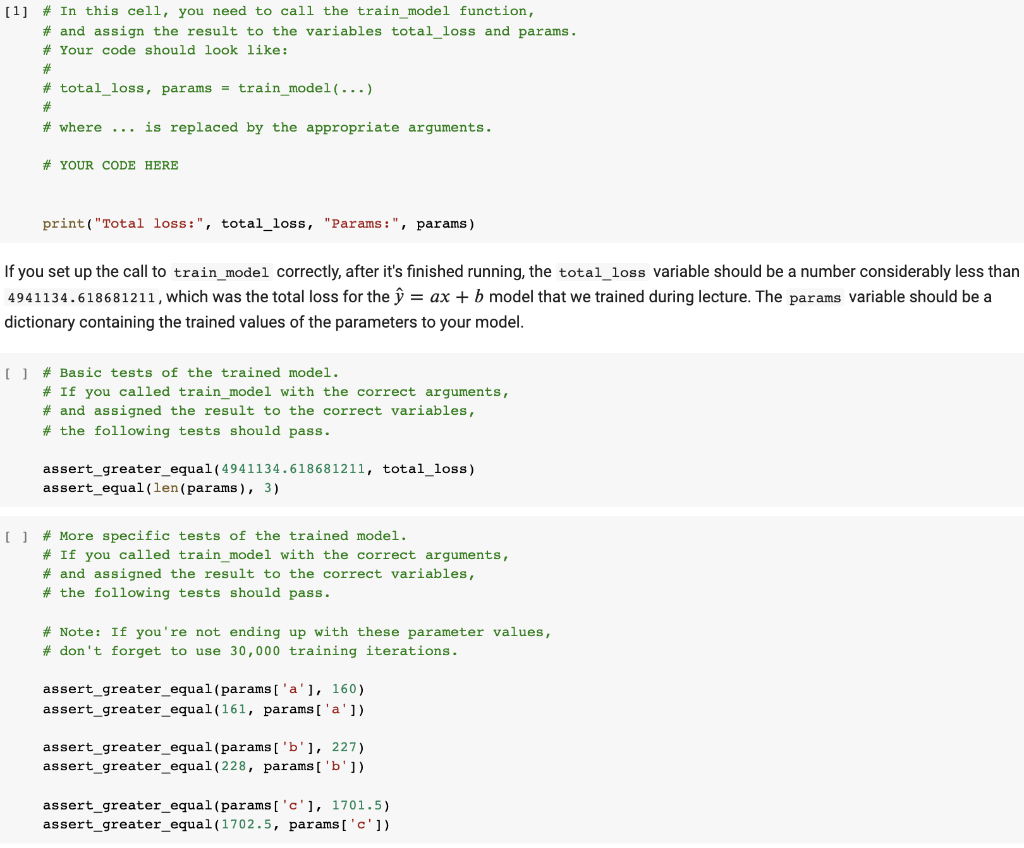

In Python, for this problem, you will call the train_model function to train your model using the rental_prices training data, and assign the result of calling train_model to the variables total_loss and params. The train_model function will perform gradient descent and learn the settings of the parameters that minimize the loss.

For reference, here was how we called the train_model function during lecture when we trained the y = ax + b model (see the "Training a model that minimizes the loss" section of the lecture notebook):

total_loss, params = train_model(loss, rental_prices, ['a', 'b'], 'x', 'y')

You need to do the analogous thing, but for the model y = ax^2 + bx + c.

Important: pass the argument num_iterations=30000 to train_model. Otherwise, training will end too soon, before the loss has been reasonably minimized.
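Why does 30,000 matter? Here is a rough sketch, assuming the lecture's loss is the unscaled squared error (y - (ax^2 + bx + c))^2 summed over the training examples (the loss expression itself is not shown on this page). For a loss that is quadratic in the parameters, each gradient descent step multiplies the remaining error along an eigenvector of the Hessian by (1 - delta * eigenvalue), so the smallest eigenvalue sets how many iterations are needed. The names `H`, `lam_min`, `lam_max`, and `remaining` are illustrative only.

```python
import numpy as np

# Rental data from this problem: (number of bedrooms, monthly rent).
rental_prices = [
    (2, 2700), (0, 2045), (2, 2845), (2, 2950), (1, 2100), (2, 3100),
    (2, 2600), (0, 2200), (2, 2650), (2, 2200), (2, 2100), (3, 3675),
    (3, 3800), (2, 4300), (2, 2500), (1, 2000), (0, 1300), (1, 2500),
    (1, 1800), (0, 1250), (3, 3850), (3, 4000),
]

xs = np.array([x for x, _ in rental_prices], dtype=float)
# One row per example holding the features that multiply a, b and c: [x^2, x, 1].
X = np.column_stack([xs**2, xs, np.ones_like(xs)])
# Hessian of the assumed loss sum((y - (a*x**2 + b*x + c))**2); it depends only on x.
H = 2 * X.T @ X
eigenvalues = np.linalg.eigvalsh(H)  # ascending order for a symmetric matrix
lam_min, lam_max = eigenvalues[0], eigenvalues[-1]

delta = 0.0001  # train_model's default step size
print("Step size is stable:", delta * lam_max < 2)
# Fraction of the initial error left along the slowest eigenvector after n steps.
remaining = {n: (1 - delta * lam_min) ** n for n in (10000, 30000)}
print(remaining)
```

Under this assumption, the slowest direction still carries a few percent of its initial error after the default 10,000 iterations, but well under a tenth of a percent after 30,000.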

Do not pass a delta argument to train_model; the train_model function should just use the already-specified default value for delta.

After you've set up the call to train_model, you can run the following cell! Warning: if you've set things up correctly, train_model will take a few minutes to run.
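For comparison, here is a minimal plain-Python sketch of the same training run. It does not use the lecture's expression library (the `loss` object with its `derivative` and `eval` methods is not reproduced here). Instead it assumes the loss is the unscaled squared error (y - (ax^2 + bx + c))^2 and hard-codes its partial derivatives. `train_quadratic` is a hypothetical helper that keeps train_model's structure: parameters start at 0, gradients are summed over all examples, and all parameters are updated together with the default delta=0.0001.

```python
# Rental data from this problem: (number of bedrooms, monthly rent).
rental_prices = [
    (2, 2700), (0, 2045), (2, 2845), (2, 2950), (1, 2100), (2, 3100),
    (2, 2600), (0, 2200), (2, 2650), (2, 2200), (2, 2100), (3, 3675),
    (3, 3800), (2, 4300), (2, 2500), (1, 2000), (0, 1300), (1, 2500),
    (1, 1800), (0, 1250), (3, 3850), (3, 4000),
]


def train_quadratic(training_examples, delta=0.0001, num_iterations=10000):
    """Batch gradient descent for y = a*x**2 + b*x + c (hypothetical helper)."""
    a = b = c = 0.0  # train_model also starts every parameter at 0
    total_loss = 0.0
    for _ in range(num_iterations):
        grad_a = grad_b = grad_c = 0.0  # reset the summed gradients
        total_loss = 0.0
        for x, y in training_examples:
            residual = y - (a * x**2 + b * x + c)
            # Partial derivatives of the assumed loss (y - (a*x**2 + b*x + c))**2.
            grad_a += -2 * residual * x**2
            grad_b += -2 * residual * x
            grad_c += -2 * residual
            total_loss += residual**2
        # Step every parameter against its summed gradient at the same time.
        a, b, c = a - delta * grad_a, b - delta * grad_b, c - delta * grad_c
    return total_loss, {'a': a, 'b': b, 'c': c}


total_loss, params = train_quadratic(rental_prices, num_iterations=30000)
print("Total loss:", total_loss, "Params:", params)
```

With the lecture code, the analogous call would presumably be train_model(loss, rental_prices, ['a', 'b', 'c'], 'x', 'y', num_iterations=30000), where loss is the quadratic model's loss expression built the same way as in lecture.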

*RENTAL PRICES DATA*

rental_prices = [
    (2, 2700), (0, 2045), (2, 2845), (2, 2950), (1, 2100), (2, 3100),
    (2, 2600), (0, 2200), (2, 2650), (2, 2200), (2, 2100), (3, 3675),
    (3, 3800), (2, 4300), (2, 2500), (1, 2000), (0, 1300), (1, 2500),
    (1, 1800), (0, 1250), (3, 3850), (3, 4000)
]

# TRAIN MODEL FUNCTION:

def train_model(loss, training_examples, params, var_x, var_y, delta=0.0001, num_iterations=10000):
    env = {param: 0 for param in params}  # Start every parameter at 0
    for iteration_idx in range(num_iterations):
        gradient = {param: 0 for param in params}  # Set all gradients to 0
        total_loss = 0.
        for x_val, y_val in training_examples:
            # Bind the current example's x and y values
            env[var_x] = x_val
            env[var_y] = y_val
            # Accumulate each parameter's partial derivative over all examples
            for param in params:
                gradient[param] += loss.derivative(param).eval(env=env)
            total_loss += loss.eval(env=env)
        # Report progress every 100 iterations
        if (iteration_idx + 1) % 100 == 0:
            print("Loss:", total_loss)
            print("Params: ", {param: env[param] for param in params})
        # Gradient descent step: move each parameter against its gradient
        for param in params:
            env[param] = env[param] - (delta * gradient[param])
    # Loss from the last iteration (computed before its update) and the learned parameters
    return total_loss, {param: env[param] for param in params}
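Each pass of the outer loop in train_model is one step of batch gradient descent. Assuming the lecture's loss is the squared error of the quadratic model (the loss expression is not shown here), the summed loss, its partial derivatives, and the update applied with step size delta are:

```latex
\begin{aligned}
L(a,b,c) &= \sum_{i} \bigl(y_i - (a x_i^2 + b x_i + c)\bigr)^2 \\
\frac{\partial L}{\partial a} &= \sum_i -2\,x_i^2\,\bigl(y_i - (a x_i^2 + b x_i + c)\bigr) \\
\frac{\partial L}{\partial b} &= \sum_i -2\,x_i\,\bigl(y_i - (a x_i^2 + b x_i + c)\bigr) \\
\frac{\partial L}{\partial c} &= \sum_i -2\,\bigl(y_i - (a x_i^2 + b x_i + c)\bigr) \\
\theta &\leftarrow \theta - \delta\,\frac{\partial L}{\partial \theta}, \qquad \theta \in \{a, b, c\}
\end{aligned}
```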

import matplotlib
import matplotlib.pyplot as plt

matplotlib.rcParams['figure.figsize'] = (8.0, 8.0)
matplotlib_params = {'legend.fontsize': 'large',
                     'axes.labelsize': 'large',
                     'axes.titlesize': 'large',
                     'xtick.labelsize': 'large',
                     'ytick.labelsize': 'large'}
matplotlib.rcParams.update(matplotlib_params)

import numpy as np

def plot_points_and_model(points, var_x, model, params, xlabel='x', ylabel='y'):
    env = params.copy()
    fig, ax = plt.subplots()
    xs, ys = zip(*points)
    ax.plot(xs, ys, 'r+')
    x_min, x_max = np.min(xs), np.max(xs)
    step = (x_max - x_min) / 100
    x_list = list(np.arange(x_min, x_max + step, step))
    y_list = []
    for x in x_list:
        env[var_x] = x
        y_list.append(model.eval(env=env))
    ax.plot(x_list, y_list)
    plt.xlabel(xlabel)
    plt.ylabel(ylabel)
    plt.show()

# In this cell, you need to call the train_model function,
# and assign the result to the variables total_loss and params.
# Your code should look like: total_loss, params = train_model(...)
# where ... is replaced by the appropriate arguments.
# YOUR CODE HERE
print("Total loss:", total_loss, "Params:", params)

If you set up the call to train_model correctly, after it's finished running, the total_loss variable should be a number considerably less than 4941134.618681211, which was the total loss for the y = ax + b model that we trained during lecture. The params variable should be a dictionary containing the trained values of the parameters to your model.

# Basic tests of the trained model.
# If you called train_model with the correct arguments,
# and assigned the result to the correct variables,
# the following tests should pass.
assert_greater_equal(4941134.618681211, total_loss)
assert_equal(len(params), 3)

# More specific tests of the trained model.
# If you called train_model with the correct arguments,
# and assigned the result to the correct variables,
# the following tests should pass.
# Note: If you're not ending up with these parameter values,
# don't forget to use 30,000 training iterations.
assert_greater_equal(params['a'], 160)
assert_greater_equal(161, params['a'])
assert_greater_equal(params['b'], 227)
assert_greater_equal(228, params['b'])
assert_greater_equal(params['c'], 1701.5)
assert_greater_equal(1702.5, params['c'])
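If the loss is squared error (an assumption, since the loss expression is not shown), gradient descent should approach the ordinary least-squares fit, which NumPy can compute in closed form with `np.polyfit`. This sketch is a quick way to sanity-check the parameter ranges in the tests above without waiting minutes for train_model; it is a cross-check, not the lecture's training method.

```python
import numpy as np

# Rental data from this problem: (number of bedrooms, monthly rent).
rental_prices = [
    (2, 2700), (0, 2045), (2, 2845), (2, 2950), (1, 2100), (2, 3100),
    (2, 2600), (0, 2200), (2, 2650), (2, 2200), (2, 2100), (3, 3675),
    (3, 3800), (2, 4300), (2, 2500), (1, 2000), (0, 1300), (1, 2500),
    (1, 1800), (0, 1250), (3, 3850), (3, 4000),
]

xs, ys = zip(*rental_prices)
# Least-squares fit of a degree-2 polynomial; coefficients come highest power first.
a, b, c = np.polyfit(xs, ys, 2)
print({'a': a, 'b': b, 'c': c})

# Summed squared error at the least-squares optimum.
predictions = np.polyval([a, b, c], xs)
sse = float(np.sum((np.array(ys) - predictions) ** 2))
print("Total loss:", sse)
```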
