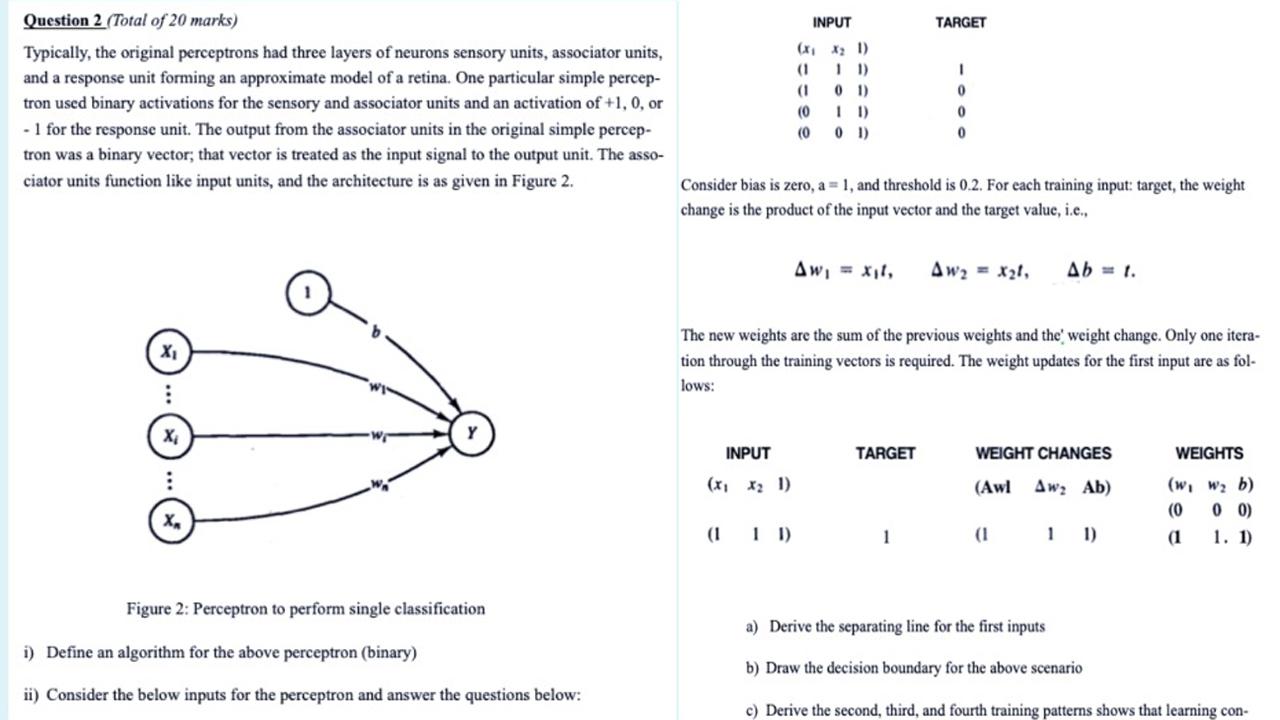

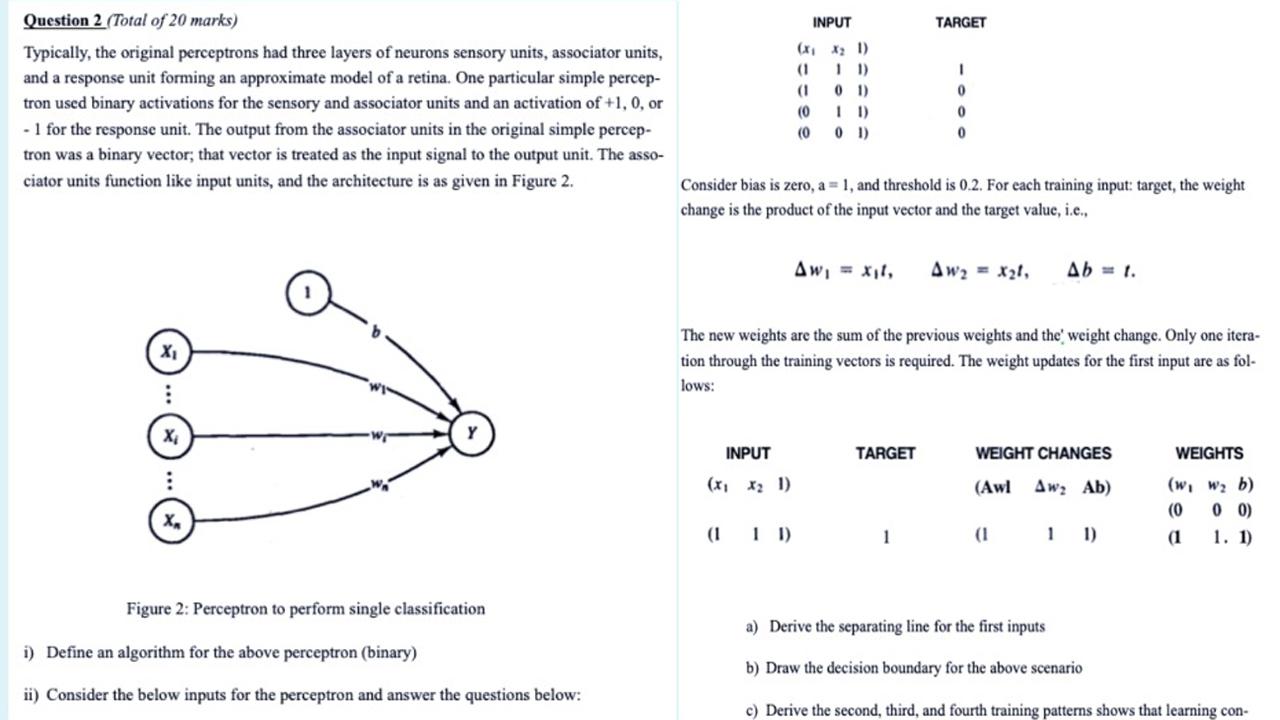

Question 2 (Total of 20 marks)

Typically, the original perceptrons had three layers of neurons (sensory units, associator units, and a response unit), forming an approximate model of a retina. One particular simple perceptron used binary activations for the sensory and associator units and an activation of +1, 0, or -1 for the response unit. The output from the associator units in the original simple perceptron was a binary vector; that vector is treated as the input signal to the output unit. The associator units function like input units, and the architecture is as given in Figure 2.

Figure 2: Perceptron to perform single classification

i) Define an algorithm for the above perceptron (binary).

ii) Consider the below inputs for the perceptron and answer the questions below:

[Table: INPUT | TARGET, four training patterns]

Assume the bias is zero, $\alpha = 1$, and the threshold is 0.2. For each training input-target pair, the weight change is the product of the input vector and the target value, i.e., $\Delta w_1 = x_1 t$, $\Delta w_2 = x_2 t$, $\Delta b = t$. The new weights are the sum of the previous weights and the weight change. Only one iteration through the training vectors is required. The weight updates for the first input are as follows:

| INPUT $(x_1\ x_2\ 1)$ | TARGET | WEIGHT CHANGES $(\Delta w_1\ \Delta w_2\ \Delta b)$ | WEIGHTS $(w_1\ w_2\ b)$ |
|---|---|---|---|
| | | | (0 0 0) |
| (1 1 1) | 1 | (1 1 1) | (1 1 1) |

a) Derive the separating line for the first input.

b) Draw the decision boundary for the above scenario.

c) Derive the second third, and fourth training patterns shows that learning con-
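The update rule stated in the question (weight change equals input times target, new weights equal old weights plus the change) can be checked with a short sketch. This is only an illustration under stated assumptions: the function names `update_weights` and `response` are hypothetical, the bias is handled as a third weight on a constant input of 1 (matching the `(x1 x2 1)` input column), and the three-valued activation with a dead band of width 0.2 around zero is an assumed reading of "an activation of +1, 0, or -1" together with the threshold 0.2.

```python
THRESHOLD = 0.2  # threshold given in the question


def update_weights(weights, x, t):
    """Return w_new = w_old + x * t, applied component-wise.

    `weights` is (w1, w2, b) and `x` is (x1, x2, 1), so the bias
    change is simply t, as in the question's rule.
    """
    return [w + xi * t for w, xi in zip(weights, x)]


def response(weights, x, threshold=THRESHOLD):
    """Assumed three-valued response: +1, 0, or -1 around the threshold."""
    net = sum(w * xi for w, xi in zip(weights, x))
    if net > threshold:
        return 1
    if net < -threshold:
        return -1
    return 0


# First training pattern from the question: input (1 1 1), target 1.
initial_weights = [0, 0, 0]
first_input = (1, 1, 1)
first_target = 1

new_weights = update_weights(initial_weights, first_input, first_target)
print(new_weights)  # [1, 1, 1], matching the WEIGHTS column in the question
```

With the weights `(1, 1, 1)` the net input is `x1 + x2 + 1`, which is the expression parts a) and b) ask to be turned into a separating line.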