Answered step by step

Verified Expert Solution

Question

1 Approved Answer

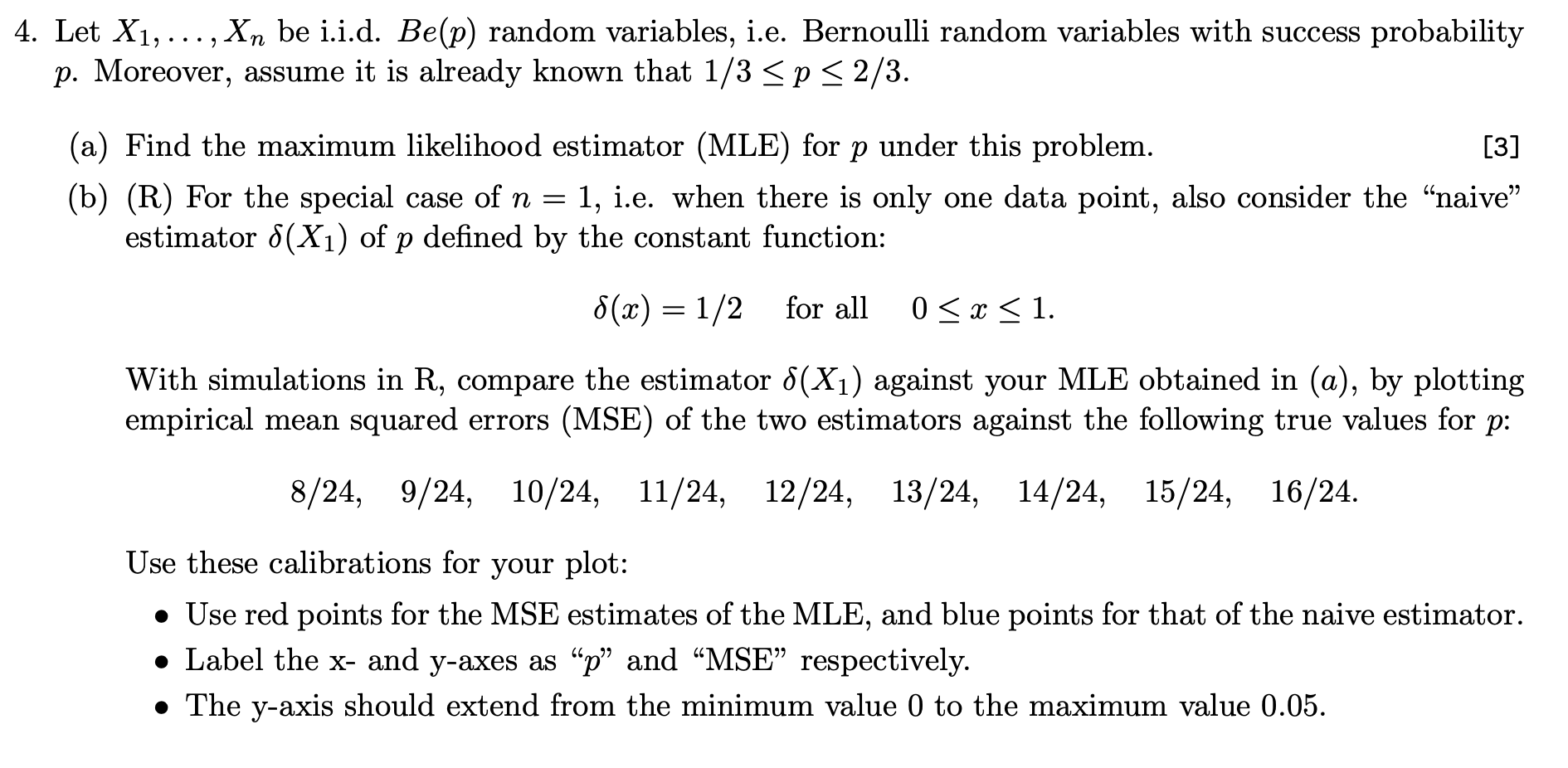

Let ( X_{1}, ldots, X_{n} ) be i.i.d. ( B e(p) ) random variables, i.e. Bernoulli random variables with success probability ( p ). Moreover,

Let \\( X_{1}, \\ldots, X_{n} \\) be i.i.d. \\( B e(p) \\) random variables, i.e. Bernoulli random variables with success probability \\( p \\). Moreover, assume it is already known that \\( 1 / 3 \\leq p \\leq 2 / 3 \\). (a) Find the maximum likelihood estimator (MLE) for \\( p \\) under this problem. [3] (b) (R) For the special case of \\( n=1 \\), i.e. when there is only one data point, also consider the \"naive\" estimator \\( \\delta\\left(X_{1}\ ight) \\) of \\( p \\) defined by the constant function: \\[ \\delta(x)=1 / 2 \\quad \\text { for all } \\quad 0 \\leq x \\leq 1 . \\] With simulations in R, compare the estimator \\( \\delta\\left(X_{1}\ ight) \\) against your MLE obtained in \\( (a) \\), by plotting empirical mean squared errors (MSE) of the two estimators against the following true values for \\( p \\) : \\[ 8 / 24, \\quad 9 / 24, \\quad 10 / 24, \\quad 11 / 24, \\quad 12 / 24, \\quad 13 / 24, \\quad 14 / 24, \\quad 15 / 24, \\quad 16 / 24 . \\] Use these calibrations for your plot: - Use red points for the MSE estimates of the MLE, and blue points for that of the naive estimator. - Label the \\( \\mathrm{x} \\) - and \\( \\mathrm{y} \\)-axes as \" \\( p \\) \" and \"MSE\" respectively. - The y-axis should extend from the minimum value 0 to the maximum value 0.05 . - Use 10000 instances of repeated samples to form the empirical MSE's. (Moral: In certain finite-sample situations, a principled estimator like the MLE is no better than a naive estimator.)

Let \\( X_{1}, \\ldots, X_{n} \\) be i.i.d. \\( B e(p) \\) random variables, i.e. Bernoulli random variables with success probability \\( p \\). Moreover, assume it is already known that \\( 1 / 3 \\leq p \\leq 2 / 3 \\). (a) Find the maximum likelihood estimator (MLE) for \\( p \\) under this problem. [3] (b) (R) For the special case of \\( n=1 \\), i.e. when there is only one data point, also consider the \"naive\" estimator \\( \\delta\\left(X_{1}\ ight) \\) of \\( p \\) defined by the constant function: \\[ \\delta(x)=1 / 2 \\quad \\text { for all } \\quad 0 \\leq x \\leq 1 . \\] With simulations in R, compare the estimator \\( \\delta\\left(X_{1}\ ight) \\) against your MLE obtained in \\( (a) \\), by plotting empirical mean squared errors (MSE) of the two estimators against the following true values for \\( p \\) : \\[ 8 / 24, \\quad 9 / 24, \\quad 10 / 24, \\quad 11 / 24, \\quad 12 / 24, \\quad 13 / 24, \\quad 14 / 24, \\quad 15 / 24, \\quad 16 / 24 . \\] Use these calibrations for your plot: - Use red points for the MSE estimates of the MLE, and blue points for that of the naive estimator. - Label the \\( \\mathrm{x} \\) - and \\( \\mathrm{y} \\)-axes as \" \\( p \\) \" and \"MSE\" respectively. - The y-axis should extend from the minimum value 0 to the maximum value 0.05 . - Use 10000 instances of repeated samples to form the empirical MSE's. (Moral: In certain finite-sample situations, a principled estimator like the MLE is no better than a naive estimator.) Step by Step Solution

There are 3 Steps involved in it

Step: 1

Get Instant Access to Expert-Tailored Solutions

See step-by-step solutions with expert insights and AI powered tools for academic success

Step: 2

Step: 3

Ace Your Homework with AI

Get the answers you need in no time with our AI-driven, step-by-step assistance

Get Started