Answered step by step

Verified Expert Solution

Question

1 Approved Answer

Let's first refresh on the application of Bayes' Rule to models and data in ML. Given a training dataset D and a model 0,

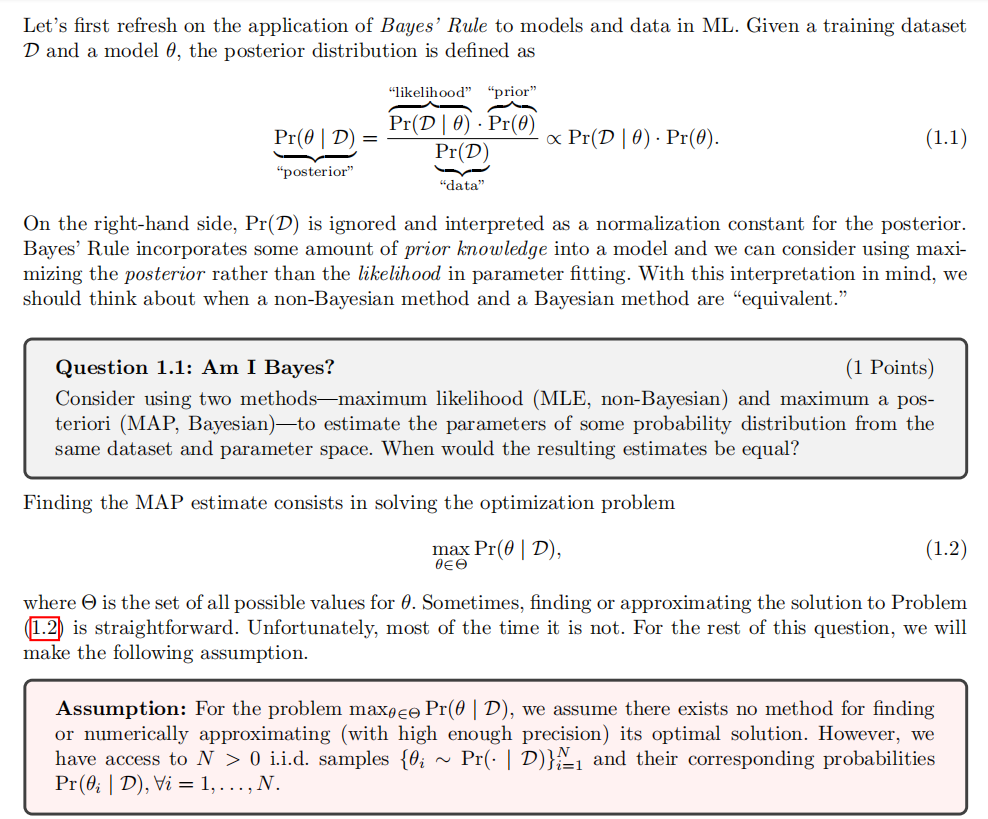

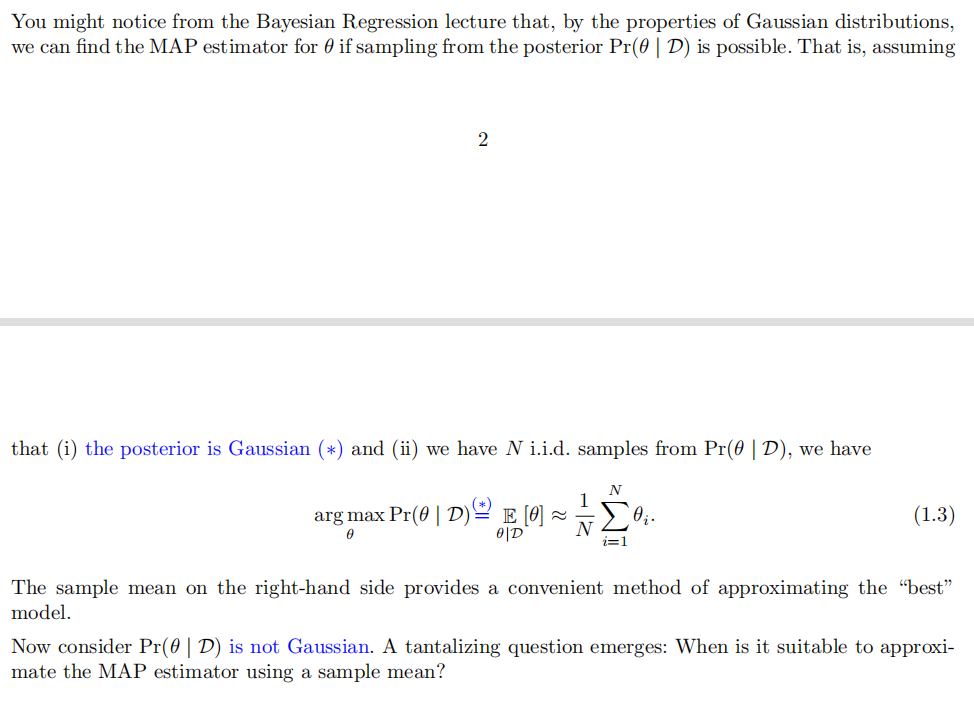

Let's first refresh on the application of Bayes' Rule to models and data in ML. Given a training dataset D and a model 0, the posterior distribution is defined as Pr(0 | D) = "posterior" "likelihood" "prior" Pr(D 0) Pr(0) Pr(D) "data" x Pr(D | 0) Pr(0). (1.1) On the right-hand side, Pr(D) is ignored and interpreted as a normalization constant for the posterior. Bayes' Rule incorporates some amount of prior knowledge into a model and we can consider using maxi- mizing the posterior rather than the likelihood in parameter fitting. With this interpretation in mind, we should think about when a non-Bayesian method and a Bayesian method are "equivalent." Question 1.1: Am I Bayes? (1 Points) Consider using two methods-maximum likelihood (MLE, non-Bayesian) and maximum a pos- teriori (MAP, Bayesian)-to estimate the parameters of some probability distribution from the same dataset and parameter space. When would the resulting estimates be equal? Finding the MAP estimate consists in solving the optimization problem max Pr( D), (1.2) where 0 is the set of all possible values for 0. Sometimes, finding or approximating the solution to Problem (1.2) is straightforward. Unfortunately, most of the time it is not. For the rest of this question, we will make the following assumption. Assumption: For the problem maxe Pr(0 | D), we assume there exists no method for finding or numerically approximating (with high enough precision) its optimal solution. However, we have access to N > 0 i.i.d. samples {0i Pr(D) and their corresponding probabilities Pr(i | D), Vi = 1,..., N. ~ You might notice from the Bayesian Regression lecture that, by the properties of Gaussian distributions, we can find the MAP estimator for 0 if sampling from the posterior Pr(0 | D) is possible. That is, assuming 2 that (i) the posterior is Gaussian (*) and (ii) we have N i.i.d. samples from Pr(0 | D), we have N arg max Pr(0 | D) E [0] Oi- 0 0|D (1.3) The sample mean on the right-hand side provides a convenient method of approximating the "best" model. Now consider Pr(0 | D) is not Gaussian. A tantalizing question emerges: When is it suitable to approxi- mate the MAP estimator using a sample mean?

Step by Step Solution

There are 3 Steps involved in it

Step: 1

The resulting estimates from the maximum likelihood method MLE and the maximum ...

Get Instant Access to Expert-Tailored Solutions

See step-by-step solutions with expert insights and AI powered tools for academic success

Step: 2

Step: 3

Ace Your Homework with AI

Get the answers you need in no time with our AI-driven, step-by-step assistance

Get Started