Answered step by step

Verified Expert Solution

Question

1 Approved Answer

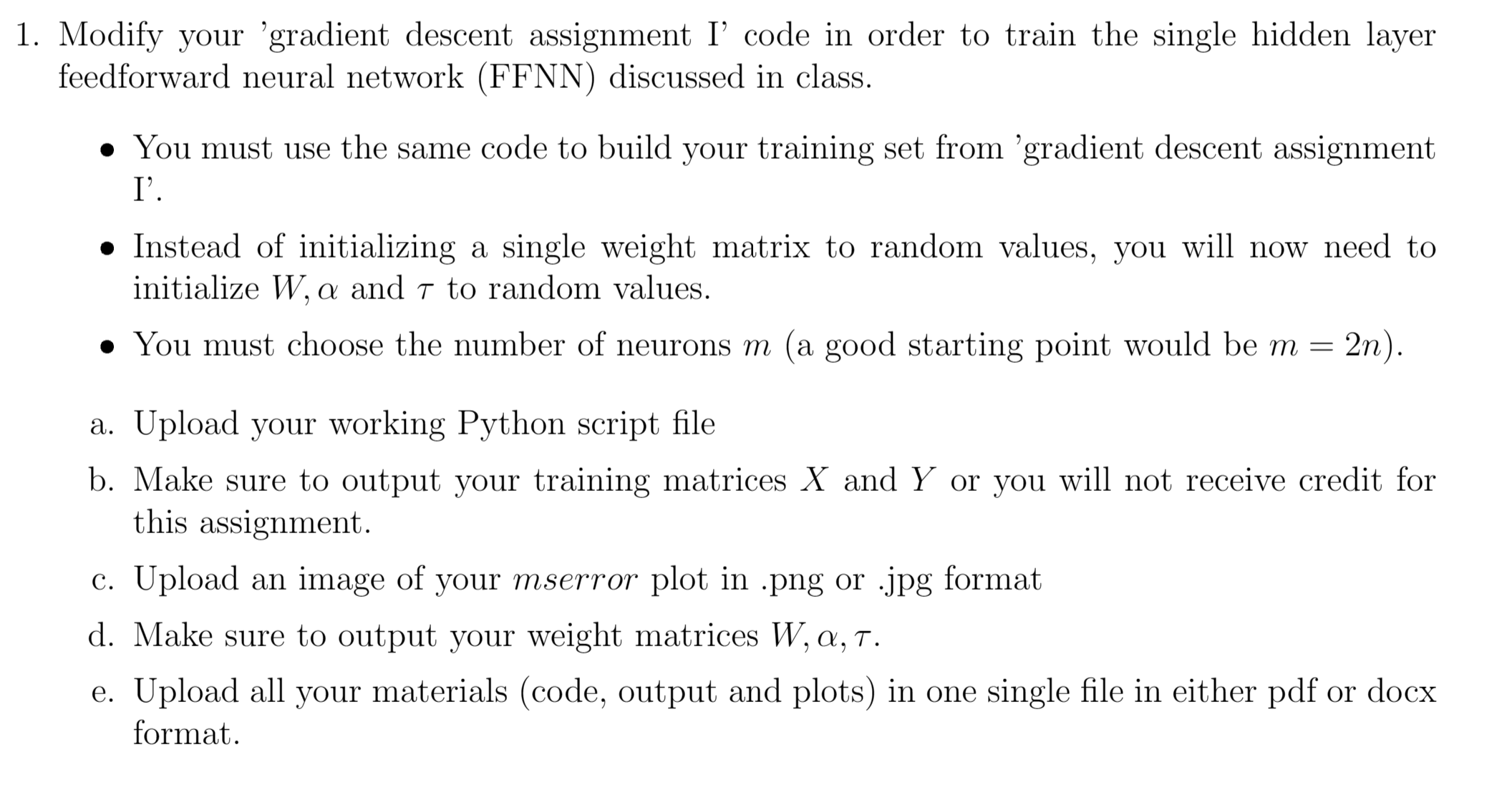

Modify your 'gradient descent assignment I' code in order to train the single hidden layer feedforward neural network (FFNN) discussed in class.


You must use the same code to build your training set from 'gradient descent assignment I'.

Instead of initializing a single weight matrix to random values, you will now need to initialize two weight matrices to random values.

You must choose the number of neurons a good starting point would be
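A single hidden layer network needs one weight matrix per layer. The following is a minimal sketch of that initialization, not the course's reference code. The names `init_ffnn_weights`, `w1`, `w2`, and `m` are hypothetical, the bias is assumed to be handled by an appended row of ones as in the linear code, and the hidden layer size of 10 is an arbitrary illustration rather than the assignment's suggested value.

```python
import numpy as np

def init_ffnn_weights(n, m, k, rng=None):
    """Return (w1, w2) with entries drawn from a standard normal distribution.

    n: input dimension, m: number of hidden neurons, k: output dimension.
    All names here are illustrative assumptions, not taken from the assignment.
    """
    rng = np.random.default_rng() if rng is None else rng
    w1 = rng.normal(size=(m, n + 1))  # hidden layer: n inputs plus a bias column
    w2 = rng.normal(size=(k, m + 1))  # output layer: m hidden units plus a bias column
    return w1, w2

# Example with an arbitrary hidden layer size of 10
w1, w2 = init_ffnn_weights(n=3, m=10, k=2, rng=np.random.default_rng(0))
print(w1.shape, w2.shape)
```

The extra column in each matrix multiplies the constant 1 appended to that layer's input, so no separate bias vectors are needed.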

a) Upload your working Python script file.

b) Make sure to output your training matrices, or you will not receive credit for this assignment.

c) Upload an image of your mserror plot in png or jpg format.

d) Make sure to output your weight matrices.

e) Upload all your materials (code, output, and plots) in one single file in either pdf or docx format.

    import numpy as np
    import matplotlib.pyplot as plt

    def linearnn(x, y, nits, eta):
        # initialize dimensions
        xshape = x.shape
        yshape = y.shape
        n = xshape[0]  # input dimension
        N = xshape[1]  # number of training samples
        k = yshape[0]  # output dimension

        # build the input data matrix (append a row of ones for the bias)
        z = np.ones((1, N))
        a = np.concatenate((x, z), axis=0)

        # initialize random weights
        w = np.random.normal(size=(k, n + 1))

        # initialize mserror storage
        mserror = np.zeros(nits)

        # train the network using gradient descent
        for itcount in range(nits):
            # Compute the gradient of the mean squared error and store it in
            # 'gradientofmse'. The 2/N scaling is reconstructed to match
            # MSE = (1/N) * sum_k ||w a_k - y_k||^2; the source lost its constants.
            gradientofmse = (2.0 / N) * np.dot(np.dot(w, a) - y, a.T)

            # Given 'gradientofmse', update the network weight matrix, w,
            # using the gradient descent formula
            w = w - eta * gradientofmse

            # calculate MSE
            etemp = 0.0
            for trcount in range(N):
                # build the augmented input column vector for sample trcount
                avec = np.concatenate((x[:, trcount], [1.0]), axis=0)
                vk = np.dot(w, avec) - y[:, trcount]
                # accumulate the squared error
                etemp = etemp + np.dot(vk.transpose(), vk)
            mserror[itcount] = (1.0 / N) * etemp

        # test the output
        netout = np.dot(w, a)
        return netout, mserror, w
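The assignment asks for this linear routine to be extended to a single hidden layer network. One way that could look is sketched below. It is an illustration under stated assumptions, not the solution from class: the hidden activation is assumed to be `tanh`, the output layer is assumed linear, and the names `ffnn`, `m`, `w1`, `w2`, `grad_w1`, and `grad_w2` are hypothetical. Unlike the linear code, the MSE is recorded before each weight update.

```python
import numpy as np

def ffnn(x, y, m, nits, eta, seed=0):
    """Train a single hidden layer network with batch gradient descent.

    x: (n, N) inputs, y: (k, N) targets, m: number of hidden neurons.
    Assumes a tanh hidden layer and a linear output layer (both assumptions).
    """
    rng = np.random.default_rng(seed)
    n, N = x.shape
    k = y.shape[0]
    ones = np.ones((1, N))
    a = np.concatenate((x, ones), axis=0)          # augmented inputs, (n+1, N)
    w1 = rng.normal(size=(m, n + 1))               # hidden layer weights
    w2 = rng.normal(size=(k, m + 1))               # output layer weights
    mserror = np.zeros(nits)

    for itcount in range(nits):
        # forward pass
        h = np.tanh(w1 @ a)                        # hidden activations, (m, N)
        hb = np.concatenate((h, ones), axis=0)     # append bias row, (m+1, N)
        err = w2 @ hb - y                          # output error, (k, N)
        mserror[itcount] = np.sum(err ** 2) / N

        # backward pass: gradients of the MSE with respect to w2 and w1
        grad_w2 = (2.0 / N) * err @ hb.T
        delta = (w2[:, :m].T @ err) * (1.0 - h ** 2)   # tanh'(u) = 1 - tanh(u)^2
        grad_w1 = (2.0 / N) * delta @ a.T

        # gradient descent update
        w1 = w1 - eta * grad_w1
        w2 = w2 - eta * grad_w2

    # network output with the final weights
    h = np.tanh(w1 @ a)
    netout = w2 @ np.concatenate((h, ones), axis=0)
    return netout, mserror, w1, w2

# Example run on random data (all sizes and hyperparameters are arbitrary)
rng = np.random.default_rng(1)
x = rng.normal(size=(3, 50))
y = rng.normal(size=(2, 50))
netout, mserror, w1, w2 = ffnn(x, y, m=5, nits=500, eta=0.01)
print(mserror[0], mserror[-1])
```

Because the network is no longer linear in its weights, there is no pseudoinverse solution to compare against; the MSE curve is the main check that training is working.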

    # 'main' starts here

    # set up the training set
    # NOTE: the values of n, k, and N are missing from the source;
    # the numbers below are placeholders
    n = 3    # input dimension (placeholder)
    k = 2    # output dimension (placeholder)
    N = 100  # number of training samples (placeholder)
    x = np.random.normal(size=(n, N))
    y = np.random.normal(size=(k, N))

    # compute the closed form solution
    z = np.ones((1, N))
    a = np.concatenate((x, z), axis=0)
    wpinv = np.dot(y, np.linalg.pinv(a))
    print('Weight matrix computed using the pseudoinverse:')
    print(wpinv)
    print()

    # Call the gradient descent function to compute the weight matrix.
    # You must input a value for the number of iterations, nits.
    # You must input a value for the learning rate, eta.
    # You should run numerical tests with a few different values.
    nits = 1000  # set the number of iterations (placeholder)
    eta = 0.01   # set the learning rate (placeholder)
    netout, mserror, w = linearnn(x, y, nits, eta)
    print('Weight matrix computed via gradient descent:')
    print(w)
    print()

    # Compute the sum of the absolute value of the difference between
    # the weight matrices from pinv and gradient descent
    wsumabsdiff = np.sum(np.absolute(wpinv - w))
    print('Sum of absolute value of the difference between weight matrices:')
    print(wsumabsdiff)

    # plot the mean squared error
    plt.plot(mserror)
    plt.title('Mean Squared Error versus Iteration')
    plt.xlabel('Iteration #')
    plt.ylabel('MSE')
    plt.savefig('plot.png')
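The comparison between `wpinv` and the gradient descent weights rests on a short derivation. As a sketch, assuming the mean squared error is normalized by the number of samples $N$ and that the augmented matrix $A$ (the inputs $X$ with a row of ones appended) has full row rank:

$$
E(W) = \frac{1}{N}\,\lVert W A - Y \rVert_F^2, \qquad
\nabla_W E = \frac{2}{N}\,(W A - Y)\,A^{T}
$$

$$
W \leftarrow W - \eta\,\nabla_W E, \qquad
\nabla_W E = 0 \;\Rightarrow\; W^{*} = Y A^{T}\,(A A^{T})^{-1} = Y A^{+}
$$

Here $\eta$ is the learning rate `eta` and $A^{+}$ is the pseudoinverse returned by `np.linalg.pinv`. For a sufficiently small `eta` and enough iterations, the gradient descent weights should approach $W^{*}$, so the printed sum of absolute differences should shrink toward zero.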
