Question: Part 3 : Counting Sequences of ( N ) - grams ( 2 5 % ) Using WordLengthCount.java as a starting point,

Part : Counting Sequences of N grams

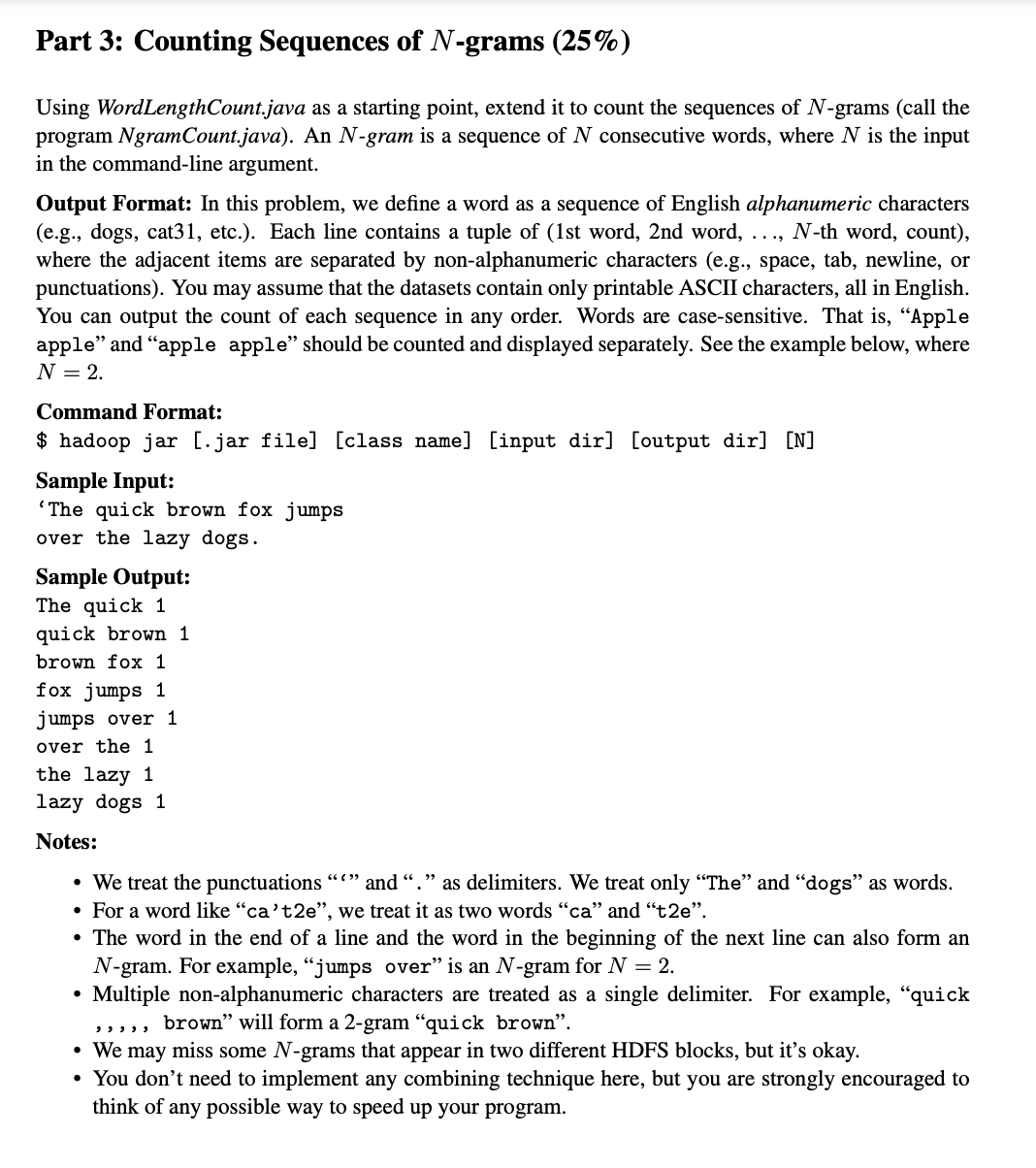

Using WordLengthCount.java as a starting point, extend it to count the sequences of N grams call the program NgramCount.java An N gram is a sequence of N consecutive words, where N is the input in the commandline argument.

Output Format: In this problem, we define a word as a sequence of English alphanumeric characters eg dogs, cat etc. Each line contains a tuple of st word, nd word, N th word, count where the adjacent items are separated by nonalphanumeric characters eg space, tab, newline, or punctuations You may assume that the datasets contain only printable ASCII characters, all in English. You can output the count of each sequence in any order. Words are casesensitive That is "Apple apple" and "apple apple" should be counted and displayed separately. See the example below, where N

Command Format:

$ hadoop jar jar fileclass nameinput diroutput dirN

Sample Input:

'The quick brown fox jumps

over the lazy dogs.

Sample Output:

The quick

quick brown

brown fox

fox jumps

jumps over

over the

the lazy

lazy dogs

Notes:

We treat the punctuations and as delimiters. We treat only "The" and "dogs" as words.

For a word like ca t e we treat it as two words ca and t e

The word in the end of a line and the word in the beginning of the next line can also form an N gram. For example, "jumps over" is an N gram for N

Multiple nonalphanumeric characters are treated as a single delimiter. For example, "quick brown" will form a gram "quick brown".

We may miss some N grams that appear in two different HDFS blocks, but it's okay.

You don't need to implement any combining technique here, but you are strongly encouraged to think of any possible way to speed up your program.

import java.ioIOException;

import java.util.StringTokenizer;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fsPath;

import org.apache.hadoop.ioIntWritable;

import org:apache.hadoop.ios.Text;

import org.apache.hadoop:mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop:mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FilleOutputFormat;

public class WordCount

public static class TokenizerMapper

extends Mapperbject, Text, Text, IntWritable

private final static IntWritable one new IntWritable;

private Text word new Text;

public void mapObject key, Text value, Context context

throws IOException, InterruptedException

StringTokenizer itr. new StringTokenizervaluetoString;

while itrhasMoreTokens

word.setitrnextToken;

context.writeword one;

public static class IntSumReducer

extends Reducer

private IntWritable result new IntWritable;

public void reduceText key, Iterable values,

Context context

throws IOException, InterruptedException

int sum ;

for IntWritable val : values

sum val.get;

result.setsum;

context.writekey result;

public static void mainString args throws Exception

Configuration conf new Configuration;

Job job Job.getInstanceconf "word count";

job.setJarByClassWordCountclass;

job.setMapperClassTokenizerMapperclass;

job.setCombinerClassIntSumReducerclass;

job.setReducerClassIntSumReducerclass;

job.setOutputKeyClassTextclass;

job.setOutputValueClassIntWritableclass;

FileInputFormat.addInputPathjob new Pathargs;

FileutputFormat.setOutputPathjob new Pathargs;

System.exitjobwaitForCompletiontrue : ;

Step by Step Solution

There are 3 Steps involved in it

1 Expert Approved Answer

Step: 1 Unlock

Question Has Been Solved by an Expert!

Get step-by-step solutions from verified subject matter experts

Step: 2 Unlock

Step: 3 Unlock