Please help me get the answer to question (c) to calculate the ELBO. Please see the attached screenshots.

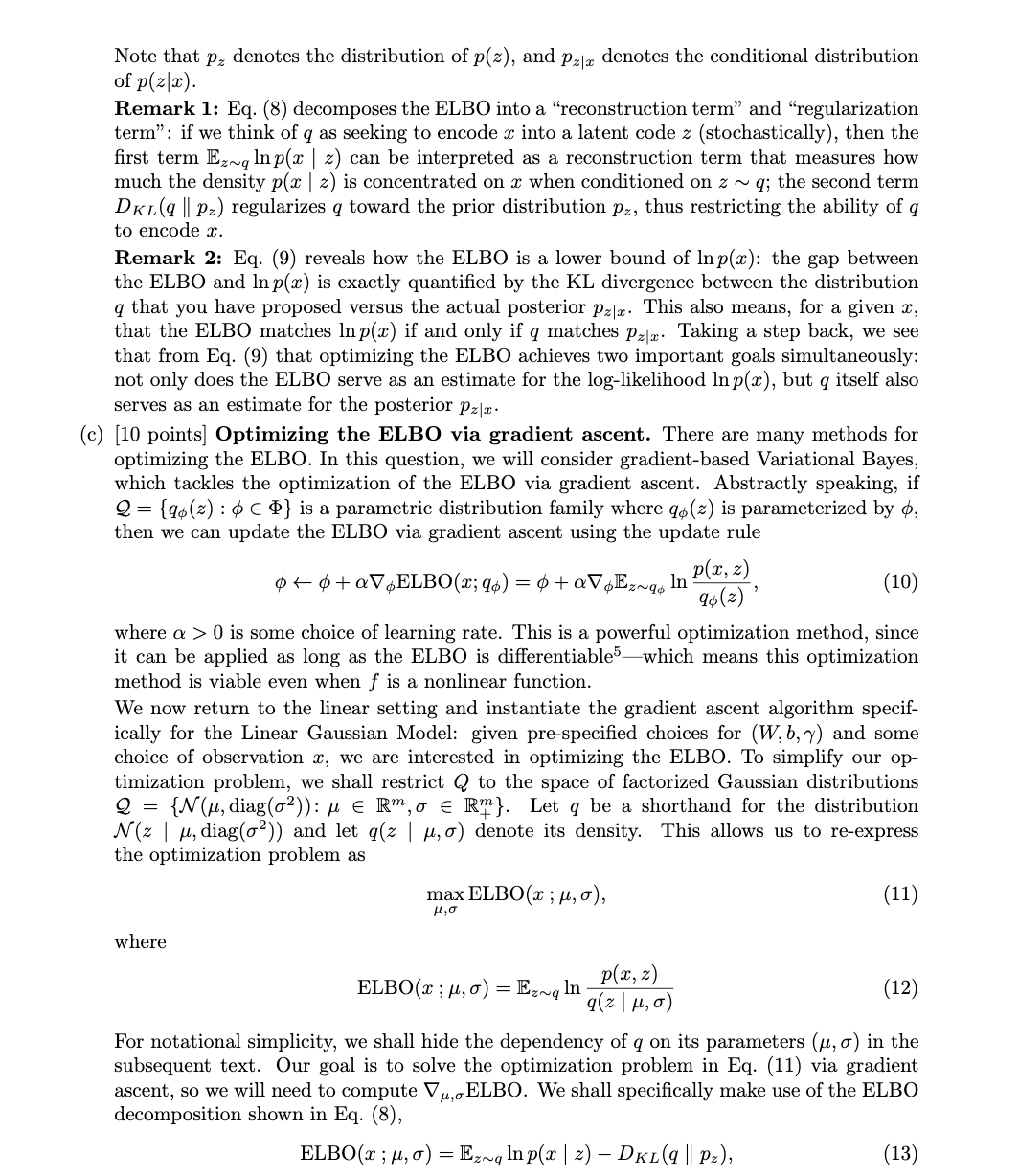

Note that $p_z$ denotes the distribution $p(z)$, and $p_{z \mid x}$ denotes the conditional distribution $p(z \mid x)$.

Remark 1: Eq. (8) decomposes the ELBO into a "reconstruction term" and a "regularization term": if we think of $q$ as seeking to encode $x$ into a latent code $z$ (stochastically), then the first term $\mathbb{E}_{z \sim q} \ln p(x \mid z)$ can be interpreted as a reconstruction term that measures how much the density $p(x \mid z)$ is concentrated on $x$ when conditioned on $z \sim q$; the second term $D_{\mathrm{KL}}(q \,\|\, p_z)$ regularizes $q$ toward the prior distribution $p_z$, thus restricting the ability of $q$ to encode $x$.

Remark 2: Eq. (9) reveals how the ELBO is a lower bound of $\ln p(x)$: the gap between the ELBO and $\ln p(x)$ is exactly quantified by the KL divergence between the distribution $q$ that you have proposed and the actual posterior $p_{z \mid x}$. This also means, for a given $x$, that the ELBO matches $\ln p(x)$ if and only if $q$ matches $p_{z \mid x}$.

Taking a step back, we see from Eq. (9) that optimizing the ELBO achieves two important goals simultaneously: not only does the ELBO serve as an estimate for the log-likelihood $\ln p(x)$, but $q$ itself also serves as an estimate for the posterior $p_{z \mid x}$.

(c) [10 points] Optimizing the ELBO via gradient ascent. There are many methods for optimizing the ELBO. In this question, we will consider gradient-based Variational Bayes, which tackles the optimization of the ELBO via gradient ascent. Abstractly speaking, if $\mathcal{Q} = \{q_\phi(z) : \phi \in \Phi\}$ is a parametric distribution family where $q_\phi(z)$ is parameterized by $\phi$, then we can update the ELBO via gradient ascent using the update rule

$$\phi \leftarrow \phi + \alpha \nabla_\phi \mathrm{ELBO}(x; q_\phi) = \phi + \alpha \nabla_\phi \mathbb{E}_{z \sim q_\phi} \ln \frac{p(x, z)}{q_\phi(z)} \tag{10}$$

where $\alpha > 0$ is some choice of learning rate. This is a powerful optimization method, since it can be applied as long as the ELBO is differentiable, which means this optimization method is viable even when $f$ is a nonlinear function.
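The update rule in Eq. (10) is ordinary gradient ascent on the parameters of $q$. As a minimal sketch of the loop only, the snippet below applies the same update to a toy concave objective. The function name `gradient_ascent`, the toy objective, and the values of `alpha` and `num_steps` are illustrative assumptions and are not part of the assignment; in the actual problem, `grad_fn` would return an estimate of the ELBO gradient.

```python
import numpy as np

def gradient_ascent(grad_fn, phi0, alpha=0.1, num_steps=200):
    """Repeat phi <- phi + alpha * grad_fn(phi), the update pattern of Eq. (10)."""
    phi = np.asarray(phi0, dtype=float)
    for _ in range(num_steps):
        phi = phi + alpha * grad_fn(phi)
    return phi

# Toy stand-in objective (hypothetical, NOT the ELBO):
# f(phi) = -||phi - target||^2, whose gradient is -2 * (phi - target).
target = np.array([1.0, -2.0])

def toy_grad(phi):
    return -2.0 * (phi - target)

phi_star = gradient_ascent(toy_grad, phi0=np.zeros(2))
print(phi_star)
```

Because the update adds the gradient rather than subtracting it, the iterates climb toward the maximizer of the toy objective.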
We now return to the linear setting and instantiate the gradient ascent algorithm specifically for the Linear Gaussian Model: given pre-specified choices for $(W, b, \eta)$ and some choice of observation $x$, we are interested in optimizing the ELBO. To simplify our optimization problem, we shall restrict $\mathcal{Q}$ to the space of factorized Gaussian distributions $\mathcal{Q} = \{\mathcal{N}(\mu, \mathrm{diag}(\sigma^2)) : \mu \in \mathbb{R}^m, \sigma \in \mathbb{R}^m\}$. Let $q$ be a shorthand for the distribution $\mathcal{N}(z \mid \mu, \mathrm{diag}(\sigma^2))$ and let $q(z \mid \mu, \sigma)$ denote its density. This allows us to re-express the optimization problem as

$$\max_{\mu, \sigma} \mathrm{ELBO}(x; \mu, \sigma), \tag{11}$$

where

$$\mathrm{ELBO}(x; \mu, \sigma) = \mathbb{E}_{z \sim q} \ln \frac{p(x, z)}{q(z \mid \mu, \sigma)}. \tag{12}$$

For notational simplicity, we shall hide the dependency of $q$ on its parameters $(\mu, \sigma)$ in the subsequent text. Our goal is to solve the optimization problem in Eq. (11) via gradient ascent, so we will need to compute $\nabla_{\mu,\sigma} \mathrm{ELBO}$. We shall specifically make use of the ELBO decomposition shown in Eq. (8),

$$\mathrm{ELBO}(x; \mu, \sigma) = \mathbb{E}_{z \sim q} \ln p(x \mid z) - D_{\mathrm{KL}}(q \,\|\, p_z), \tag{13}$$

and tackle $\nabla_{\mu,\sigma} \mathbb{E}_{z \sim q} \ln p(x \mid z)$ and $\nabla_{\mu,\sigma} D_{\mathrm{KL}}(q \,\|\, p_z)$ separately.

i. To simplify your task of determining $\nabla_{\mu,\sigma} D_{\mathrm{KL}}(q \,\|\, p_z)$, we have provided the KL divergence between $q$ and $p_z$ for you as follows,

$$D_{\mathrm{KL}}(q \,\|\, p_z) = \sum_{i=1}^{m} \left[ -\ln \sigma_i + \tfrac{1}{2}\left(\sigma_i^2 + \mu_i^2 - 1\right) \right]. \tag{14}$$

Task: Provide closed-form expressions for

$$\nabla_\mu D_{\mathrm{KL}}(q \,\|\, p_z) \tag{15}$$

$$\nabla_\sigma D_{\mathrm{KL}}(q \,\|\, p_z), \tag{16}$$

in terms of $(\mu, \sigma)$. You do not need to prove Eq. (14).

ii. Calculating the gradient of $\mathbb{E}_{z \sim q} \ln p(x \mid z)$ with respect to the parameters of $q$ is tricky since the expectation is dependent on $q$. We can circumvent this issue by observing that sampling $z \sim q$ is equivalent to sampling $\epsilon \sim \mathcal{N}(0, I_m)$ and then calculating $z = \mu + \sigma \odot \epsilon$. Thus,

$$\mathbb{E}_{z \sim q} \ln p(x \mid z) = \mathbb{E}_{\epsilon \sim \mathcal{N}(0, I_m)} \ln p(x \mid \mu + \sigma \odot \epsilon). \tag{17}$$

Since the RHS expectation is not dependent on the parameters of $q$, we can push the gradient operator through the expectation as follows,

$$\nabla_{\mu,\sigma} \mathbb{E}_{z \sim q} \ln p(x \mid z) = \mathbb{E}_{\epsilon \sim \mathcal{N}(0, I_m)} \nabla_{\mu,\sigma} \ln p(x \mid \mu + \sigma \odot \epsilon). \tag{18}$$

This technique is commonly referred to as the reparameterization trick.
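As a sanity check on part i and on the sampling identity behind Eq. (17), the sketch below differentiates Eq. (14) term by term and compares the result with central finite differences, then draws reparameterized samples and inspects their mean and standard deviation. The function names, the dimension `m = 4`, the random seed, and the sample count are illustrative assumptions; the closed forms in `kl_gradients` are candidate answers worth verifying by hand rather than an official solution.

```python
import numpy as np

def kl_to_standard_normal(mu, sigma):
    """Eq. (14): sum_i [ -ln sigma_i + 0.5 * (sigma_i^2 + mu_i^2 - 1) ]."""
    return np.sum(-np.log(sigma) + 0.5 * (sigma**2 + mu**2 - 1.0))

def kl_gradients(mu, sigma):
    """Candidate closed forms from differentiating Eq. (14) term by term."""
    grad_mu = mu                      # d/dmu_i of 0.5 * mu_i^2
    grad_sigma = sigma - 1.0 / sigma  # d/dsigma_i of (-ln sigma_i + 0.5 * sigma_i^2)
    return grad_mu, grad_sigma

def finite_difference(f, v, h=1e-6):
    """Central-difference gradient of a scalar function f at the vector v."""
    grad = np.zeros_like(v)
    for i in range(v.size):
        step = np.zeros_like(v)
        step[i] = h
        grad[i] = (f(v + step) - f(v - step)) / (2.0 * h)
    return grad

rng = np.random.default_rng(0)
m = 4                                   # latent dimension (arbitrary choice)
mu = rng.normal(size=m)
sigma = rng.uniform(0.5, 2.0, size=m)   # kept positive so ln(sigma) is defined

grad_mu, grad_sigma = kl_gradients(mu, sigma)
fd_mu = finite_difference(lambda v: kl_to_standard_normal(v, sigma), mu)
fd_sigma = finite_difference(lambda v: kl_to_standard_normal(mu, v), sigma)
print(np.max(np.abs(grad_mu - fd_mu)), np.max(np.abs(grad_sigma - fd_sigma)))

# Reparameterized sampling: eps ~ N(0, I_m), then z = mu + sigma * eps (elementwise).
eps = rng.standard_normal(size=(200_000, m))
z = mu + sigma * eps
print(z.mean(axis=0) - mu, z.std(axis=0) - sigma)
```

If the closed forms are right, both printed gradient discrepancies should be near zero, and the sample mean and standard deviation of `z` should sit close to `mu` and `sigma`.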
Task: Provide closed-form expressions for

$$\nabla_\mu \ln p(x \mid \mu + \sigma \odot \epsilon) \tag{19}$$

$$\nabla_\sigma \ln p(x \mid \mu + \sigma \odot \epsilon), \tag{20}$$

in terms of $(x, \eta, W, b, z, \epsilon)$, where $z = \mu + \sigma \odot \epsilon$.

Hint: First determine the closed-form expression for

$$\nabla_z \ln p(x \mid z) \tag{21}$$

in terms of $(x, \eta, W, b, z)$. Then, using $z = \mu + \sigma \odot \epsilon$, apply the chain rule to determine $\nabla_\mu \ln p(x \mid \mu + \sigma \odot \epsilon)$ and $\nabla_\sigma \ln p(x \mid \mu + \sigma \odot \epsilon)$.

Remark: Exact calculation of the expected gradient $\mathbb{E}_{\epsilon \sim \mathcal{N}(0, I_m)} \nabla_{\mu,\sigma} \ln p(x \mid \mu + \sigma \odot \epsilon)$ is often intractable, and must instead be estimated via Monte Carlo sampling (hence the name of the final algorithm, Stochastic Gradient Variational Bayes, or SGVB). Although this expectation is actually tractable in the Linear Gaussian Model setting, we shall, for the sake of implementing SGVB faithfully, estimate the expectation via Monte Carlo sampling anyway in the coding problem Q3d.
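The hint above can be sketched numerically. The excerpt does not show the definition of $p(x \mid z)$, so the code below ASSUMES the likelihood $p(x \mid z) = \mathcal{N}(x \mid Wz + b, \eta^2 I)$; if the assignment defines the noise differently (for example with $\eta$ as a variance), the $1/\eta^2$ factor changes accordingly. All function names and the numeric values of `W`, `b`, `eta`, `x`, `mu`, and `sigma` are hypothetical.

```python
import numpy as np

def log_likelihood(x, z, W, b, eta):
    """ln p(x | z) under the ASSUMED model p(x | z) = N(x | W z + b, eta^2 I)."""
    resid = x - (W @ z + b)
    return -0.5 * (resid @ resid) / eta**2 - 0.5 * x.size * np.log(2.0 * np.pi * eta**2)

def grad_z_log_likelihood(x, z, W, b, eta):
    """Eq. (21) under the assumed model: W^T (x - W z - b) / eta^2."""
    return W.T @ (x - (W @ z + b)) / eta**2

def reparam_gradients(x, mu, sigma, eps, W, b, eta):
    """Chain rule through z = mu + sigma * eps (elementwise product)."""
    z = mu + sigma * eps
    g_z = grad_z_log_likelihood(x, z, W, b, eta)
    # dz/dmu is the identity and dz/dsigma is diag(eps).
    return g_z, eps * g_z

def monte_carlo_gradients(x, mu, sigma, W, b, eta, num_samples, rng):
    """Average per-sample gradients over eps ~ N(0, I_m), as in Eq. (18)."""
    eps = rng.standard_normal(size=(num_samples, mu.size))
    z = mu + sigma * eps                      # shape (S, m)
    g_z = (x - (z @ W.T + b)) @ W / eta**2    # row s is W^T (x - W z_s - b) / eta^2
    return g_z.mean(axis=0), (eps * g_z).mean(axis=0)

# Hypothetical problem instance with n = 3 observed and m = 2 latent dimensions.
W = np.array([[1.0, 0.5], [-0.3, 0.8], [0.2, -0.6]])
b = np.array([0.1, -0.2, 0.3])
eta = 1.0
x = np.array([0.5, 1.0, -0.4])
mu = np.array([0.2, -0.1])
sigma = np.array([0.7, 1.2])

rng = np.random.default_rng(0)
mc_grad_mu, mc_grad_sigma = monte_carlo_gradients(
    x, mu, sigma, W, b, eta, num_samples=100_000, rng=rng
)
print(mc_grad_mu, mc_grad_sigma)
```

Under this assumed model the log-likelihood is quadratic in $z$, so the Monte Carlo averages can be compared against the exact expectation, which is consistent with the remark that the expectation is tractable in the linear setting.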