Please help with this Machine Learning question in detail , thank you !

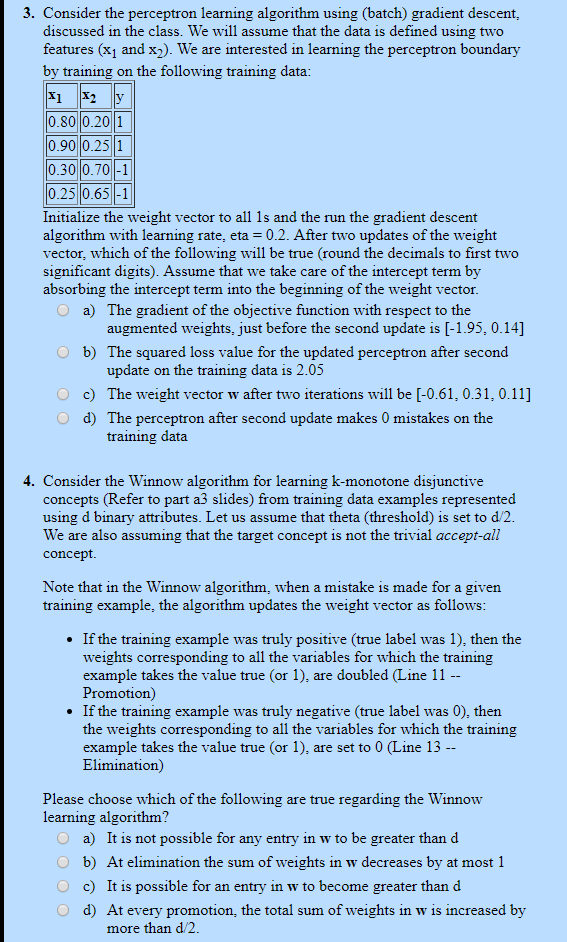

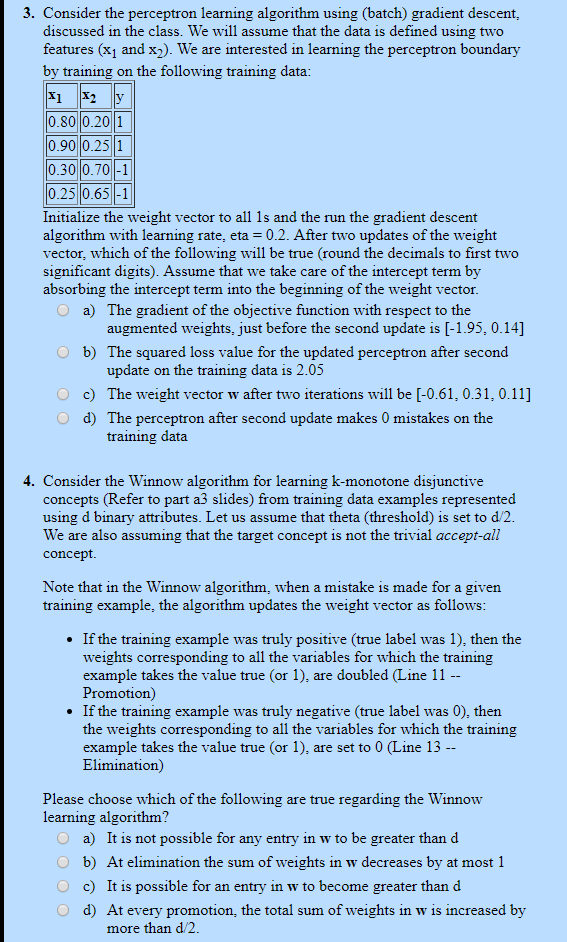

3. Consider the perceptron learning algorithm using (batch) gradient descent, discussed in the class. We will assume that the data is defined using two features (x1 and x)). We are interested in learning the perceptron boundary by training on the following training data: 0.800.20||1 0.90 0.251 |0.300.701 |0.25 0.65 -1 Initialize the weight vector to all ls and the run the gradient descent algorithm with learning rate, eta = 0.2. After two updates of the weight vector, which of the following will be true (round the decimals to first two significant digits). Assume that we take care of the intercept term by absorbing the intercept term into the beginning of the weight vector. O a) The gradient of the objective function with respect to the augmented weights, just before the second update is [-1.95, 0.14] O b) The squared loss value for the updated perceptron after second update on the training data is 2.05 O c) The weight vector w after two iterations will be (-0.61, 0.31, 0.11] 0 d) The perceptron after second update makes O mistakes on the training data 4. Consider the Winnow algorithm for learning k-monotone disjunctive concepts (Refer to part a3 slides) from training data examples represented using d binary attributes. Let us assume that theta (threshold) is set to d/2. We are also assuming that the target concept is not the trivial accept-all concept. Note that in the Winnow algorithm, when a mistake is made for a given training example, the algorithm updates the weight vector as follows: If the training example was truly positive (true label was 1), then the weights corresponding to all the variables for which the training example takes the value true (or 1), are doubled (Line 11 -- Promotion) If the training example was truly negative (true label was 0), then the weights corresponding to all the variables for which the training example takes the value true (or 1), are set to 0 (Line 13 -- Elimination) Please choose which of the following are true regarding the Winnow learning algorithm? O a) It is not possible for any entry in w to be greater than d O b) At elimination the sum of weights in w decreases by at most 1 O c) It is possible for an entry in w to become greater than d O d) At every promotion, the total sum of weights in w is increased by more than 1/2. 3. Consider the perceptron learning algorithm using (batch) gradient descent, discussed in the class. We will assume that the data is defined using two features (x1 and x)). We are interested in learning the perceptron boundary by training on the following training data: 0.800.20||1 0.90 0.251 |0.300.701 |0.25 0.65 -1 Initialize the weight vector to all ls and the run the gradient descent algorithm with learning rate, eta = 0.2. After two updates of the weight vector, which of the following will be true (round the decimals to first two significant digits). Assume that we take care of the intercept term by absorbing the intercept term into the beginning of the weight vector. O a) The gradient of the objective function with respect to the augmented weights, just before the second update is [-1.95, 0.14] O b) The squared loss value for the updated perceptron after second update on the training data is 2.05 O c) The weight vector w after two iterations will be (-0.61, 0.31, 0.11] 0 d) The perceptron after second update makes O mistakes on the training data 4. Consider the Winnow algorithm for learning k-monotone disjunctive concepts (Refer to part a3 slides) from training data examples represented using d binary attributes. Let us assume that theta (threshold) is set to d/2. We are also assuming that the target concept is not the trivial accept-all concept. Note that in the Winnow algorithm, when a mistake is made for a given training example, the algorithm updates the weight vector as follows: If the training example was truly positive (true label was 1), then the weights corresponding to all the variables for which the training example takes the value true (or 1), are doubled (Line 11 -- Promotion) If the training example was truly negative (true label was 0), then the weights corresponding to all the variables for which the training example takes the value true (or 1), are set to 0 (Line 13 -- Elimination) Please choose which of the following are true regarding the Winnow learning algorithm? O a) It is not possible for any entry in w to be greater than d O b) At elimination the sum of weights in w decreases by at most 1 O c) It is possible for an entry in w to become greater than d O d) At every promotion, the total sum of weights in w is increased by more than 1/2