Question

Please refer to the picture

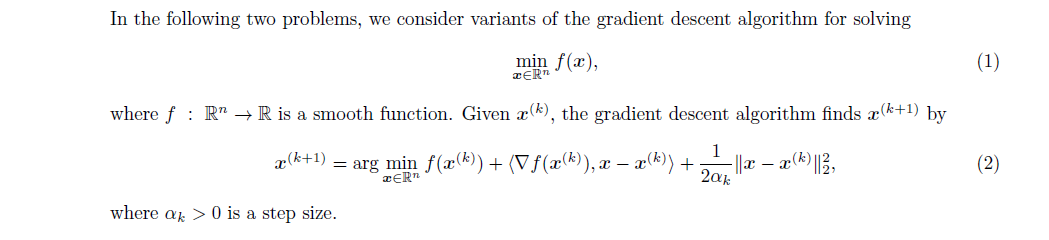

In the following two problems, we consider variants of the gradient descent algorithm for solving

$$\min_{x \in \mathbb{R}^n} f(x), \tag{1}$$

where $f : \mathbb{R}^n \to \mathbb{R}$ is a smooth function. Given $x^{(k)}$, the gradient descent algorithm finds $x^{(k+1)}$ by

$$x^{(k+1)} = \arg\min_{x \in \mathbb{R}^n} \; f(x^{(k)}) + \langle \nabla f(x^{(k)}),\, x - x^{(k)} \rangle + \frac{1}{2\alpha_k} \|x - x^{(k)}\|_2^2, \tag{2}$$

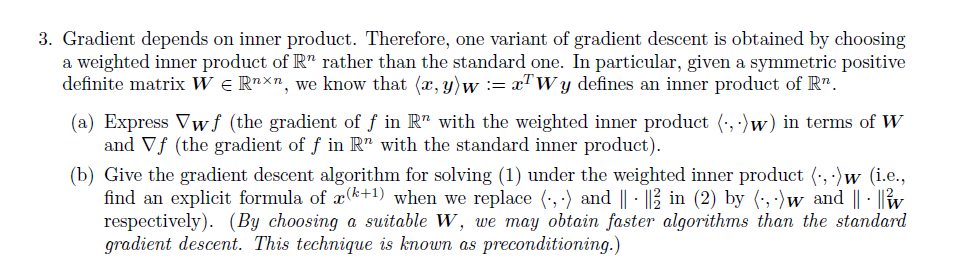
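As a sanity check on the update rule, setting the gradient of the quadratic model to zero gives the familiar step $x^{(k+1)} = x^{(k)} - \alpha \nabla f(x^{(k)})$, writing the step-size parameter in the quadratic term as `alpha` (a naming assumption). The sketch below, with made-up values for the current point, function value, and gradient, compares the model value at that step against randomly perturbed points.

```python
import random


def model(x, xk, fk, gk, alpha):
    """Quadratic model f(xk) + <gk, x - xk> + ||x - xk||^2 / (2 * alpha)."""
    diff = [xi - xki for xi, xki in zip(x, xk)]
    linear = sum(g * d for g, d in zip(gk, diff))
    quad = sum(d * d for d in diff) / (2.0 * alpha)
    return fk + linear + quad


# Hypothetical values, chosen for illustration only.
xk = [1.0, -2.0]   # current iterate x^(k)
fk = 3.0           # f(x^(k))
gk = [4.0, -1.0]   # gradient of f at x^(k)
alpha = 0.5        # step size (assumed name)

# Candidate minimizer: the plain gradient step.
x_next = [xki - alpha * g for xki, g in zip(xk, gk)]
best = model(x_next, xk, fk, gk, alpha)

# No randomly perturbed point should give a smaller model value.
random.seed(0)
perturbed = [
    model([xi + random.uniform(-1, 1) for xi in x_next], xk, fk, gk, alpha)
    for _ in range(1000)
]
is_minimizer = all(best <= value + 1e-12 for value in perturbed)
```

The model value at the gradient step equals $f(x^{(k)}) - \tfrac{\alpha}{2}\|\nabla f(x^{(k)})\|_2^2$, which is what `best` evaluates to here.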

The gradient depends on the choice of inner product. Therefore, one variant of gradient descent is obtained by choosing a weighted inner product on $\mathbb{R}^n$ rather than the standard one. In particular, given a symmetric positive definite matrix $W \in \mathbb{R}^{n \times n}$, we know that $\langle x, y \rangle_W := x^T W y$ defines an inner product on $\mathbb{R}^n$.

(a) Express $\nabla_W f$ (the gradient of $f$ in $\mathbb{R}^n$ with the weighted inner product $\langle \cdot, \cdot \rangle_W$) in terms of $W$ and $\nabla f$ (the gradient of $f$ in $\mathbb{R}^n$ with the standard inner product).

(b) Give the gradient descent algorithm for solving (1) under the weighted inner product $\langle \cdot, \cdot \rangle_W$ (i.e., find an explicit formula for $x^{(k+1)}$ when we replace $\langle \cdot, \cdot \rangle$ and $\|\cdot\|_2^2$ in (2) by $\langle \cdot, \cdot \rangle_W$ and $\|\cdot\|_W^2$, respectively).

(By choosing a suitable $W$, we may obtain faster algorithms than the standard gradient descent. This technique is known as preconditioning.)
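A candidate answer can be checked numerically. Requiring $\langle \nabla_W f, v \rangle_W = \langle \nabla f, v \rangle$ for every $v$ gives $\nabla_W f = W^{-1} \nabla f$, and minimizing the weighted model then gives $x^{(k+1)} = x^{(k)} - \alpha W^{-1} \nabla f(x^{(k)})$. The sketch below applies that update to a hypothetical two-dimensional quadratic; the test problem, the helper names, and the step-size name `alpha` are all assumptions made for illustration.

```python
def mat_vec(M, v):
    """Multiply a small dense matrix (list of rows) by a vector."""
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in M]


def solve_2x2(W, g):
    """Solve W d = g for a 2x2 matrix W using Cramer's rule."""
    (a, b), (c, d) = W
    det = a * d - b * c
    return [(d * g[0] - b * g[1]) / det, (a * g[1] - c * g[0]) / det]


def weighted_gd(grad, W, x0, alpha, steps):
    """Iterate x <- x - alpha * W^{-1} grad(x) for a 2x2 SPD matrix W."""
    x = list(x0)
    for _ in range(steps):
        d = solve_2x2(W, grad(x))  # d = W^{-1} grad f(x), i.e. the W-gradient
        x = [xi - alpha * di for xi, di in zip(x, d)]
    return x


# Hypothetical test problem: f(x) = 0.5 * x^T A x - b^T x, so grad f(x) = A x - b.
A = [[10.0, 0.0], [0.0, 1.0]]
b = [10.0, 1.0]  # the minimizer is x* = [1, 1]


def grad(x):
    return [r - bi for r, bi in zip(mat_vec(A, x), b)]


identity = [[1.0, 0.0], [0.0, 1.0]]

# W = I recovers standard gradient descent; it is still short of x* after 20 steps.
x_plain = weighted_gd(grad, identity, [0.0, 0.0], alpha=0.1, steps=20)

# W = A is the ideal preconditioner for this quadratic; one step with alpha = 1 reaches x*.
x_precond = weighted_gd(grad, A, [0.0, 0.0], alpha=1.0, steps=1)
```

On this ill-conditioned example the second coordinate of `x_plain` is still more than 0.1 away from the minimizer after 20 steps, while `x_precond` lands on it in a single step, which illustrates the preconditioning remark in the problem statement. Choosing `W = A` is only possible here because the toy problem is a known quadratic.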
