Question

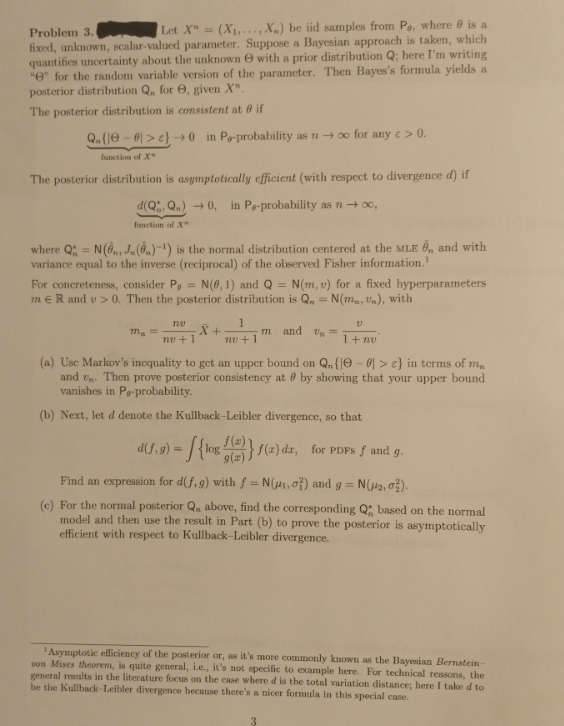

Problem 3. Let $X^n = (X_1, \ldots, X_n)$ be iid samples from $P_\theta$, where $\theta$ is a fixed, unknown, scalar-valued parameter. Suppose a Bayesian approach is taken, which quantifies uncertainty about the unknown $\theta$ with a prior distribution $Q$; here I'm writing "$\Theta$" for the random variable version of the parameter. Then Bayes's formula yields a posterior distribution $Q_n$ for $\Theta$, given $X^n$.

The posterior distribution is consistent at $\theta$ if

$$\underbrace{Q_n(|\Theta - \theta| > \varepsilon)}_{\text{function of } X^n} \to 0 \quad \text{in } P_\theta\text{-probability as } n \to \infty, \text{ for any } \varepsilon > 0.$$

The posterior distribution is asymptotically efficient (with respect to divergence $d$) if

$$\underbrace{d(Q_n, \hat{Q}_n)}_{\text{function of } X^n} \to 0 \quad \text{in } P_\theta\text{-probability as } n \to \infty,$$

where $\hat{Q}_n = N(\hat\theta_n, J_n(\hat\theta_n)^{-1})$ is the normal distribution centered at the MLE $\hat\theta_n$, and with variance equal to the inverse (reciprocal) of the observed Fisher information.¹

For concreteness, consider $P_\theta = N(\theta, 1)$ and $Q = N(m, v)$ for fixed hyperparameters $m \in \mathbb{R}$ and $v > 0$. Then the posterior distribution is $Q_n = N(m_n, v_n)$, with

$$m_n = \frac{nv}{nv+1}\,\bar{X} + \frac{1}{nv+1}\,m \quad \text{and} \quad v_n = \frac{v}{1+nv}.$$

(a) Use Markov's inequality to get an upper bound on $Q_n(|\Theta - \theta| > \varepsilon)$ in terms of $m_n$ and $v_n$. Then prove posterior consistency at $\theta$ by showing that your upper bound vanishes in $P_\theta$-probability.

(b) Next, let $d$ denote the Kullback-Leibler divergence, so that

$$d(f, g) = \int \Bigl\{ \log \frac{f(x)}{g(x)} \Bigr\} f(x)\,dx, \quad \text{for PDFs } f \text{ and } g.$$

Find an expression for $d(f, g)$ with $f = N(\mu_1, \sigma_1^2)$ and $g = N(\mu_2, \sigma_2^2)$.

(c) For the normal posterior $Q_n$ above, find the corresponding $\hat{Q}_n$ based on the normal model and then use the result in Part (b) to prove the posterior is asymptotically efficient with respect to Kullback-Leibler divergence.

¹ Asymptotic efficiency of the posterior or, as it's more commonly known, the Bayesian Bernstein-von Mises theorem, is quite general, i.e., it's not specific to the example here. For technical reasons, the general results in the literature focus on the case where $d$ is the total variation distance; here I take $d$ to be the Kullback-Leibler divergence because there's a nicer formula in this special case.
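Before attempting the proofs, it can help to check the claims numerically. The sketch below is only an illustration, not a solution: it simulates data from the normal model, computes the posterior mean and variance from the formulas in the problem, and evaluates two quantities that should shrink as n grows. It assumes the standard closed form for the Kullback-Leibler divergence between two normal distributions, a Markov bound applied to the squared deviation, and the normal-model approximation N(sample mean, 1/n). The function names and the values chosen for theta, m, v and eps are all hypothetical.

```python
import math
import random


def posterior_params(xs, m, v):
    """Posterior N(m_n, v_n) for N(theta, 1) data under a N(m, v) prior."""
    n = len(xs)
    xbar = sum(xs) / n
    m_n = (n * v * xbar + m) / (n * v + 1)
    v_n = v / (1 + n * v)
    return m_n, v_n


def kl_normal(mu1, var1, mu2, var2):
    """KL divergence d(f, g) with f = N(mu1, var1) and g = N(mu2, var2).

    Assumes the standard closed form for two univariate normals.
    """
    return 0.5 * (math.log(var2 / var1) + (var1 + (mu1 - mu2) ** 2) / var2 - 1.0)


def markov_bound(m_n, v_n, theta, eps):
    """Markov bound on Q_n(|Theta - theta| > eps), applied to (Theta - theta)^2.

    The posterior second moment about theta is v_n + (m_n - theta)^2.
    """
    return min(1.0, (v_n + (m_n - theta) ** 2) / eps ** 2)


def simulate(theta=0.7, m=0.0, v=2.0, eps=0.1,
             sizes=(10, 100, 1000, 10000), seed=0):
    """Return (n, Markov bound, KL) for each sample size (illustrative values)."""
    rng = random.Random(seed)
    rows = []
    for n in sizes:
        xs = [rng.gauss(theta, 1.0) for _ in range(n)]
        xbar = sum(xs) / n
        m_n, v_n = posterior_params(xs, m, v)
        # Normal model: the MLE is the sample mean and the observed
        # Fisher information is n, so the approximation is N(xbar, 1/n).
        kl = kl_normal(m_n, v_n, xbar, 1.0 / n)
        rows.append((n, markov_bound(m_n, v_n, theta, eps), kl))
    return rows


if __name__ == "__main__":
    for n, bound, kl in simulate():
        print(f"n={n:>6}  Markov bound={bound:.4f}  KL={kl:.6f}")
```

Both columns should trend toward zero as n increases, which is what parts (a) and (c) ask you to prove; the simulation does not replace the argument that the convergence holds in probability.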
