Question

Provide Python code.

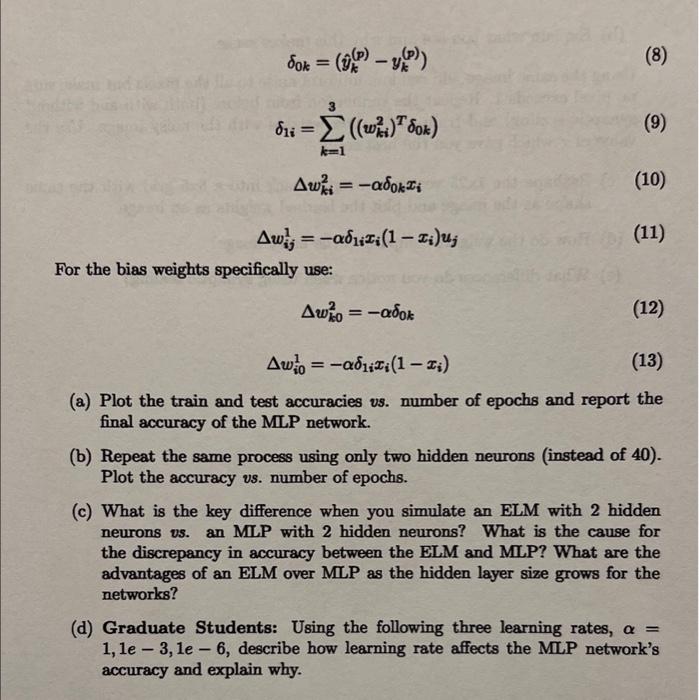

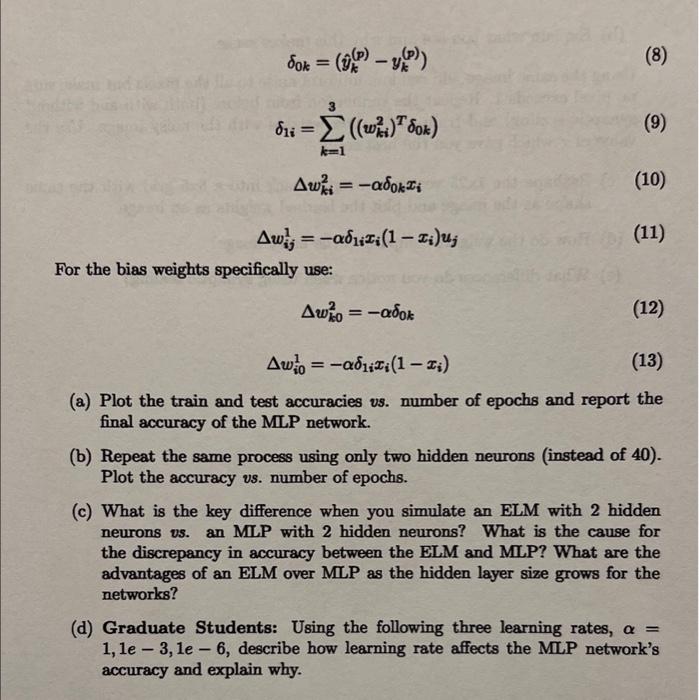

Question 4) Multi-Layer Perceptron: (30 points) Here we will study how to train an MLP with backpropagation. Using the same network architecture as for the ELM, you will now backpropagate the error to train both the first set of weights (from the input to the hidden layer) and the second set of weights (from the hidden layer to the output layer). In the code provided, use the same forward pass implementation as in the ELM. For the backward pass, the weight update equations are

$$\delta^0_k = \hat{y}_k(p) - y_k(p)$$

$$\delta^1_i = \sum_{k=1}^{3} \left(w^2_{ki}\right)^T \delta^0_k$$

$$\Delta w^2_{ki} = \eta\, \delta^0_k\, x_i$$

$$\Delta w^1_{ij} = \eta\, \delta^1_i\, x_i (1 - x_i)\, u_j$$

For the bias weights specifically use:

$$\Delta w^2_{k0} = \eta\, \delta^0_k \qquad \Delta w^1_{i0} = \eta\, \delta^1_i\, x_i (1 - x_i)$$

(a) Plot the train and test accuracies vs. number of epochs and report the final accuracy of the MLP network.

(b) Repeat the same process using only two hidden neurons (instead of 40). Plot the accuracy vs. number of epochs.

(c) What is the key difference when you simulate an ELM with 2 hidden neurons vs. an MLP with 2 hidden neurons? What is the cause for the discrepancy in accuracy between the ELM and MLP? What are the advantages of an ELM over MLP as the hidden layer size grows for the networks?

(d) Graduate Students: Using the following three learning rates, $\eta = 1,\ 10^{-3},\ 10^{-6}$, describe how the learning rate affects the MLP network's accuracy and explain why.
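The course's starter code and ELM forward pass are not shown here. What follows is only a minimal NumPy sketch of the backward pass above, and it rests on several assumptions:

- The hidden layer is sigmoid, as implied by the $x_i(1 - x_i)$ factor.
- The output layer is linear.
- $\hat{y}_k(p)$ is the target and $y_k(p)$ is the network output. Under this reading the updates are added. If your notes mean the opposite, use `delta0 = y - t` and subtract the updates instead.
- Updates are applied one pattern at a time (online).
- Biases are stored in column 0 of each weight matrix.

The dataset helper `make_toy_data`, the initialization scale, and the defaults `eta=1e-2` and `epochs=50` are hypothetical choices for illustration, not values from the assignment. `train_mlp` returns per-epoch train and test accuracies for parts (a) and (b). The `__main__` block also loops over the three learning rates from part (d).

```python
import numpy as np


def sigmoid(z):
    # Logistic hidden activation; its derivative is x * (1 - x), matching the update rule.
    return 1.0 / (1.0 + np.exp(-z))


def make_toy_data(n_per_class=60, seed=0):
    # HYPOTHETICAL stand-in for the course dataset: three 2-D Gaussian clusters, one-hot targets.
    rng = np.random.default_rng(seed)
    centers = np.array([[0.0, 0.0], [3.0, 3.0], [0.0, 4.0]])
    U = np.vstack([c + rng.normal(scale=0.7, size=(n_per_class, 2)) for c in centers])
    T = np.eye(3)[np.repeat(np.arange(3), n_per_class)]
    perm = rng.permutation(len(U))
    return U[perm], T[perm]


def forward(W1, W2, U):
    # Assumed ELM-style forward pass; column 0 of W1/W2 holds the biases w_i0^1 / w_k0^2.
    X = sigmoid(U @ W1[:, 1:].T + W1[:, 0])  # hidden activations x_i
    Y = X @ W2[:, 1:].T + W2[:, 0]           # linear network outputs y_k
    return X, Y


def accuracy(W1, W2, U, T):
    # Fraction of patterns whose largest output matches the one-hot target class.
    _, Y = forward(W1, W2, U)
    return float(np.mean(np.argmax(Y, axis=1) == np.argmax(T, axis=1)))


def backprop_step(W1, W2, u, t, eta):
    # One online update for a single pattern (u, t), modifying W1 and W2 in place.
    x, y = forward(W1, W2, u)
    delta0 = t - y                          # delta_k^0 = target - output (assumed sign convention)
    delta1 = W2[:, 1:].T @ delta0           # delta_i^1 = sum_k w_ki^2 delta_k^0, using W2 before its update
    g = delta1 * x * (1.0 - x)              # delta_i^1 x_i (1 - x_i)
    W2[:, 1:] += eta * np.outer(delta0, x)  # Delta w_ki^2 = eta delta_k^0 x_i
    W2[:, 0] += eta * delta0                # Delta w_k0^2 = eta delta_k^0
    W1[:, 1:] += eta * np.outer(g, u)       # Delta w_ij^1 = eta delta_i^1 x_i (1 - x_i) u_j
    W1[:, 0] += eta * g                     # Delta w_i0^1 = eta delta_i^1 x_i (1 - x_i)


def train_mlp(U_tr, T_tr, U_te, T_te, n_hidden=40, eta=1e-2, epochs=50, seed=0):
    # Random initial weights (scale is a hypothetical choice), then per-pattern backprop each epoch.
    rng = np.random.default_rng(seed)
    W1 = rng.normal(scale=0.5, size=(n_hidden, U_tr.shape[1] + 1))
    W2 = rng.normal(scale=0.5, size=(T_tr.shape[1], n_hidden + 1))
    train_acc, test_acc = [], []
    for _ in range(epochs):
        for p in rng.permutation(len(U_tr)):  # visit patterns p in random order each epoch
            backprop_step(W1, W2, U_tr[p], T_tr[p], eta)
        train_acc.append(accuracy(W1, W2, U_tr, T_tr))
        test_acc.append(accuracy(W1, W2, U_te, T_te))
    return train_acc, test_acc


if __name__ == "__main__":
    U, T = make_toy_data()
    split = int(0.7 * len(U))
    U_tr, T_tr, U_te, T_te = U[:split], T[:split], U[split:], T[split:]
    for n_hidden in (40, 2):  # parts (a) and (b)
        tr, te = train_mlp(U_tr, T_tr, U_te, T_te, n_hidden=n_hidden)
        print(f"{n_hidden} hidden: final train acc {tr[-1]:.3f}, test acc {te[-1]:.3f}")
    for eta in (1.0, 1e-3, 1e-6):  # part (d)
        with np.errstate(all="ignore"):  # eta = 1 may diverge and overflow
            _, te = train_mlp(U_tr, T_tr, U_te, T_te, eta=eta)
        print(f"eta={eta:g}: final test acc {te[-1]:.3f}")
```

To produce the plots requested in (a) and (b), pass the two lists returned by `train_mlp` to `matplotlib.pyplot.plot` against `range(1, epochs + 1)`. Keeping each update in `backprop_step` makes it easy to check the equations in isolation. With a small `eta`, one step should lower the squared error on that pattern.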
