Python language

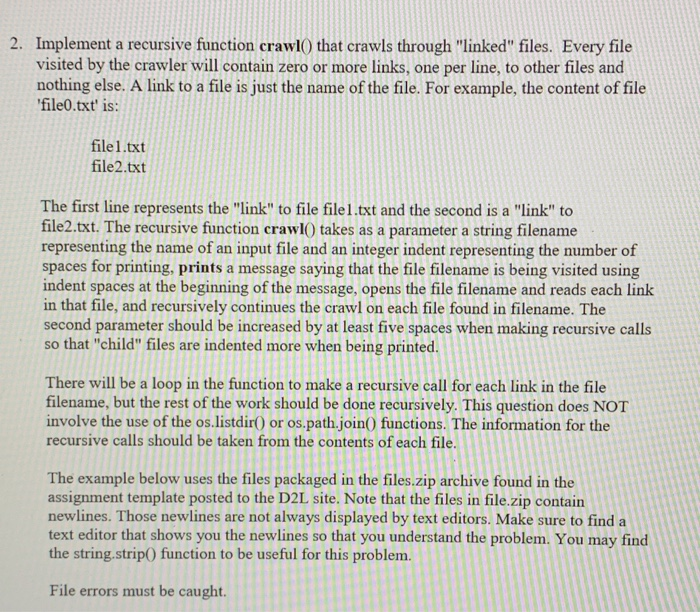

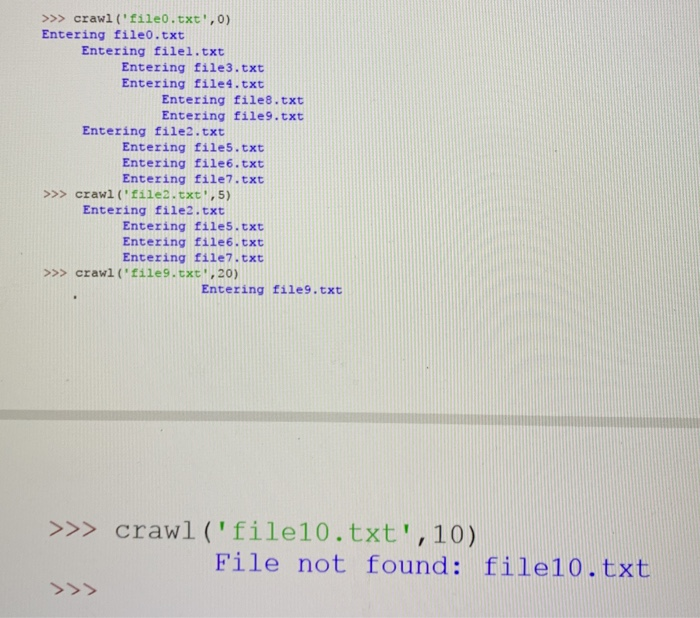

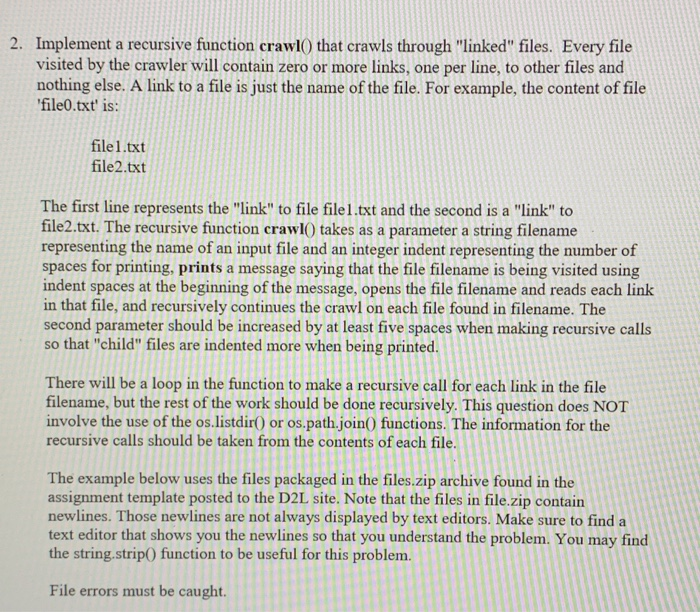

2. Implement a recursive function crawl() that crawls through "linked" files. Every file visited by the crawler will contain zero or more links, one per line, to other files and nothing else. A link to a file is just the name of the file. For example, the content of the file 'file0.txt' is:

    file1.txt
    file2.txt

The first line represents the "link" to the file file1.txt and the second is a "link" to file2.txt.

The recursive function crawl() takes as parameters a string filename representing the name of an input file and an integer indent representing the number of spaces for printing. It prints a message saying that the file filename is being visited, using indent spaces at the beginning of the message, opens the file filename and reads each link in that file, and recursively continues the crawl on each file found in filename. The second parameter should be increased by at least five spaces when making recursive calls so that "child" files are indented more when being printed. There will be a loop in the function to make a recursive call for each link in the file filename, but the rest of the work should be done recursively.

This question does NOT involve the use of the os.listdir() or os.path.join() functions. The information for the recursive calls should be taken from the contents of each file.

The example below uses the files packaged in the files.zip archive found in the assignment template posted to the D2L site. Note that the files in files.zip contain newlines. Those newlines are not always displayed by text editors. Make sure to find a text editor that shows you the newlines so that you understand the problem. You may find the string.strip() function to be useful for this problem. File errors must be caught.
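The remark about newlines and strip() can be illustrated with a small sketch. It uses an in-memory io.StringIO object as a stand-in for a real link file (an assumption made only so the snippet runs without the files from the archive); the names sample, raw_lines and links are hypothetical.

```python
import io

# Stand-in for the contents of a link file: one file name per line.
sample = io.StringIO("file1.txt\nfile2.txt\n")

# Lines read from a file keep their trailing newline character.
raw_lines = sample.readlines()

# strip() removes the surrounding whitespace, including the newline;
# the filter skips lines that are empty after stripping.
links = [line.strip() for line in raw_lines if line.strip()]

print(raw_lines)  # ['file1.txt\n', 'file2.txt\n']
print(links)      # ['file1.txt', 'file2.txt']
```

Passing an unstripped name such as 'file1.txt\n' to open() would look for a file whose name ends in a newline, which is why the stripping step matters here.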
    >>> crawl('file0.txt', 0)
    Entering file0.txt
    Entering file1.txt
    Entering file3.txt
    Entering file4.txt
    Entering file8.txt
    Entering file9.txt
    Entering file2.txt
    Entering file5.txt
    Entering file6.txt
    Entering file7.txt
    >>> crawl('file2.txt', 5)
    Entering file2.txt
    Entering file5.txt
    Entering file6.txt
    Entering file7.txt
    >>> crawl('file9.txt', 20)
    Entering file9.txt
    >>> crawl('file10.txt', 10)
    File not found: file10.txt
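One possible way to approach the problem is sketched below. It is a minimal sketch, not a reference solution, and it rests on a few assumptions that the question does not settle: the indent grows by exactly 5 per level (the question only requires at least five), the "File not found" message is printed with the same indent as an "Entering" message would be, blank lines in a file are skipped, and the links never form a cycle (a cycle would make the recursion run until Python's recursion limit is hit).

```python
def crawl(filename, indent):
    """Print a message for filename, then crawl every file it links to."""
    try:
        # Read every link up front; strip() drops the trailing newline.
        with open(filename) as infile:
            links = [line.strip() for line in infile if line.strip()]
    except FileNotFoundError:
        # Assumption: the error message is indented like a normal message.
        print(' ' * indent + 'File not found: ' + filename)
        return

    print(' ' * indent + 'Entering ' + filename)

    # One recursive call per link; children are indented five more spaces.
    for link in links:
        crawl(link, indent + 5)
```

With the files from the archive unpacked in the current working directory, the function would be called as in the transcript, for example crawl('file0.txt', 0). Opening the file before printing means a missing file produces only the error message, which matches the crawl('file10.txt', 10) call shown in the transcript. Catching OSError instead of FileNotFoundError would also cover other file errors such as permission problems, at the cost of a less accurate message.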