Answered step by step

Verified Expert Solution

Question

1 Approved Answer

python using pyspark question Alice is a data scientist at Networkly, a large social networking website where users can follow each other. Alice has a

python using pyspark question

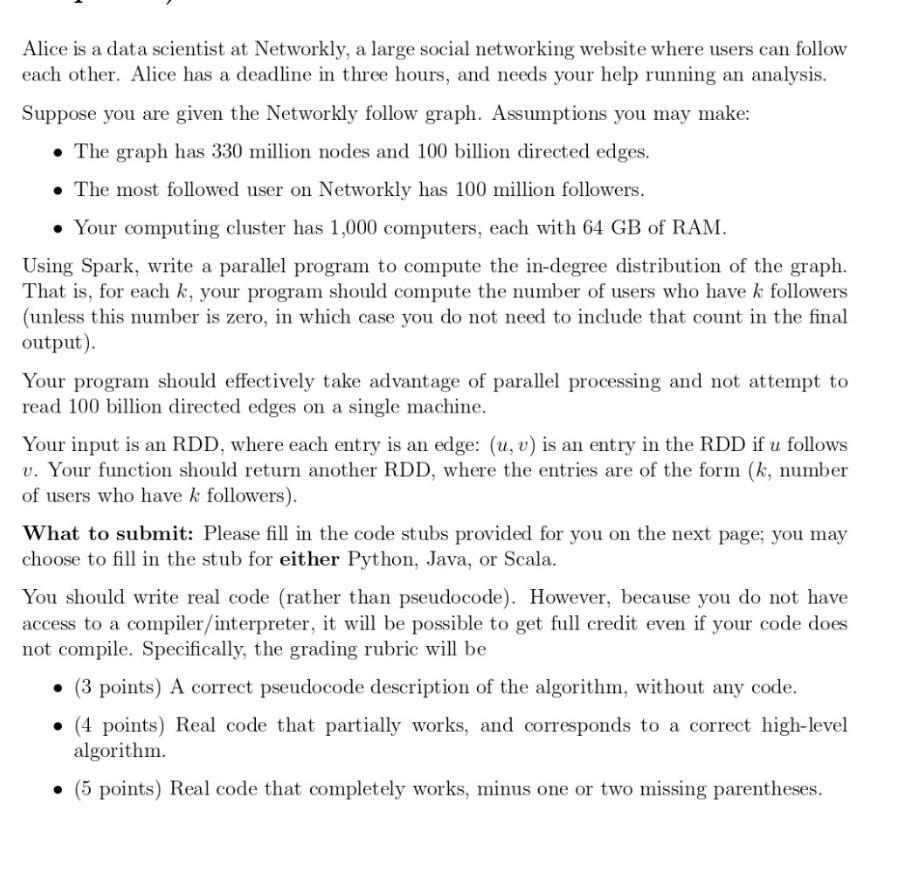

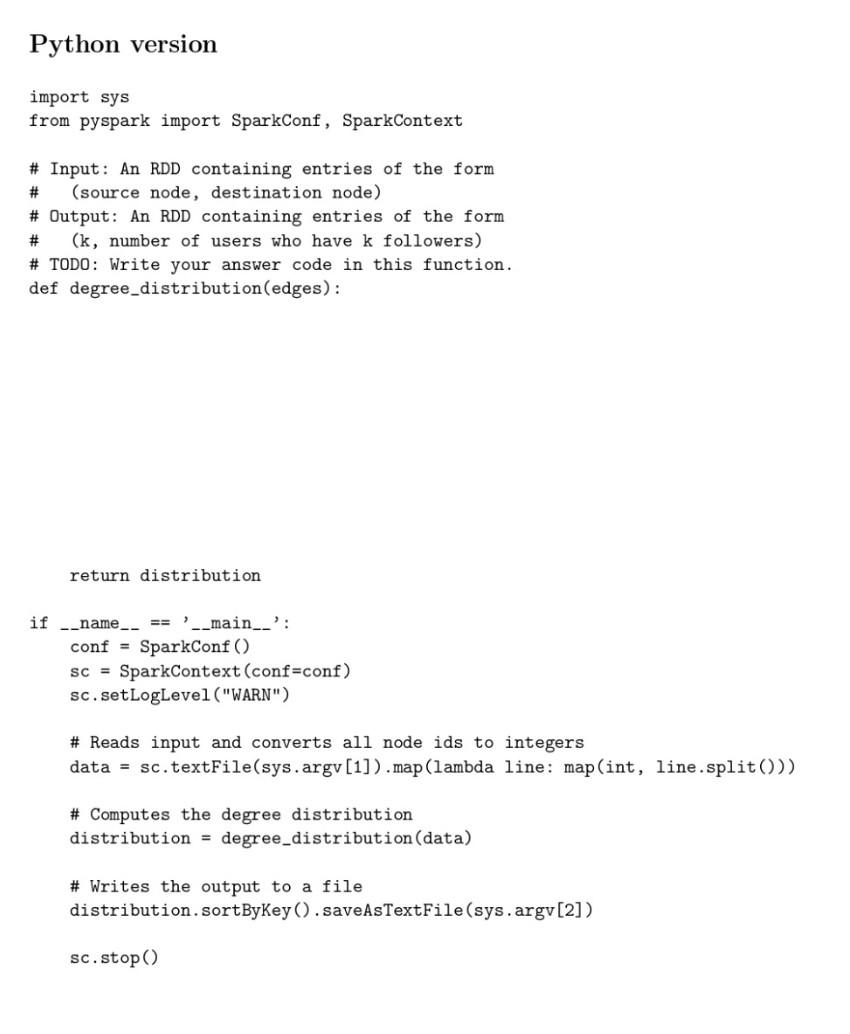

Alice is a data scientist at Networkly, a large social networking website where users can follow each other. Alice has a deadline in three hours, and needs your help running an analysis. Suppose you are given the Networkly follow graph. Assumptions you may make: The graph has 330 million nodes and 100 billion directed edges. . The most followed user on Networkly has 100 million followers. . Your computing cluster has 1,000 computers, each with 64 GB of RAM. Using Spark, write a parallel program to compute the in-degree distribution of the graph. That is, for each k, your program should compute the number of users who have k followers (unless this number is zero, in which case you do not need to include that count in the final output) Your program should effectively take advantage of parallel processing and not attempt to read 100 billion directed edges on a single machine. Your input is an RDD, where each entry is an edge: (u, v) is an entry in the RDD if u follows v. Your function should return another RDD, where the entries are of the form (k, number of users who have k followers). What to submit: Please fill in the code stubs provided for you on the next page; you may choose to fill in the stub for either Python, Java, or Scala. You should write real code (rather than pseudocode). However, because you do not have access to a compiler/interpreter, it will be possible to get full credit even if your code does not compile. Specifically, the grading rubric will be (3 points) A correct pseudocode description of the algorithm, without any code. (4 points) Real code that partially works, and corresponds to a correct high-level algorithm (5 points) Real code that completely works, minus one or two missing parentheses. Python version import sys from pyspark import SparkConf, SparkContext # # Input: An RDD containing entries of the form (source node, destination node) # Output: An RDD containing entries of the form # (k, number of users who have k followers) # TODO: Write your answer code in this function. def degree_distribution(edges): return distribution if __name__ == '__main__': conf = SparkConf () SC = SparkContext(conf=conf) sc.setLogLevel ("WARN") # Reads input and converts all node ids to integers data = sc.textFile(sys.argv[1]).map(lambda line: map(int, line.split())) # Computes the degree distribution distribution = degree_distribution (data) # Writes the output to a file distribution.sortByKey().saveAsTextFile(sys.argv[2]) Sc.stop() Alice is a data scientist at Networkly, a large social networking website where users can follow each other. Alice has a deadline in three hours, and needs your help running an analysis. Suppose you are given the Networkly follow graph. Assumptions you may make: The graph has 330 million nodes and 100 billion directed edges. . The most followed user on Networkly has 100 million followers. . Your computing cluster has 1,000 computers, each with 64 GB of RAM. Using Spark, write a parallel program to compute the in-degree distribution of the graph. That is, for each k, your program should compute the number of users who have k followers (unless this number is zero, in which case you do not need to include that count in the final output) Your program should effectively take advantage of parallel processing and not attempt to read 100 billion directed edges on a single machine. Your input is an RDD, where each entry is an edge: (u, v) is an entry in the RDD if u follows v. Your function should return another RDD, where the entries are of the form (k, number of users who have k followers). What to submit: Please fill in the code stubs provided for you on the next page; you may choose to fill in the stub for either Python, Java, or Scala. You should write real code (rather than pseudocode). However, because you do not have access to a compiler/interpreter, it will be possible to get full credit even if your code does not compile. Specifically, the grading rubric will be (3 points) A correct pseudocode description of the algorithm, without any code. (4 points) Real code that partially works, and corresponds to a correct high-level algorithm (5 points) Real code that completely works, minus one or two missing parentheses. Python version import sys from pyspark import SparkConf, SparkContext # # Input: An RDD containing entries of the form (source node, destination node) # Output: An RDD containing entries of the form # (k, number of users who have k followers) # TODO: Write your answer code in this function. def degree_distribution(edges): return distribution if __name__ == '__main__': conf = SparkConf () SC = SparkContext(conf=conf) sc.setLogLevel ("WARN") # Reads input and converts all node ids to integers data = sc.textFile(sys.argv[1]).map(lambda line: map(int, line.split())) # Computes the degree distribution distribution = degree_distribution (data) # Writes the output to a file distribution.sortByKey().saveAsTextFile(sys.argv[2]) Sc.stop()

Step by Step Solution

There are 3 Steps involved in it

Step: 1

Get Instant Access to Expert-Tailored Solutions

See step-by-step solutions with expert insights and AI powered tools for academic success

Step: 2

Step: 3

Ace Your Homework with AI

Get the answers you need in no time with our AI-driven, step-by-step assistance

Get Started