Question 6: What underlying issue might cause an LLM to produce hallucinated responses?

Question

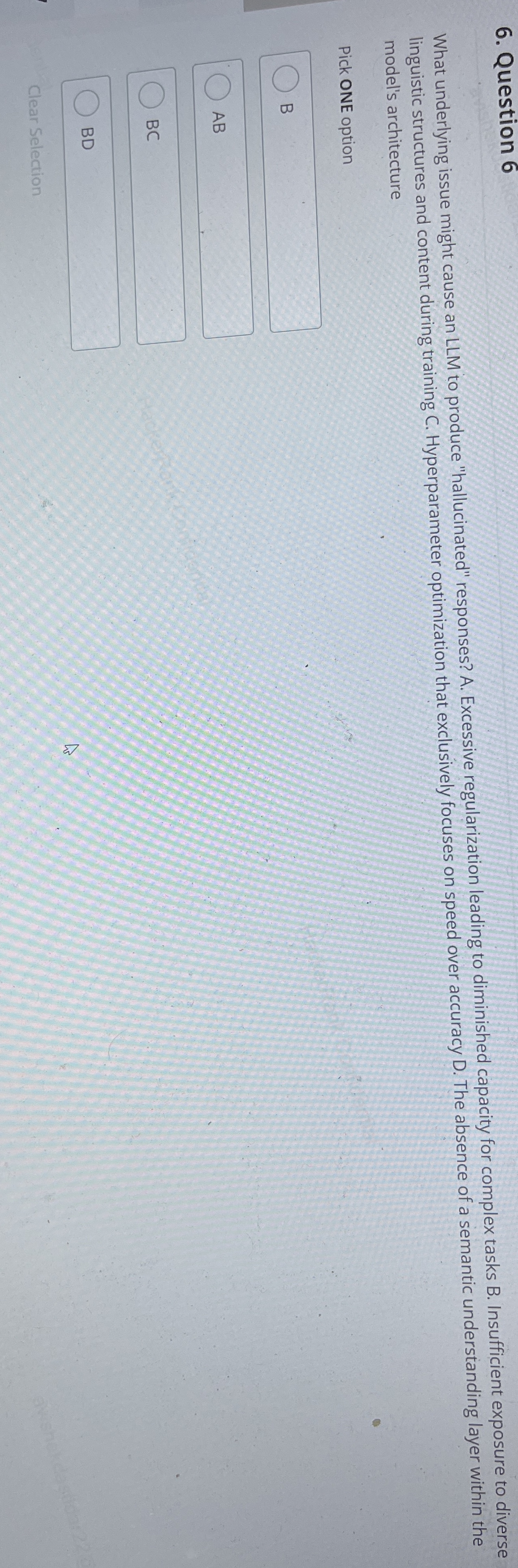

What underlying issue might cause an LLM to produce "hallucinated" responses?

A. Excessive regularization leading to diminished capacity for complex tasks

B. Insufficient exposure to diverse linguistic structures and content during training

C. Hyperparameter optimization that exclusively focuses on speed over accuracy

D. The absence of a semantic understanding layer within the model's architecture

Pick ONE option

Selected option: B
