Question

Source documentclass[11pt]{article} usepackage[margin=1in]{geometry} usepackage{natbib} usepackage{mathpazo} bibpunct{(}{)}{;}{a}{,}{,} usepackage{chngpage} usepackage{stmaryrd} usepackage{amssymb} usepackage{amsmath} usepackage{amsthm} usepackage{graphicx} usepackage{lscape} usepackage{subfigure} usepackage{bbm} usepackage{url} usepackage{fancyhdr} usepackage{amssymb} usepackage{hyperref} pagestyle{fancy} setlength{parindent}{0pt} setlength{parskip}{3pt} lhead{textbf{Assignment 1}} head{COMP5800.

Source

Source

\documentclass[11pt]{article} \usepackage[margin=1in]{geometry} \usepackage{natbib} \usepackage{mathpazo} \bibpunct{(}{)}{;}{a}{,}{,} \usepackage{chngpage} \usepackage{stmaryrd} \usepackage{amssymb} \usepackage{amsmath} \usepackage{amsthm} \usepackage{graphicx} \usepackage{lscape} \usepackage{subfigure} \usepackage{bbm} \usepackage{url} \usepackage{fancyhdr} \usepackage{amssymb} \usepackage{hyperref} \pagestyle{fancy}

\setlength{\parindent}{0pt} \setlength{\parskip}{3pt}

\lhead{\textbf{Assignment 1}} head{COMP5800. Social Computing, UMASS Lowell}

\begin{document}

\section*{Twitter Scraper}

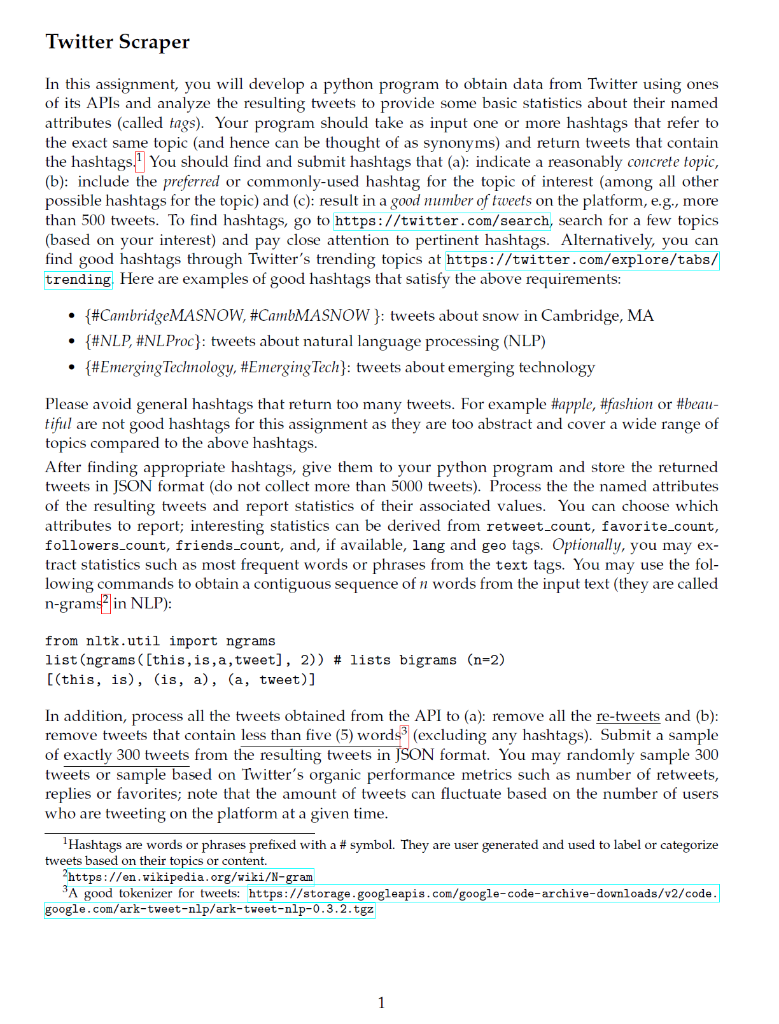

In this assignment, you will develop a python program to obtain data from Twitter using ones of its APIs and analyze the resulting tweets to provide some basic statistics about their named attributes (called {\em tags}). Your program should take as input one or more hashtags that refer to the exact same topic (and hence can be thought of as synonyms) and return tweets that contain the hashtags.\footnote{Hashtags are words or phrases prefixed with a \# symbol. They are user generated and used to label or categorize tweets based on their topics or content.} You should find and submit hashtags that (a): indicate a reasonably {\em concrete topic}, (b): include the {\em preferred} or commonly-used hashtag for the topic of interest (among all other possible hashtags for the topic) and (c): result in a {\em good number of tweets} on the platform, e.g., more than 500 tweets. To find hashtags, go to \url{https://twitter.com/search}, search for a few topics (based on your interest) and pay close attention to pertinent hashtags. Alternatively, you can find good hashtags through Twitter's trending topics at \url{https://twitter.com/explore/tabs/trending}. Here are examples of good hashtags that satisfy the above requirements: \begin{itemize} \setlength\itemsep{0pt} \item {\it \{\#CambridgeMASNOW, \#CambMASNOW} \}: tweets about snow in Cambridge, MA \item {\it \{\#NLP, \#NLProc}\}: tweets about natural language processing (NLP) \item {\it \{\#EmergingTechnology, \#EmergingTech}\}: tweets about emerging technology \end{itemize} Please avoid general hashtags that return too many tweets. For example {\it \#apple}, {\it \#fashion} or {\it \#beautiful} are not good hashtags for this assignment as they are too abstract and cover a wide range of topics compared to the above hashtags.

After finding appropriate hashtags, give them to your python program and store the returned tweets in JSON format (do not collect more than 5000 tweets). % Process the the named attributes of the resulting tweets and report statistics of their associated values. You can choose which attributes to report; % Note that attributes such as {\tt id}, {\tt created\_at}, {\tt user}, {\tt text} are the basic building blocks of a tweet and always exist. However, other tags may not always be present. For example, not all tweets contain URLs or image attachments. interesting statistics can be derived from {\tt retweet\_count}, {\tt favorite\_count}, {\tt followers\_count}, {\tt friends\_count}, and, if available, {\tt lang} and {\tt geo} tags. {\em Optionally}, you may extract statistics such as most frequent words or phrases from the {\tt text} tags. You may use the following commands to obtain a contiguous sequence of $n$ words from the input text (they are called n-grams\footnote{\url{https://en.wikipedia.org/wiki/N-gram}} in NLP): \begin{verbatim} from nltk.util import ngrams list(ngrams([this,is,a,tweet], 2)) # lists bigrams (n=2) [(this, is), (is, a), (a, tweet)] \end{verbatim}

In addition, process all the tweets obtained from the API to (a): remove all the \underline{re-tweets} and (b): remove tweets that contain \underline{less than five (5) words}\footnote{A good tokenizer for tweets: \url{https://storage.googleapis.com/google-code-archive-downloads/v2/code.google.com/ark-tweet-nlp/ark-tweet-nlp-0.3.2.tgz}} (excluding any hashtags). Submit a sample of \underline{exactly 300 tweets} from the resulting tweets in JSON format. You may randomly sample 300 tweets or sample based on Twitter's organic performance metrics such as number of retweets, replies or favorites; note that the amount of tweets can fluctuate based on the number of users who are tweeting on the platform at a given time.

\section*{Important Instructions} You must submit a single zip file named {\tt [STUDENTID].zip} that contains the following items in its root directory: \begin{enumerate} \setlength\itemsep{0pt} \item One script named {\tt scraper.py} to run your python code. \item One TXT file named {\tt [HASHTAG].txt} that contains the list of hashtags used to collect the data. Hashtags should be separated by new lines and the list should start with the preferred hashtag, which is the commonly-used form of these hashtags. \item One JSON file named {\tt [HASHTAG]\_full.json} that contains all tweets (a maximum of 5000 tweets) obtained from twitter. Replace [HASHTAG] with the preferred hashtag. \item One JSON file named {\tt [HASHTAG]\_sample.json} that contains 300 sampled tweets. Replace [HASHTAG] with the preferred hashtag. \item One PDF file named {\tt [HASHTAG].pdf} that reports tweet sampling strategy and data statistics. Replace [HASHTAG] with the preferred hashtag. \item One text file named {\tt README.txt} that briefly describes steps to run your program. \end{enumerate}

Your [HASHTAG\_sample].json file should contain exactly 300 tweets in the original format returned by the Twitter API. Do not add/omit any tag and do not change tag values. Your Zip file must be submitted to the link available on Blackboard, otherwise it will be ignored.\\

\end{document}

Please as soon as help me

Twitter Scraper In this assignment, you will develop a python program to obtain data from Twitter using ones of its APIs and analyze the resulting tweets to provide some basic statistics about their named attributes (called tags). Your program should take as input one or more hashtags that refer to the exact same topic (and hence can be thought of as synonyms) and return tweets that contain the hashtags! You should find and submit hashtags that (a): indicate a reasonably concrete topic, (b): include the preferred or commonly-used hashtag for the topic of interest (among all other possible hashtags for the topic) and (c): result in a good number of tweets on the platform, e.g., more than 500 tweets. To find hashtags, go to https://twitter.com/search, search for a few topics (based on your interest) and pay close attention to pertinent hashtags. Alternatively, you can find good hashtags through Twitter's trending topics at https://twitter.com/explore/tabs/ trending Here are examples of good hashtags that satisfy the above requirements: {#CambridgeMASNOW, #CambMASNOW }: tweets about snow in Cambridge, MA {#NLP, #NL Proc): tweets about natural language processing (NLP) {#Emerging Technology, #Emerging Tech}: tweets about emerging technology . . Please avoid general hashtags that return too many tweets. For example #apple, #fashion or #beau- tiful are not good hashtags for this assignment as they are too abstract and cover a wide range of topics compared to the above hashtags. After finding appropriate hashtags, give them to your python program and store the returned tweets in JSON format (do not collect more than 5000 tweets). Process the the named attributes of the resulting tweets and report statistics of their associated values. You can choose which attributes to report; interesting statistics can be derived from retweet_count, favorite_count, followers_count, friends_count, and, if available, lang and geo tags. Optionally, you may ex- tract statistics such as most frequent words or phrases from the text tags. You may use the fol- lowing commands to obtain a contiguous sequence of n words from the input text (they are called n-gram-2 in NLP): from nltk.util import ngrams list (ngrams ([this, is, a, tweet], 2)) # lists bigrams (n=2) [(this, is), (is, a), (a, tweet)] In addition, process all the tweets obtained from the API to (a): remove all the re-tweets and (b): remove tweets that contain less than five (5) words (excluding any hashtags). Submit a sample of exactly 300 tweets from the resulting tweets in JSON format. You may randomly sample 300 tweets or sample based on Twitter's organic performance metrics such as number of retweets, replies or favorites; note that the amount of tweets can fluctuate based on the number of users who are tweeting on the platform at a given time. Hashtags are words or phrases prefixed with a # symbol. They are user generated and used to label or categorize tweets based on their topics or content. https://en.wikipedia.org/wiki/N-gram 3A good tokenizer for tweets: https://storage.googleapis.com/google-code-archive-downloads/v2/code. google.com/ark-tweet-nlp/ark-tweet-nlp-0.3.2. tgz 1 Important Instructions You must submit a single zip file named [STUDENTID] .zip that contains the following items in its root directory: 1. One script named scraper.py to run your python code. 2. One TXT file named [HASHTAG].txt that contains the list of hashtags used to collect the data. Hashtags should be separated by new lines and the list should start with the preferred hashtag, which is the commonly-used form of these hashtags. 3. One JSON file named [HASHTAG]_full.json that contains all tweets (a maximum of 5000 tweets) obtained from twitter. Replace [HASHTAG] with the preferred hashtag. 4. One JSON file named [HASHTAG]_sample.json that contains 300 sampled tweets. Replace [HASHTAG] with the preferred hashtag. 5. One PDF file named [HASHTAG] .pdf that reports tweet sampling strategy and data statistics. Replace [HASHTAG] with the preferred hashtag. 6. One text file named README.txt that briefly describes steps to run your program. Your [HASHTAG_sample).json file should contain exactly 300 tweets in the original format re- turned by the Twitter API. Do not add/omit any tag and do not change tag values. Your Zip file must be submitted to the link available on Blackboard, otherwise it will be ignored. Good luck with the assignmentStep by Step Solution

There are 3 Steps involved in it

Step: 1

Get Instant Access to Expert-Tailored Solutions

See step-by-step solutions with expert insights and AI powered tools for academic success

Step: 2

Step: 3

Ace Your Homework with AI

Get the answers you need in no time with our AI-driven, step-by-step assistance

Get Started