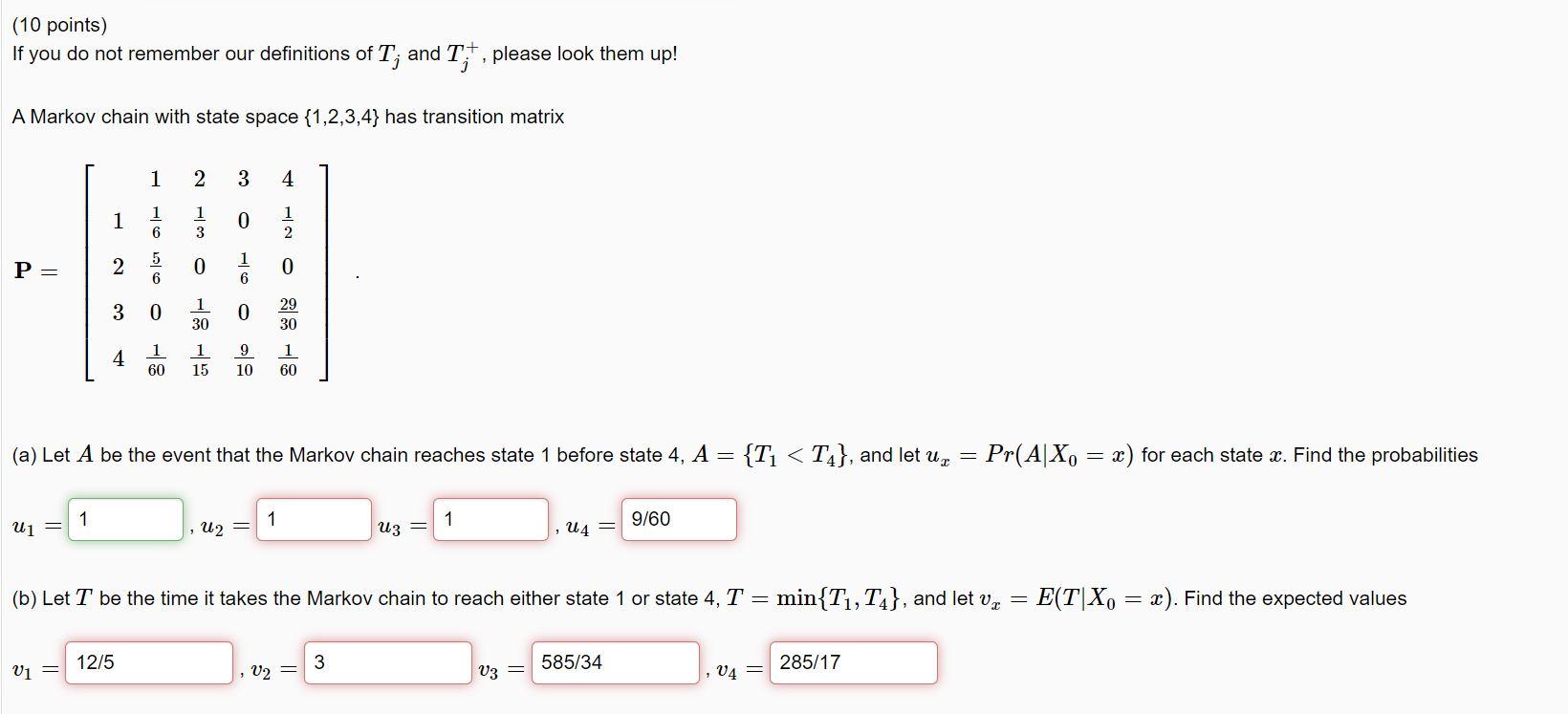


(10 points) If you do not remember our definitions of $T_x$ and $T_x^+$, please look them up! A Markov chain with state space $\{1,2,3,4\}$ has transition matrix $P$ (its entries are not recoverable from the source text).

(a) Let $A$ be the event that the Markov chain reaches state 1 before state 4, $A = \{T_1 < T_4\}$, and let $u_x = \Pr(A \mid X_0 = x)$ for each state $x$. Find the probabilities $u_x$.

(b) Let $T$ be the time it takes the Markov chain to reach either state 1 or state 4, $T = \min\{T_1, T_4\}$, and let $v_x = E(T \mid X_0 = x)$. Find the expected values $v_x$.
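Both parts can be set up by first-step analysis: condition on the first move and apply the Markov property. This is a sketch under two assumptions. The first is that $T_x = \min\{n \ge 0 : X_n = x\}$, so a chain started in state 1 or 4 has already hit it at time 0. The second is that the chain reaches $\{1,4\}$ with probability 1. $P(x,y)$ denotes the $(x,y)$ entry of $P$:

```latex
\begin{aligned}
&\text{(a)}\quad u_1 = 1,\qquad u_4 = 0,\qquad
  u_x = \sum_{y=1}^{4} P(x,y)\, u_y \quad (x = 2,3),\\
&\text{(b)}\quad v_1 = v_4 = 0,\qquad
  v_x = 1 + \sum_{y=1}^{4} P(x,y)\, v_y \quad (x = 2,3).
\end{aligned}
```

Each part therefore reduces to two linear equations in the two unknowns for states 2 and 3.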

Step by Step Solution



Step: 1

(a) $u_x$: the probability of the event $A$. (b) $v_x$: the expected value of $T$ given that the Markov ch...
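Whatever the entries of $P$ are, both parts come down to small linear systems. Let $Q$ be the block of $P$ on the non-target states $\{2,3\}$. First-step analysis then gives $(I - Q)u = r$ with $r_x = P(x,1)$, and $(I - Q)v = \mathbf{1}$. The Python sketch below solves both systems exactly with `fractions.Fraction`. The function names `solve_linear` and `absorption_and_times` are hypothetical. The example matrix is a symmetric walk with absorbing ends, chosen only for illustration; it is not the problem's matrix. The sketch assumes $T_x$ counts from time 0 and that the chain reaches state 1 or 4 with probability 1, so $I - Q$ is invertible.

```python
from fractions import Fraction


def solve_linear(a, b):
    """Solve a x = b exactly by Gauss-Jordan elimination (a must be invertible)."""
    n = len(a)
    # Augmented matrix [a | b], converted to Fractions for exact arithmetic.
    m = [[Fraction(v) for v in row] + [Fraction(b[i])] for i, row in enumerate(a)]
    for col in range(n):
        # Pick a row at or below `col` with a nonzero entry in this column.
        piv = next(r for r in range(col, n) if m[r][col] != 0)
        m[col], m[piv] = m[piv], m[col]
        # Scale the pivot row so the pivot becomes 1.
        p = m[col][col]
        m[col] = [v / p for v in m[col]]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != col and m[r][col] != 0:
                f = m[r][col]
                m[r] = [vr - f * vc for vr, vc in zip(m[r], m[col])]
    return [m[i][n] for i in range(n)]


def absorption_and_times(P, hit=1, avoid=4):
    """Return (u, v) as dicts keyed by state 1..len(P), where
    u[x] = Pr(T_hit < T_avoid | X_0 = x) and v[x] = E[min(T_hit, T_avoid) | X_0 = x].
    P[x-1][y-1] is the one-step probability of moving from state x to state y."""
    states = range(1, len(P) + 1)
    interior = [x for x in states if x not in (hit, avoid)]
    # Coefficient matrix I - Q, where Q is P restricted to the interior states.
    a = [[(1 if x == y else 0) - Fraction(P[x - 1][y - 1]) for y in interior]
         for x in interior]
    # Part (a): right-hand side is the probability of stepping straight to `hit`.
    ui = solve_linear(a, [P[x - 1][hit - 1] for x in interior])
    # Part (b): right-hand side is 1, counting the step just taken.
    vi = solve_linear(a, [1] * len(interior))
    # Boundary values: a chain started at a target state has T = 0.
    u = {hit: Fraction(1), avoid: Fraction(0)}
    v = {hit: Fraction(0), avoid: Fraction(0)}
    u.update(zip(interior, ui))
    v.update(zip(interior, vi))
    return u, v


# Hypothetical example: symmetric walk on 1-2-3-4 with states 1 and 4 absorbing.
half = Fraction(1, 2)
P_example = [
    [1, 0, 0, 0],
    [half, 0, half, 0],
    [0, half, 0, half],
    [0, 0, 0, 1],
]
u, v = absorption_and_times(P_example)
print(u[2], u[3], v[2], v[3])  # 2/3 1/3 2 2
```

To apply the sketch to the actual problem, replace `P_example` with the problem's matrix and enter its entries as `Fraction` values to keep the arithmetic exact. The script then returns $u_2, u_3, v_2, v_3$ directly.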


