Question

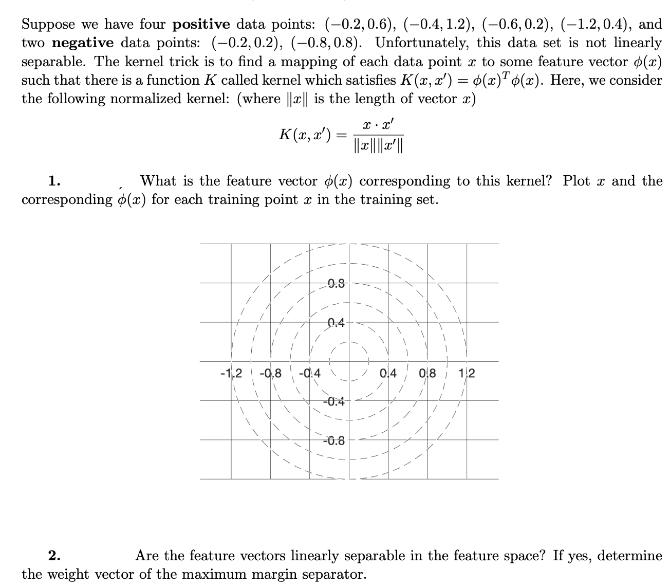

Suppose we have four positive data points: (-0.2, 0.6), (-0.4, 1.2), (-0.6, 0.2), (-1.2, 0.4), and two negative data points: (-0.2, 0.2), (-0.8, 0.8). Unfortunately, this data set is not linearly separable. The kernel trick is to find a mapping of each data point $x$ to some feature vector $\phi(x)$ such that there is a function $K$, called a kernel, which satisfies $K(x, x') = \phi(x)^T \phi(x')$. Here, we consider the following normalized kernel (where $\|z\|$ is the length of vector $z$):

$$K(x, x') = \frac{x^T x'}{\|x\| \, \|x'\|}$$

1. What is the feature vector $\phi(z)$ corresponding to this kernel? Plot each training point $x$ and the corresponding $\phi(x)$.
2. Are the feature vectors linearly separable in the feature space? If yes, determine the weight vector of the maximum margin separator.

Step by Step Solution

Step: 1

The kernel given in the question is the normalized kernel $K(x, x') = \frac{x \cdot x'}{\|x\| \, \|x'\|}$, which resembles the cosine similarity measure often used in vector spac...
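As a rough numerical check, the sketch below assumes the kernel is $K(x, x') = \frac{x \cdot x'}{\|x\| \, \|x'\|}$ and that a matching feature map is $\phi(x) = x / \|x\|$; the truncated solution text does not confirm either. It verifies $K(x, x') = \phi(x) \cdot \phi(x')$ on the training points, with coordinates taken exactly as printed in the question. The helper names `norm`, `dot`, `phi`, and `kernel` are illustrative, not from the source.

```python
import math

# Training points as transcribed in the question (signs taken as printed).
positives = [(-0.2, 0.6), (-0.4, 1.2), (-0.6, 0.2), (-1.2, 0.4)]
negatives = [(-0.2, 0.2), (-0.8, 0.8)]


def norm(x):
    """Euclidean length of a 2-D point."""
    return math.hypot(x[0], x[1])


def dot(a, b):
    """Dot product of two 2-D points."""
    return a[0] * b[0] + a[1] * b[1]


def phi(x):
    """Assumed feature map: rescale x to unit length."""
    n = norm(x)
    return (x[0] / n, x[1] / n)


def kernel(x, y):
    """Assumed normalized kernel: x . y / (||x|| ||y||)."""
    return dot(x, y) / (norm(x) * norm(y))


# Check K(x, x') == phi(x) . phi(x') for every pair of training points.
points = positives + negatives
for x in points:
    for y in points:
        assert math.isclose(kernel(x, y), dot(phi(x), phi(y)), abs_tol=1e-12)

# Print each training point next to its feature vector.
for label, group in (("+", positives), ("-", negatives)):
    for x in group:
        z = phi(x)
        print(label, x, "->", (round(z[0], 4), round(z[1], 4)))
```

Under this assumed map every feature vector has unit length, so training points that lie on the same ray from the origin, such as (-0.2, 0.6) and (-0.4, 1.2), share a single feature vector.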
