The article describes numerous instances of potential algo bias. Explain one that resonates with your personal experience and explain why.

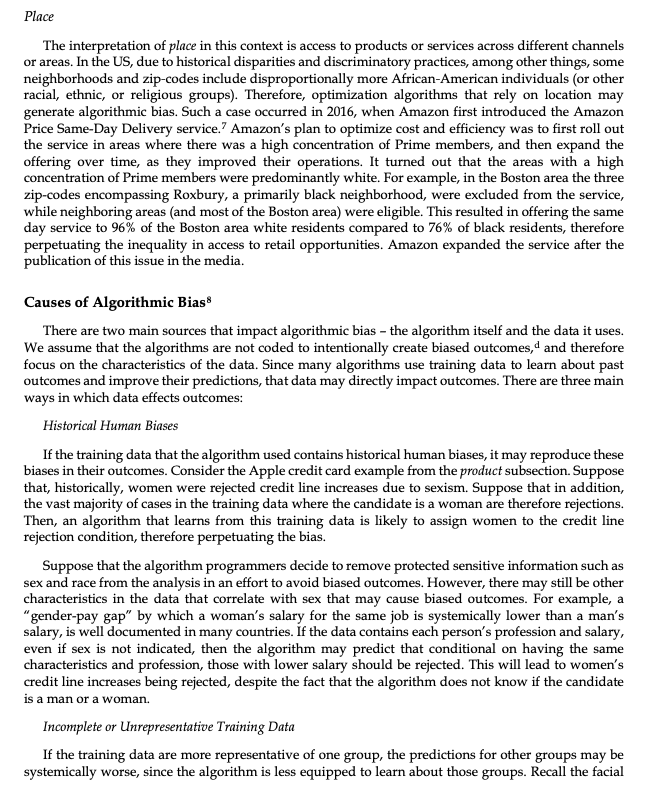

Algorithmic Bias in Marketing

As the world becomes more data driven, companies use algorithms to assist in decision making and to optimize a variety of outcomes. Prominent examples of how deeply algorithms affect lives exist in the areas of policing and sentencing, lending, job applications and stability, healthcare, education, political advertising, and election outcomes. Concerns arise when such algorithms produce outcomes that disadvantage (or privilege) a certain group of people based on characteristics such as gender, race, class, sexual orientation, religion, or age, thereby discriminating against that group. While some forms of discrimination are illegal, others are lawful, and laws are not necessarily consistent across countries or states (see Exhibit 1 for a brief overview of the legal environment in the U.S. and the EU). Broadly, algorithmic creation of such unfair outcomes is called "algorithmic bias."

Algorithmic bias may occur when an algorithm uses individual or group-level characteristics to determine outcomes. As we will show, even if the characteristics do not include the group identity, the algorithm may still produce biased outcomes, since other characteristics may be indicative of the group or highly correlated with the group's identity.

This note focuses on algorithmic bias in marketing. First, it presents a variety of marketing examples in which algorithmic bias may occur. The examples are organized around the 4 P's of marketing (promotion, price, place, and product), characterizing the marketing decision that generates the bias and highlighting the consequences of such a bias. Then, it explains the potential causes of algorithmic bias and offers some solutions to mitigate or reduce this bias.
Illustrative Examples of Algorithmic Bias in Marketing

Algorithmic bias in marketing occurs most commonly in instances in which customization is desired, such as in areas of personalization and targeting, but may also affect product design or access to products and services. We organize the discussion of the examples for these biases by the 4 P's, starting with promotion and pricing, where personalization and targeting algorithms are most common.

Promotion

In modern marketing, many campaigns target a particular group or individual and/or are highly personalized based on individual characteristics. Companies optimize their marketing spend, the ad creative that potential consumers are exposed to, and even whether they are exposed to any campaign at all. More often than not, these decisions are made by algorithms in order to achieve the best return on investment, measured by a variety of key performance indicators such as highest conversion rates or lowest customer acquisition costs.

Lambrecht and Tucker (2018) document that science, technology, engineering, and math (STEM) career ads on Facebook were displayed more frequently to young men than to young women, despite the advertisers' desire to be sex neutral in the delivery of the ad. They show that this was a result of Facebook's cost-minimizing algorithm, which chose to target more young men because young women's eyeballs are more expensive. It turns out that women are more likely to engage with advertisers and spend money on consumer goods; therefore more advertisers compete for female audiences than for male audiences, which further increases the price of advertising to women. Since each ad displayed to a consumer is the result of a competitive bidding process, and men's eyeballs are cheaper, the advertiser is more likely to win the auction for a man than for a woman, resulting in the observed algorithmic bias.
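The bidding mechanism described above can be illustrated with a small simulation. This is a minimal sketch, not Facebook's actual auction: it assumes the advertiser wins an impression whenever its bid exceeds the highest competing bid, and the bid ranges, the function name `simulate_delivery`, and the 50/50 audience split are all hypothetical values chosen for illustration.

```python
import random

# Hypothetical ranges for the highest competing bid per impression (in dollars).
# Women's impressions are assumed to attract higher competing bids, as in the
# mechanism described in the text; the numbers themselves are invented.
COMPETING_BID_RANGE = {"men": (0.50, 1.50), "women": (1.00, 2.00)}


def simulate_delivery(our_bid, n_opportunities=10_000, seed=0):
    """Count impressions won per group by an advertiser with a sex-neutral bid."""
    rng = random.Random(seed)
    won = {"men": 0, "women": 0}
    for _ in range(n_opportunities):
        # Each ad opportunity is equally likely to come from a man or a woman.
        group = "men" if rng.random() < 0.5 else "women"
        low, high = COMPETING_BID_RANGE[group]
        competing_bid = rng.uniform(low, high)
        # The advertiser bids the same amount regardless of the viewer's sex.
        if our_bid > competing_bid:
            won[group] += 1
    return won


if __name__ == "__main__":
    won = simulate_delivery(our_bid=1.25)
    total = sum(won.values())
    for group, count in won.items():
        print(f"{group}: {count} impressions ({count / total:.0%} of delivery)")
```

Under these assumed ranges the advertiser wins roughly three out of four auctions for men but only one out of four for women, so delivery skews toward men even though the bid never depends on sex.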
Interestingly, if an advertiser wants to show the same ad exclusively to one sex, or run the ads separately for each sex, Facebook will not allow them to do so, due to the federal regulation on employment and protected classes described in Exhibit 1.

Algorithmic bias can still occur even when the costs of displaying ads to different audiences are the same, because Facebook also optimizes which ad creative each consumer sees. Suppose that a particular ad creative is extremely appealing to some group but not to others. In that case, an algorithm that optimizes for the highest ad engagement or click-through rate will serve that ad disproportionately to users from that group.

Price

Firms often engage in price discrimination, which allows them to charge different prices to different consumers. Note that providing a discount or a coupon to a segment of consumers is also a way to price discriminate (for example, senior or student discounts and ladies' night specials are forms of price discrimination). Price discrimination is typically legal in the US (see Exhibit 1 for discussion).

Well-known algorithmic examples are in the fields of insurance and lending. For example, minorities and women are known to receive worse lending and credit terms than white men. Car insurance prices often vary based on sex and age. Some of these examples are often explained by perceived behavioral differences; for example, young women drivers are considered less risky than young men drivers, leading to lower insurance quotes. Those with higher income are considered more likely to be able to repay a loan, but higher income may be correlated with characteristics like sex, race, or ethnicity. Some of the examples descend from historical disparities, for example when lending and credit values are computed based on zip codes.
These examples extend across many services and products that are priced differentially based on consumers' characteristics. In a less controversial example, Orbitz, a travel reservation website, discovered that visitors to the website who use Mac computers spend more per hotel night on average than PC users. Using this information, they predicted that future visitors who are Mac users are likely to spend more. Accordingly, Orbitz's algorithms changed their offerings to Mac users and showed them costlier travel options to begin with. In this case, the grouping is based on computer type and not on a protected class of individuals. However, if operating system usage were highly correlated with a protected characteristic, the bias against Mac users would also become a bias against that protected group.

Finally, firms are increasingly adopting "dynamic pricing" mechanisms whereby prices change continuously as they are adjusted based on current supply and demand. This is a very common practice among ride-hailing companies such as Uber or Lyft, where the fare is calculated based on real-time demand and supply, among other factors. Pandey and Caliskan (2020) analyzed over 100 million trips made around the area of Chicago (IL) from November 2018 to December 2019 and combined that information with US census data. The researchers found that trips with origin or destination in areas with large African-American populations (among other factors) were consistently more expensive than other trips.

Product

New products often utilize artificial intelligence and machine learning algorithms in their development. Such products are therefore more susceptible to algorithmic bias. For example, several new products rely on new technology that provides facial recognition. These products range from law enforcement tracking and security locking technology to less serious purposes such as photo tagging and a variety of mobile applications.
Reports indicate large group-based differences in error rates: the software falsely identifies African-American and Asian faces more often than Caucasian faces, women more often than men, and older adults more often than middle-aged adults. The presumed reason for this bias, which disadvantages groups other than middle-aged Caucasian men, is data. In particular, the concern is that the database of photographs used to train the facial recognition software primarily contained photos of white men, which explains why the algorithm is better at identifying and matching their features compared to other groups.

Product access may also be constrained due to algorithmic bias. For example, numerous reports suggest that African-American individuals and Black-owned firms are more likely to be rejected for loans. In 2019, a new Apple credit card was deemed "sexist" after it rejected a credit line increase for a wife while approving it for her husband, despite her better credit score and other factors in her favor. Some of these algorithmic biases stem from historic discrimination that is perpetuated in data, as we will elaborate in the section on causes below.

Another way in which algorithms affect product access is through the use of recommendation systems or curation algorithms. The role of both of these types of systems is to display a personalized selection of content to users. Typically, these algorithms' recommendations maximize engagement or selection decisions and are based on users' past behavior and preferences. If a user previously had access only to a limited set of content, the algorithm may maintain that limitation. Another way these algorithms infer a user's preferences is based on consumers with similar characteristics, which again may limit the exposure of that user to different content.
For example, it was well documented in the 2016 US presidential elections that curation algorithms on Facebook generated "bubbles" in which each user saw content that was most related to their own preferences and was therefore not exposed to the perspectives of users with different preferences. This practice may generate algorithmic bias if recommendation systems systematically limit the access of a group of individuals to certain content.

Place

The interpretation of place in this context is access to products or services across different channels or areas. In the US, due to historical disparities and discriminatory practices, among other things, some neighborhoods and zip codes include disproportionately more African-American individuals (or other racial, ethnic, or religious groups). Therefore, optimization algorithms that rely on location may generate algorithmic bias.

Such a case occurred in 2016, when Amazon first introduced the Amazon Prime Same-Day Delivery service. Amazon's plan to optimize cost and efficiency was to first roll out the service in areas where there was a high concentration of Prime members, and then expand the offering over time as it improved its operations. It turned out that the areas with a high concentration of Prime members were predominantly white. For example, in the Boston area the three zip codes encompassing Roxbury, a primarily black neighborhood, were excluded from the service, while neighboring areas (and most of the Boston area) were eligible. This resulted in offering the same-day service to 96% of the Boston area's white residents compared to 76% of black residents, thereby perpetuating the inequality in access to retail opportunities. Amazon expanded the service after the issue was publicized in the media.

Causes of Algorithmic Bias

There are two main sources of algorithmic bias: the algorithm itself and the data it uses.
We assume that the algorithms are not coded to intentionally create biased outcomes, and therefore focus on the characteristics of the data. Since many algorithms use training data to learn about past outcomes and improve their predictions, that data may directly impact outcomes. There are three main ways in which data affects outcomes:

Historical Human Biases

If the training data that the algorithm uses contains historical human biases, the algorithm may reproduce these biases in its outcomes. Consider the Apple credit card example from the product subsection. Suppose that, historically, women were denied credit line increases due to sexism. Suppose that, in addition, the vast majority of cases in the training data where the candidate is a woman are therefore rejections. Then, an algorithm that learns from this training data is likely to assign women to the credit line rejection condition, thereby perpetuating the bias.

Suppose that the algorithm programmers decide to remove protected sensitive information such as sex and race from the analysis in an effort to avoid biased outcomes. There may still be other characteristics in the data that correlate with sex and that may cause biased outcomes. For example, a gender pay gap, by which a woman's salary for the same job is systematically lower than a man's salary, is well documented in many countries. If the data contains each person's profession and salary, even if sex is not indicated, then the algorithm may predict that, conditional on having the same characteristics and profession, those with a lower salary should be rejected. This will lead to women's credit line increases being rejected, despite the fact that the algorithm does not know whether the candidate is a man or a woman.

Incomplete or Unrepresentative Training Data

If the training data are more representative of one group, the predictions for other groups may be systematically worse, since the algorithm is less equipped to learn about those groups.
Recall the facial recognition example from the product subsection, where middle-aged Caucasian men were overrepresented in the data. This can happen even if the data is representative of the population but one group is small. Note that historical human biases could exacerbate incomplete or unrepresentative data issues. For example, if, as in the lending example from the product subsection, African-Americans are less likely to receive loans, then there will also be insufficient information on their loan payment or default behavior upon receiving those loans.

Characteristics that Interact with the Algorithm Code

Customer groups may have different characteristics that may impact algorithmic outcomes. Typically, algorithms optimize according to certain rules. Bias may occur if certain group characteristics are more closely related to these rules. Recall the STEM ad example from the promotion subsection above. The algorithm's optimization relied on cost minimization (minimizing the advertising expenditure) and conversion maximization (maximizing the click-through rate). Because men and women in that context differed in their cost to serve (women's views were more expensive), the algorithm disadvantaged women to minimize cost. Similarly, if a certain group were more likely to convert from an ad to a sale, the algorithm might disproportionately serve the ad to that group.

The 2x2 diagram below summarizes the different ways algorithmic bias may occur, depending on the characteristics of the data. Data that are complete and representative, and that do not include historical biases or pre-existing patterns and correlations between group preferences and the outcome, are least likely to produce biased outcomes (top-left quadrant).
However, as soon as the data are unrepresentative for some segment of the population (top-right quadrant), which is likely to occur for minority groups, or hidden correlations exist between group characteristics and outcomes (bottom-left), which is the case in many marketing examples such as the ones outlined above, algorithmic bias would emerge. As a result, if one wants to prevent uneven outcomes across groups, algorithms should be audited and tested to detect bias.

| Data characteristics | Incomplete or unrepresentative? NO | Incomplete or unrepresentative? YES |
|---|---|---|
| Contain pre-existing biased pattern or correlation between group preferences and outcome? NO | No expected bias due to data | Algorithm may disadvantage groups with insufficient data |
| Contain pre-existing biased pattern or correlation between group preferences and outcome? YES | Algorithm may perpetuate bias or introduce bias due to pre-existing correlations | Algorithm may perpetuate bias or produce worse outcomes for groups |

Source: Casewriters.

Addressing Algorithmic Bias

The role of algorithms is to optimize a certain outcome under a set of rules or constraints, or to generate a more efficient allocation of resources. However, fairness is often not one of the constraints used in these algorithms. Therefore, a desire to reduce bias generates a trade-off between the algorithm's efficiency and fairness. While there are no foolproof solutions, below is a set of common approaches to address and mitigate bias.

Cultural and Strategic Change

While some of the algorithmic bias examples above generate outcomes that are illegal, others generate differential outcomes across groups that may lead to public outcry but are considered part of doing business. Companies need to take a clear stance on these issues, evaluate how they would like to address potential biases, and incorporate that into their corporate values and activities.

Audits and Bias Detection

In many of the examples described above, the company was not aware of the algorithmic bias before releasing its algorithms.
Additionally, given the potential correlations between group characteristics and the algorithm outcomes, it may be especially difficult to realize that a bias exists. As we have demonstrated, removing sensitive information about the group does not necessarily resolve bias. Therefore, a current recommendation for firms is to perform algorithmic audits to detect bias. In particular, the idea is to use representative data and ensure that the algorithm does not produce systematically unfair outcomes for certain groups. Ideally, if we compare identical people who differ only on a protected characteristic, they should receive the exact same outcome recommendation from the algorithm.

Diverse Teams

Because algorithms often replicate existing biases, or generate new biases that algorithm developers are not always aware of, one suggestion is to ensure that the team of developers is diverse. The idea is that more diverse teams with different backgrounds, experiences, and perspectives would be better at anticipating potential biases as well as detecting them. Further, they may be more invested in ensuring fairness, performing audits, and reducing algorithmic bias. In the facial recognition example above, a more diverse set of programmers might have realized that there were not enough diverse images in the training data. In the credit card example, they might have noticed that women received worse outcomes.

Including Fairness in Optimization

A recent approach is to treat the issue head-on and add fairness constraints into the algorithm code itself, so that it optimizes for the desired outcome as well as for fairness. For example, a research team at Amazon recently published a method that enforces fairness constraints while optimizing a machine learning algorithm. As more and more decisions are delegated to algorithms, we anticipate that fairness concerns will be incorporated into algorithms.
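The audit idea described under Audits and Bias Detection can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a production audit: the salaries, the pay gap, and the approval rule (approve if salary is at least the profession's median) are all hypothetical, and the names `SALARIES`, `build_applicants`, `approve`, and `audit` are invented here. The decision rule never sees sex; sex is used only afterwards to compare approval rates across groups.

```python
from statistics import median

# Hypothetical salaries (in $1,000s). Within each profession the women's
# salaries are shifted down to mimic a pay gap; all numbers are invented.
SALARIES = {
    "engineer": {"M": [90, 100, 110, 120], "F": [80, 90, 100, 110]},
    "teacher": {"M": [45, 50, 55, 60], "F": [40, 45, 50, 55]},
}


def build_applicants():
    """Flatten the hypothetical salary table into one record per applicant."""
    return [
        {"profession": profession, "sex": sex, "salary": salary}
        for profession, by_sex in SALARIES.items()
        for sex, salaries in by_sex.items()
        for salary in salaries
    ]


def approve(applicant, median_by_profession):
    """Sex-blind rule: approve if salary is at least the profession median."""
    return applicant["salary"] >= median_by_profession[applicant["profession"]]


def audit(applicants):
    """Return the approval rate per sex; sex is used only for this audit."""
    professions = {a["profession"] for a in applicants}
    median_by_profession = {
        p: median(a["salary"] for a in applicants if a["profession"] == p)
        for p in professions
    }
    rates = {}
    for sex in ("M", "F"):
        group = [a for a in applicants if a["sex"] == sex]
        approved = sum(approve(a, median_by_profession) for a in group)
        rates[sex] = approved / len(group)
    return rates


if __name__ == "__main__":
    rates = audit(build_applicants())
    print(rates)  # {'M': 0.75, 'F': 0.5}
```

Even though sex is never an input to `approve`, the audit shows a lower approval rate for women, because salary acts as a proxy for sex in this invented data. Note that the audit itself is only possible because the group label was retained alongside the records.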
Collecting Sensitive Protected Data

Somewhat counterintuitively, most of the solutions described above rely on the firm's ability to classify individuals into their protected groups. As demonstrated above, removing the group identity from data may make it more difficult to detect the bias, therefore potentially exacerbating discrimination. This is at odds with solutions proposed to reduce human biases, which often suggest removing the group identity, or certain characteristics that can reveal the group's identity, from the data (such as an individual's name, photo, or education information).

Exhibit 1 Discrimination and algorithmic bias protection in the US and the EU

In the U.S., a combination of federal anti-discrimination laws protects those who belong to certain "protected classes" from specific forms of discrimination. The protected classes are: sex, race or color, religion or creed, national origin, sexual orientation and gender identity, disability status, familial status, age, veteran status, and genetic information. These laws prohibit discrimination in housing, credit, employment, public accommodations (such as hotels, restaurants, theaters, and parks), and voting rights. These laws also provide for equitable access to these opportunities, suggesting that advertisers should ensure that they do not exclude protected classes in advertising. Price discrimination, i.e., the practice of charging a different price to different consumers for the same good, is typically lawful unless there is a proven intent to harm competitors. Some states instituted additional protections for certain classes or consistently ruled against discrimination. For example, California protects against discrimination for services and products for all protected classes (including, for example, price discrimination in products and services such as haircuts, dry cleaning, nightclubs, and insurance). Currently, there is no general legislation to address algorithmic bias in the US.
In the EU, "any discrimination based on sex, race, color, ethnic or social origin, genetic features, language, religion or belief, political or any other opinion, membership of a national minority, property, birth, disability, age or sexual orientation" is prohibited. In addition, the EU's data protection policy, known as the General Data Protection Regulation (GDPR), suggests safeguards to address automated decision making (such as human intervention and transparency).