Question

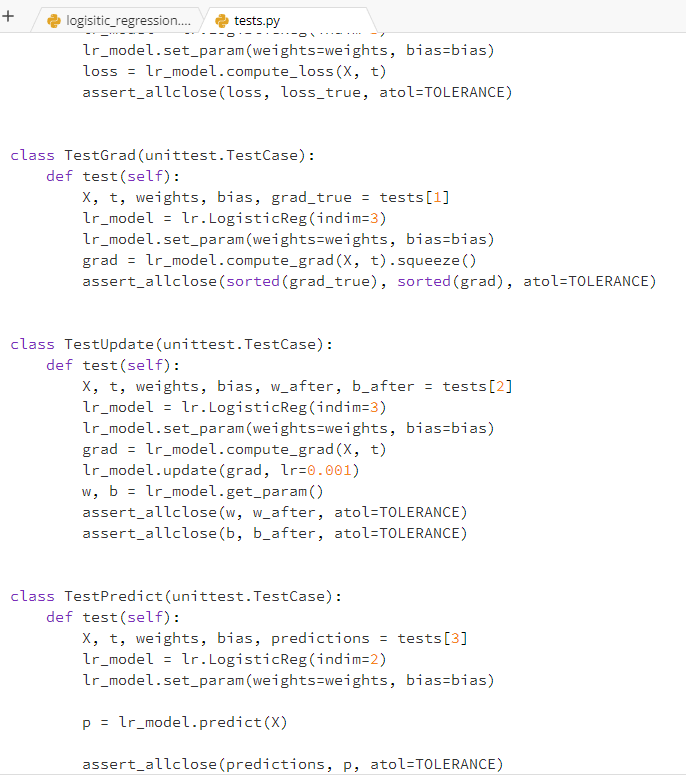

The code is as follows. Please complete the compute_grad, update, predict_probe, and predict functions to pass the test: def compute_grad(self, X, t): # X: feature

The code is as follows. Please complete the "compute_grad", "update", "predict_probe", and "predict" functions to pass the test: def compute_grad(self, X, t): # X: feature matrix of shape [N, d] # grad: shape of [d, 1] # NOTE: return the average gradient, NOT the sum. pass

def update(self, grad, lr=0.001): # update the weights # by the gradient descent rule pass

def fit(self, X, t, lr=0.001, max_iters=1000, eps=1e-7): # implement the .fit() using the gradient descent method. # args: # X: input feature matrix of shape [N, d] # t: input label of shape [N, ] # lr: learning rate # max_iters: maximum number of iterations # eps: tolerance of the loss difference # TO NOTE: # extend the input features before fitting to it. # return the weight matrix of shape [indim+1, 1] N, d = X.shape X = np.c_[X, np.ones((N, 1))] t[t == 0] = -1

loss = 1e10 for epoch in range(max_iters): # compute the loss new_loss = self.compute_loss(X, t)

# compute the gradient grad = self.compute_grad(X, t)

# update the weight self.update(grad, lr=lr)

# decide whether to break the loop if np.abs(new_loss - loss)

def predict_prob(self, X): # implement the .predict_prob() using the parameters learned by .fit() # X: input feature matrix of shape [N, d] # NOTE: make sure you extend the feature matrix first, # the same way as what you did in .fit() method. # returns the prediction (likelihood) of shape [N, ] pass

def predict(self, X, threshold=0.5): # implement the .predict() using the .predict_prob() method # X: input feature matrix of shape [N, d] # returns the prediction of shape [N, ], where each element is -1 or 1. # if the probability p>threshold, we determine t=1, otherwise t=-1 pass

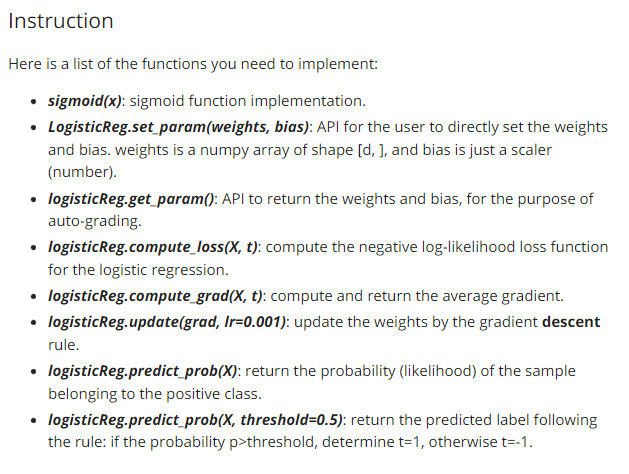

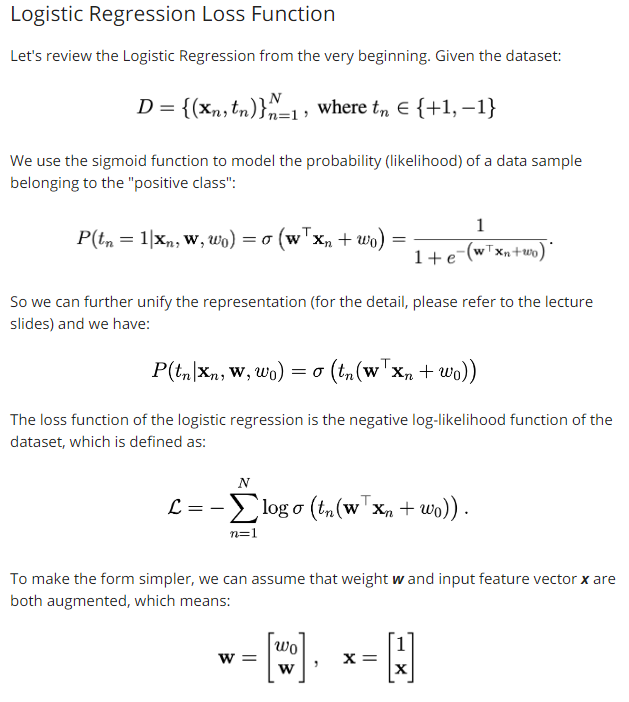

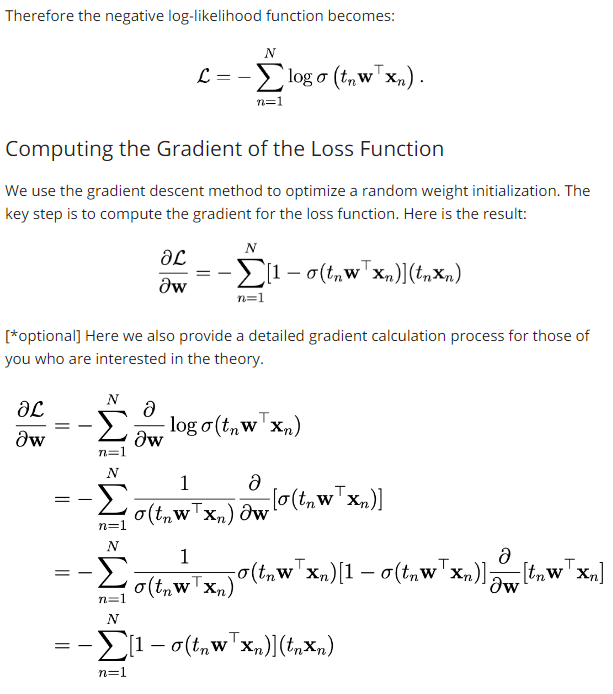

Here is a list of the functions you need to implement: - sigmoid(x) : sigmoid function implementation. - LogisticReg.set_param(weights, bias): API for the user to directly set the weights and bias. weights is a numpy array of shape [d, ], and bias is just a scaler (number). - logisticReg.get_param(): API to return the weights and bias, for the purpose of auto-grading. - logisticReg.compute_loss (X,t) : compute the negative log-likelihood loss function for the logistic regression. - logisticReg.compute_grad (X,t) : compute and return the average gradient. - IogisticReg.update(grad, Ir=0.001): update the weights by the gradient descent rule. - IogisticReg.predict_prob(X): return the probability (likelihood) of the sample belonging to the positive class. - logisticReg.predict_prob( X, threshold=0.5): return the predicted label following the rule: if the probability p> threshold, determine t=1, otherwise t=1. Let's review the Logistic Regression from the very beginning. Given the dataset: D={(xn,tn)}n=1N,wheretn{+1,1} We use the sigmoid function to model the probability (likelihood) of a data sample belonging to the "positive class": P(tn=1xn,w,w0)=(wxn+w0)=1+e(wxn+w0)1 So we can further unify the representation (for the detail, please refer to the lecture slides) and we have: P(tnxn,w,w0)=(tn(wxn+w0)) The loss function of the logistic regression is the negative log-likelihood function of the dataset, which is defined as: L=n=1Nlog(tn(wxn+w0)) To make the form simpler, we can assume that weight w and input feature vector x are both augmented, which means: w=[w0w],x=[1x] Therefore the negative log-likelihood function becomes: L=n=1Nlog(tnwxn) Computing the Gradient of the Loss Function We use the gradient descent method to optimize a random weight initialization. The key step is to compute the gradient for the loss function. Here is the result: wL=n=1N[1(tnwxn)](tnxn) [*optional] Here we also provide a detailed gradient calculation process for those of you who are interested in the theory. wL=n=1Nwlog(tnwxn)=n=1N(tnwxn)1w[(tnwxn)]=n=1N(tnwxn)1(tnwxn)[1(tnwxn)]w[tnwxn]=n=1N[1(tnwxn)](tnxn)Step by Step Solution

There are 3 Steps involved in it

Step: 1

Get Instant Access to Expert-Tailored Solutions

See step-by-step solutions with expert insights and AI powered tools for academic success

Step: 2

Step: 3

Ace Your Homework with AI

Get the answers you need in no time with our AI-driven, step-by-step assistance

Get Started