
Question


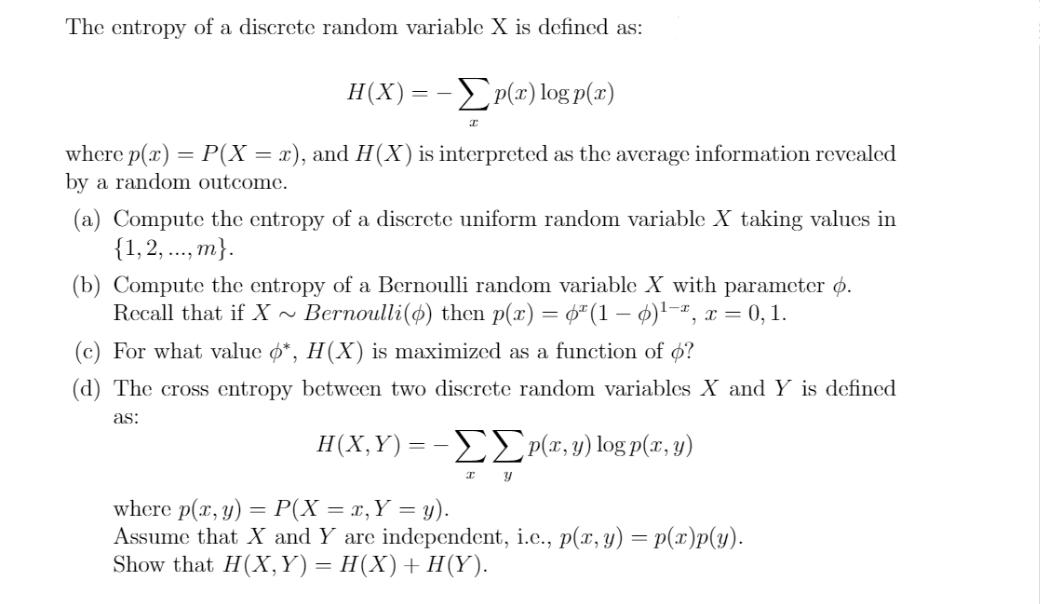

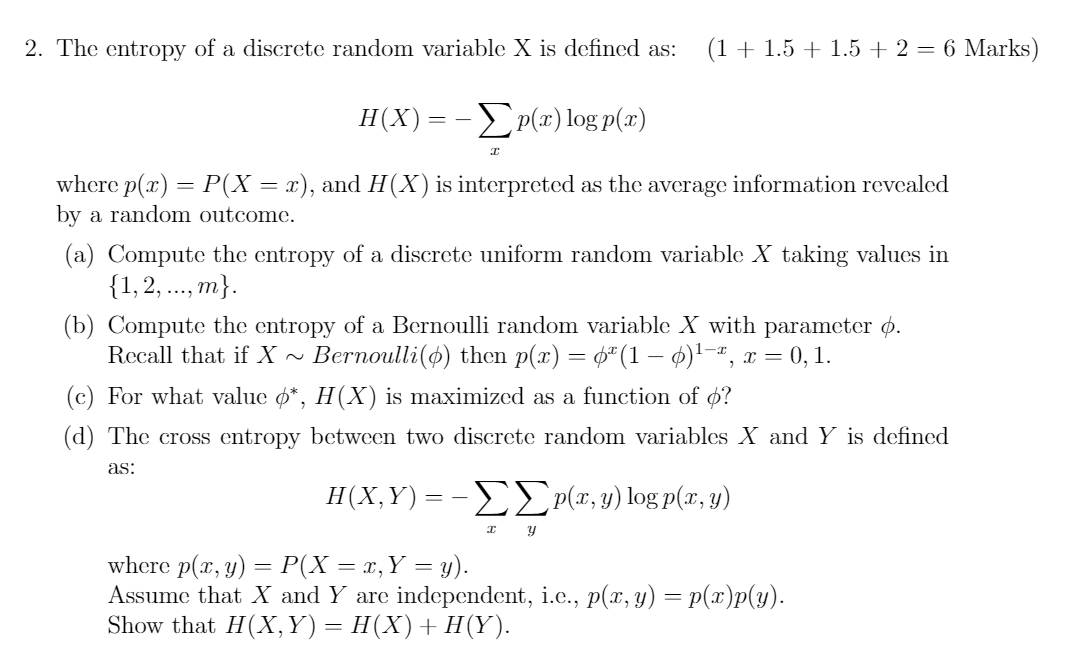

2. The entropy of a discrete random variable $X$ is defined as: (1 + 1.5 + 1.5 + 2 = 6 Marks)

$$H(X) = -\sum_{x} p(x) \log p(x),$$

where $p(x) = P(X = x)$, and $H(X)$ is interpreted as the average information revealed by a random outcome.

(a) Compute the entropy of a discrete uniform random variable $X$ taking values in $\{1, 2, \ldots, m\}$.

(b) Compute the entropy of a Bernoulli random variable $X$ with parameter $\theta$. Recall that if $X \sim \text{Bernoulli}(\theta)$, then $p(x) = \theta^{x}(1-\theta)^{1-x}$, $x = 0, 1$.

(c) For what value $\theta^{*}$ is $H(X)$ maximized as a function of $\theta$?

(d) The joint entropy of two discrete random variables $X$ and $Y$ is defined as:

$$H(X, Y) = -\sum_{x}\sum_{y} p(x, y) \log p(x, y),$$

where $p(x, y) = P(X = x, Y = y)$. Assume that $X$ and $Y$ are independent, i.e., $p(x, y) = p(x)p(y)$. Show that $H(X, Y) = H(X) + H(Y)$.
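A worked sketch of parts (a) to (d) follows. It assumes a fixed logarithm base $b$ and the usual convention $0 \log 0 = 0$, since the question states neither. The derivative in (c) is taken on the open interval $0 < \theta < 1$.

```latex
% (a) Uniform on {1, ..., m}: p(x) = 1/m for every x
H(X) = -\sum_{x=1}^{m} \frac{1}{m}\log\frac{1}{m} = \log m

% (b) Bernoulli(\theta): p(1) = \theta, p(0) = 1 - \theta
H(X) = -\theta\log\theta - (1-\theta)\log(1-\theta)

% (c) Stationary point (the 1/\ln b terms from each product rule cancel):
\frac{dH}{d\theta} = \log\frac{1-\theta}{\theta} = 0
  \;\Rightarrow\; \theta^{*} = \tfrac{1}{2},
\qquad
\frac{d^{2}H}{d\theta^{2}} = -\frac{1}{\ln b \,\theta(1-\theta)} < 0,
\qquad
H_{\max} = \log 2 \;(\text{1 bit when } b = 2)

% (d) Independence gives \log p(x,y) = \log p(x) + \log p(y)
H(X,Y) = -\sum_{x}\sum_{y} p(x)p(y)\bigl[\log p(x) + \log p(y)\bigr]
       = -\sum_{x} p(x)\log p(x)\underbrace{\sum_{y} p(y)}_{=1}
         -\sum_{y} p(y)\log p(y)\underbrace{\sum_{x} p(x)}_{=1}
       = H(X) + H(Y)
```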

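The entropy formula can also be checked numerically. The Python sketch below evaluates the definition directly and tests each part. It assumes base-2 logarithms, because the question leaves the base unspecified, and it skips zero-probability outcomes under the convention 0 log 0 = 0. The helper names `entropy` and `bernoulli_entropy`, the choice m = 8, and the marginals `px` and `py` are hypothetical illustrations.

```python
import math
from itertools import product


def entropy(probs, base=2.0):
    """Shannon entropy -sum p*log(p); zero-probability outcomes are skipped (0 log 0 = 0)."""
    return -sum(p * math.log(p, base) for p in probs if p > 0)


def bernoulli_entropy(theta, base=2.0):
    """Entropy of Bernoulli(theta), where p(1) = theta and p(0) = 1 - theta."""
    return entropy([1 - theta, theta], base)


# (a) Uniform on {1, ..., m}: should equal log2(m)
m = 8
h_uniform = entropy([1 / m] * m)

# (b)/(c) Evaluate the Bernoulli entropy on a grid over [0, 1] and keep the maximizer
thetas = [i / 1000 for i in range(1001)]
theta_star = max(thetas, key=bernoulli_entropy)

# (d) Joint distribution of independent X and Y: p(x, y) = p(x) * p(y)
px = [0.2, 0.3, 0.5]  # hypothetical marginal of X
py = [0.6, 0.4]       # hypothetical marginal of Y
pxy = [a * b for a, b in product(px, py)]
h_joint = entropy(pxy)

print(f"H(uniform, m={m}) = {h_uniform:.6f}, log2(m) = {math.log2(m):.6f}")
print(f"theta* on grid = {theta_star}, H(theta*) = {bernoulli_entropy(theta_star):.6f}")
print(f"H(X,Y) = {h_joint:.6f}, H(X) + H(Y) = {entropy(px) + entropy(py):.6f}")
```

The grid search only approximates the maximizer and does not prove it. Passing `base=math.e` reports entropies in nats instead of bits.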