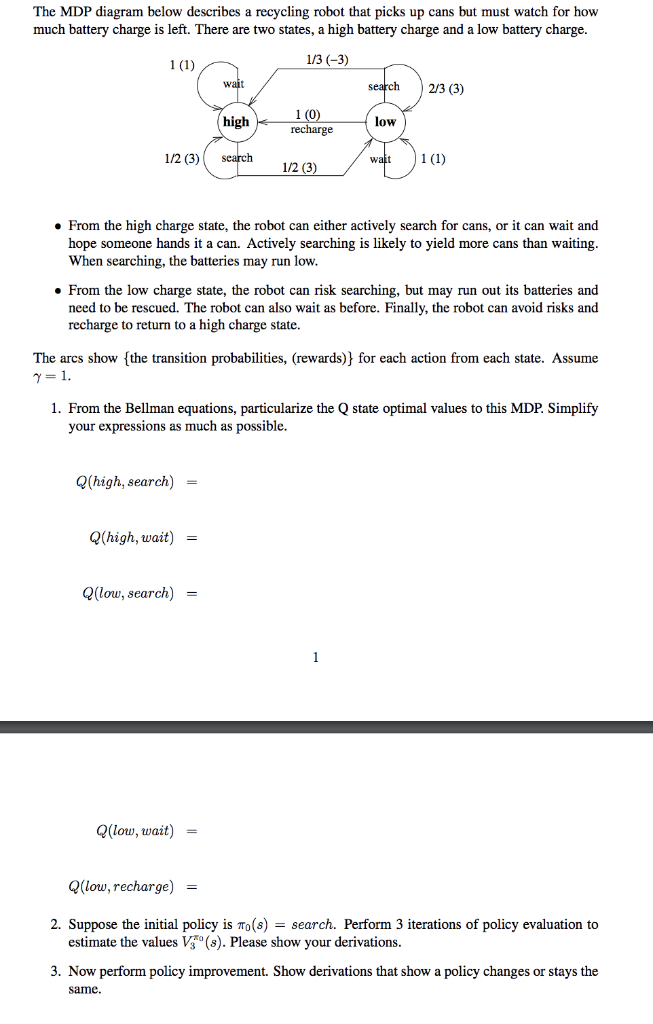

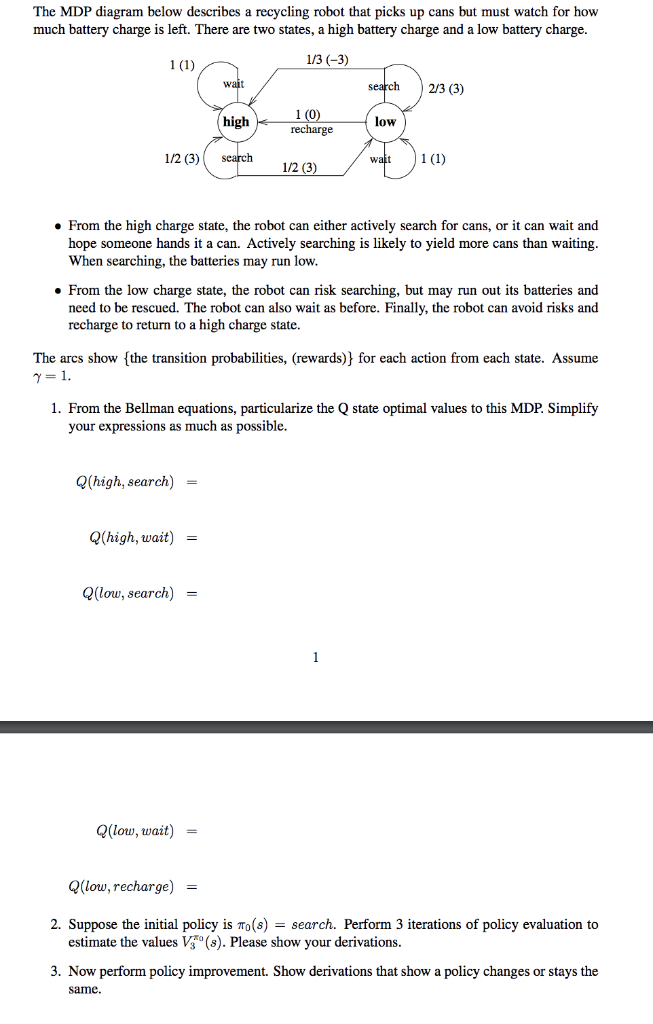

The MDP diagram above describes a recycling robot that picks up cans but must watch how much battery charge is left. There are two states: a high battery charge and a low battery charge.

*Diagram: states high and low; arc labels include 1/3 (-3), 2/3 (3), 1/2 (3), 1/2 (3); action recharge.*

From the high charge state, the robot can either actively search for cans or wait and hope someone hands it a can. Actively searching is likely to yield more cans than waiting, but while searching the battery may run low. From the low charge state, the robot can risk searching, but it may run its battery down and need to be rescued. The robot can also wait, as before. Finally, the robot can avoid risk and recharge, returning to the high charge state.

The arcs show the transition probabilities (with rewards in parentheses) for each action from each state. Assume γ = …

1. From the Bellman equations, particularize the optimal Q-values to this MDP. Simplify your expressions as much as possible.
   - Q(high, search) =
   - Q(high, wait) =
   - Q(low, search) =
   - Q(low, wait) =
   - Q(low, recharge) =
2. Suppose the initial policy is π₀(s) = search. Perform 3 iterations of policy evaluation to estimate the values V(s). Show your derivations.
3. Now perform policy improvement. Show the derivations that determine whether the policy changes or stays the same.
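For reference, question 1 can be set up symbolically. This is a sketch that assumes the structure of the standard recycling-robot example from Sutton and Barto's *Reinforcement Learning: An Introduction*: waiting never changes the battery level, recharging always returns to high, searching from high stays high with probability α, and searching from low stays low with probability β and otherwise ends in a rescue back to high. The symbols α, β, r_search, r_wait, r_rescue and r_recharge are placeholders to be read off the diagram; if an action's arcs carry different rewards, r_search stands for the probability-weighted expected reward of a non-rescue search.

$$
\begin{aligned}
Q^*(s,a) &= \sum_{s'} p(s' \mid s,a)\,\bigl[r(s,a,s') + \gamma \max_{a'} Q^*(s',a')\bigr] \\[4pt]
Q^*(\text{high},\text{search}) &= r_{\text{search}} + \gamma\bigl[\alpha\,V^*(\text{high}) + (1-\alpha)\,V^*(\text{low})\bigr] \\
Q^*(\text{high},\text{wait}) &= r_{\text{wait}} + \gamma\,V^*(\text{high}) \\
Q^*(\text{low},\text{search}) &= \beta\,r_{\text{search}} + (1-\beta)\,r_{\text{rescue}} + \gamma\bigl[\beta\,V^*(\text{low}) + (1-\beta)\,V^*(\text{high})\bigr] \\
Q^*(\text{low},\text{wait}) &= r_{\text{wait}} + \gamma\,V^*(\text{low}) \\
Q^*(\text{low},\text{recharge}) &= r_{\text{recharge}} + \gamma\,V^*(\text{high}) \\[4pt]
\text{where}\quad V^*(\text{high}) &= \max\{Q^*(\text{high},\text{search}),\ Q^*(\text{high},\text{wait})\} \\
V^*(\text{low}) &= \max\{Q^*(\text{low},\text{search}),\ Q^*(\text{low},\text{wait}),\ Q^*(\text{low},\text{recharge})\}
\end{aligned}
$$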
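Questions 2 and 3 can be checked with a short script. The sketch below runs iterative policy evaluation with synchronous sweeps starting from V₀ = 0 (one common convention; a course may instead use in-place updates), then one greedy policy-improvement step. The transition table is hypothetical: only the arc labels 1/2 (3), 1/2 (3), 2/3 (3) and 1/3 (-3) survive from the diagram, and assigning them to high-search and low-search is a guess. The wait and recharge rewards and γ = 0.9 are placeholders, not values from the problem.

```python
from typing import Dict, List, Tuple

State = str
Action = str
# One outgoing arc: (probability, next_state, reward)
Transition = Tuple[float, State, float]

# HYPOTHETICAL numbers: replace with the values from the diagram.
GAMMA = 0.9  # hypothetical discount factor

MDP: Dict[State, Dict[Action, List[Transition]]] = {
    "high": {
        "search": [(1 / 2, "high", 3.0), (1 / 2, "low", 3.0)],  # guessed arcs
        "wait": [(1.0, "high", 1.0)],  # hypothetical reward
    },
    "low": {
        # 2/3: keep searching on low battery; 1/3: rescued, back to high
        "search": [(2 / 3, "low", 3.0), (1 / 3, "high", -3.0)],  # guessed arcs
        "wait": [(1.0, "low", 1.0)],  # hypothetical reward
        "recharge": [(1.0, "high", 0.0)],  # hypothetical reward
    },
}


def q_value(V: Dict[State, float], s: State, a: Action, gamma: float = GAMMA) -> float:
    """One-step lookahead: sum over arcs of p * (r + gamma * V[s'])."""
    return sum(p * (r + gamma * V[s2]) for p, s2, r in MDP[s][a])


def evaluate_policy(policy: Dict[State, Action], sweeps: int,
                    gamma: float = GAMMA) -> Dict[State, float]:
    """Iterative policy evaluation: synchronous sweeps starting from V_0 = 0."""
    V = {s: 0.0 for s in MDP}
    for k in range(1, sweeps + 1):
        # The new dict is built entirely from the previous sweep's V.
        V = {s: q_value(V, s, policy[s], gamma) for s in MDP}
        print(f"V_{k} = {V}")
    return V


def improve_policy(V: Dict[State, float], gamma: float = GAMMA) -> Dict[State, Action]:
    """Greedy policy improvement: choose argmax_a Q(s, a) in every state."""
    new_policy = {}
    for s, actions in MDP.items():
        qs = {a: q_value(V, s, a, gamma) for a in actions}
        print(f"Q({s}, .) = {qs}")
        new_policy[s] = max(qs, key=qs.get)
    return new_policy


pi0 = {"high": "search", "low": "search"}
V3 = evaluate_policy(pi0, sweeps=3)
pi1 = improve_policy(V3)
print("improved policy:", pi1, "(changed)" if pi1 != pi0 else "(unchanged)")
```

With these placeholder numbers, three sweeps give V₃(high) ≈ 6.285 and V₃(low) ≈ 3.94, and the improvement step keeps search in high but switches low to recharge (Q ≈ 5.657 versus 5.250 for search). Substituting the real diagram values reproduces the hand derivation for questions 2 and 3.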