Answered step by step

Verified Expert Solution

Question

1 Approved Answer

The question below is about the course Introduction To Deep Learning. We have already constructed a model but would like to check if we have

The question below is about the course Introduction To Deep Learning. We have already constructed a model but would like to check if we have it right. We would appreciate it if you could also explain.

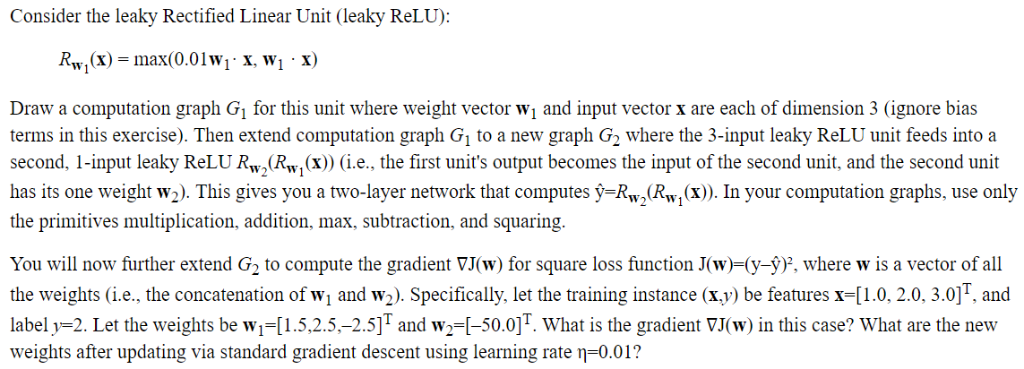

Consider the leaky Rectified Linear Unit (leaky ReLU): Draw a computation graph Gi for this unit where weight vector wi and input vector x are each of dimension 3 (ignore bias terms in this exercise). Then extend computation graph G1 to a new graph G, where the 3-input leaky ReL unit feeds into a second, 1-input leaky ReLU Rw^(RwX)) (i.e., the first unit's output becomes the input of the second unit, and the second unit has its one weight w2). This gives you a two-layer network that computes y-Rw^(Rw,(X). In your computation graphs, use only the primitives multiplication, addition, max, subtraction, and squaring. You will now further extend G2 to compute the gradient J(w) for square loss function J(w-y-9), where w is a vector of all the weights (i.e, the concatenation of wj and w2). Specifically, let the training instance (x.y) be features x [1.0, 2.0, 3.0], and label y-2. Let the weights be w-[1.5.2.5,-2.5]T and w2-I-50.0]T. What is the gradient VJ(w) in this case? What are the new weights after updating via standard gradient descent using learning rate n-0.01? Consider the leaky Rectified Linear Unit (leaky ReLU): Draw a computation graph Gi for this unit where weight vector wi and input vector x are each of dimension 3 (ignore bias terms in this exercise). Then extend computation graph G1 to a new graph G, where the 3-input leaky ReL unit feeds into a second, 1-input leaky ReLU Rw^(RwX)) (i.e., the first unit's output becomes the input of the second unit, and the second unit has its one weight w2). This gives you a two-layer network that computes y-Rw^(Rw,(X). In your computation graphs, use only the primitives multiplication, addition, max, subtraction, and squaring. You will now further extend G2 to compute the gradient J(w) for square loss function J(w-y-9), where w is a vector of all the weights (i.e, the concatenation of wj and w2). Specifically, let the training instance (x.y) be features x [1.0, 2.0, 3.0], and label y-2. Let the weights be w-[1.5.2.5,-2.5]T and w2-I-50.0]T. What is the gradient VJ(w) in this case? What are the new weights after updating via standard gradient descent using learning rate n-0.01

Step by Step Solution

There are 3 Steps involved in it

Step: 1

Get Instant Access to Expert-Tailored Solutions

See step-by-step solutions with expert insights and AI powered tools for academic success

Step: 2

Step: 3

Ace Your Homework with AI

Get the answers you need in no time with our AI-driven, step-by-step assistance

Get Started