
Question


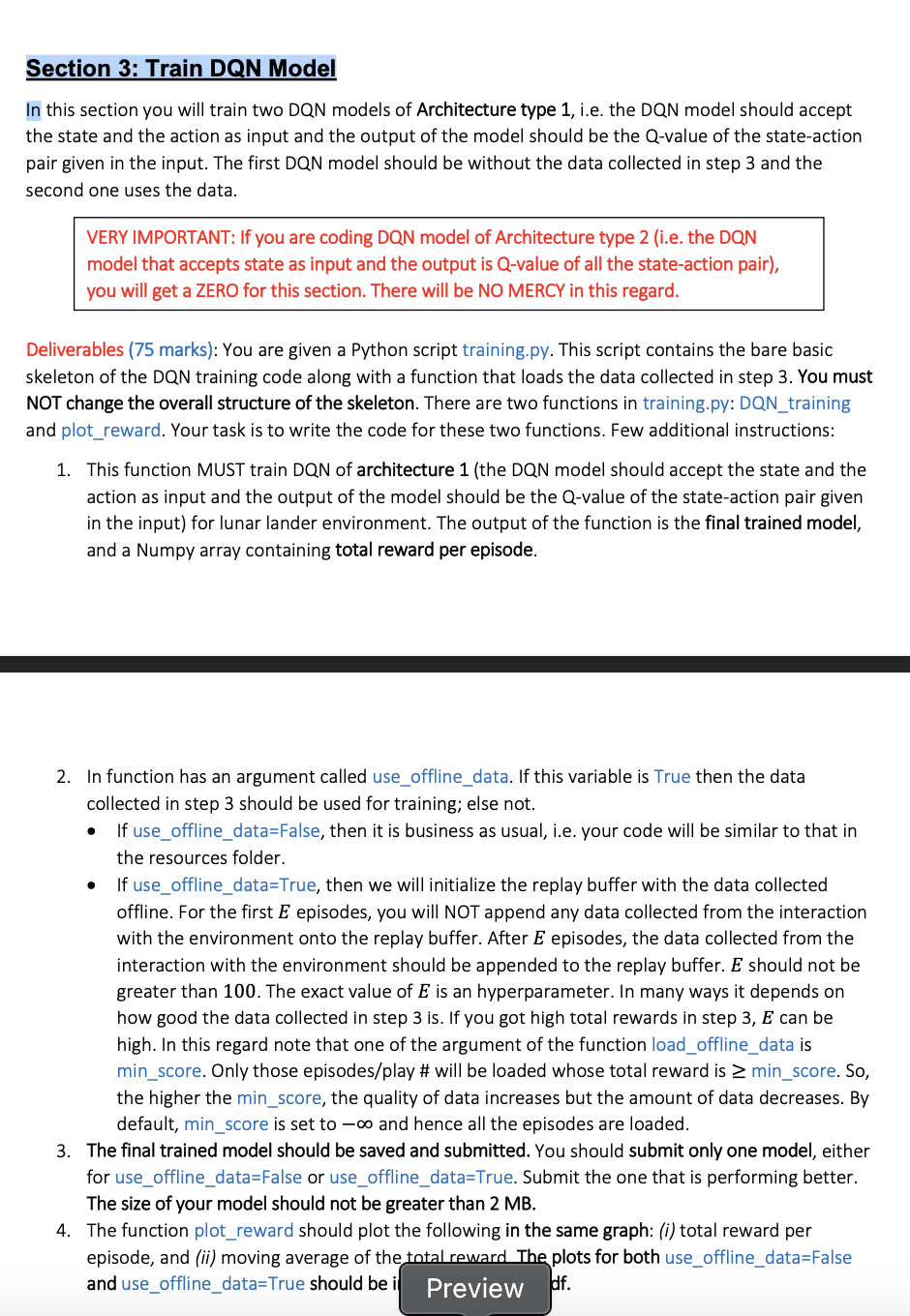

Below is the training.py code. Give DQN architecture 1, which takes a state-action pair as input and outputs one Q-value for that action. Also make sure the reward trend increases as the number of episodes increases; if the rewards do not increase with the episodes, there is no point.
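Architecture 1 can be sketched as follows. This is a minimal forward-pass-only sketch in plain NumPy (no training, no deep-learning framework), assuming the 8-dimensional Lunar Lander observation, 4 discrete actions, a one-hot action encoding concatenated to the state, and one hidden ReLU layer. The class `StateActionQNet` and its methods are hypothetical names for illustration.

```python
import numpy as np

STATE_DIM = 8   # Lunar Lander observation size
N_ACTIONS = 4   # Lunar Lander discrete actions


class StateActionQNet:
    """Architecture 1 (sketch): input = [state, one_hot(action)], output = one Q-value."""

    def __init__(self, hidden=64, seed=0):
        rng = np.random.default_rng(seed)
        in_dim = STATE_DIM + N_ACTIONS
        self.W1 = rng.normal(0.0, np.sqrt(2.0 / in_dim), size=(in_dim, hidden))
        self.b1 = np.zeros(hidden)
        self.W2 = rng.normal(0.0, np.sqrt(1.0 / hidden), size=(hidden, 1))
        self.b2 = np.zeros(1)

    def q_value(self, states, actions):
        """Q(s, a) for a batch: states (B, 8), integer actions (B,) -> Q-values (B,)."""
        one_hot = np.eye(N_ACTIONS)[actions]
        x = np.concatenate([states, one_hot], axis=1)
        h = np.maximum(0.0, x @ self.W1 + self.b1)   # hidden ReLU layer
        return (h @ self.W2 + self.b2)[:, 0]          # single scalar output per pair

    def all_q_values(self, states):
        """Evaluate every action by pairing each state with each action -> (B, 4)."""
        b = states.shape[0]
        rep_states = np.repeat(states, N_ACTIONS, axis=0)    # s0,s0,s0,s0,s1,...
        rep_actions = np.tile(np.arange(N_ACTIONS), b)       # 0,1,2,3,0,...
        return self.q_value(rep_states, rep_actions).reshape(b, N_ACTIONS)

    def greedy_action(self, state):
        """argmax_a Q(state, a) for a single state of shape (8,)."""
        return int(np.argmax(self.all_q_values(state[None, :])[0]))
```

The design cost of architecture 1 is visible in `all_q_values`: choosing an action needs one Q evaluation per action (batched here), whereas a state-only DQN outputs all four Q-values in a single pass.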

Code

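```python
import numpy as np
import pandas as pd
import gymnasium as gym


def load_offline_data(path, min_score):
    state_data = []
    action_data = []
    reward_data = []
    next_state_data = []
    terminated_data = []

    dataset = pd.read_csv(path)
    dataset_group = dataset.groupby('Play #')  # one group per recorded episode

    for play_no, df in dataset_group:
        # Assumed column order: 0 = Play #, 1 = state, 2 = action, 3 = reward,
        # 4 = next state, 5 = terminated.
        state = np.array(df.iloc[:, 1])
        # Vectors are stored as strings like "[0.1 0.2 ...]": strip the brackets and parse.
        state = np.array([np.fromstring(row[1:-1], dtype=np.float32, sep=' ') for row in state])
        action = np.array(df.iloc[:, 2]).astype(int)
        reward = np.array(df.iloc[:, 3]).astype(np.float32)
        next_state = np.array(df.iloc[:, 4])
        next_state = np.array([np.fromstring(row[1:-1], dtype=np.float32, sep=' ') for row in next_state])
        terminated = np.array(df.iloc[:, 5]).astype(int)

        # Keep only episodes whose total reward reaches min_score.
        total_reward = np.sum(reward)
        if total_reward >= min_score:
            state_data.append(state)
            action_data.append(action)
            reward_data.append(reward)
            next_state_data.append(next_state)
            terminated_data.append(terminated)

    # Stack the kept episodes into flat transition arrays.
    state_data = np.concatenate(state_data)
    action_data = np.concatenate(action_data)
    reward_data = np.concatenate(reward_data)
    next_state_data = np.concatenate(next_state_data)
    terminated_data = np.concatenate(terminated_data)

    return state_data, action_data, reward_data, next_state_data, terminated_data
```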
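To check the CSV layout the loader expects and how the `min_score` filter behaves, here is a small sketch on an in-memory two-episode dataset. It assumes the columns `Play #`, `State`, `Action`, `Reward`, `Next State`, `Terminated` in that order (only `Play #` appears in the original; the other names are hypothetical), uses 2-dimensional states for brevity instead of 8, and applies the per-episode filter with a vectorized `groupby(...).sum()` instead of the loop; `parse_vectors` and `episodes_above` are hypothetical helpers.

```python
import io

import numpy as np
import pandas as pd

# Hypothetical dataset: episode 0 totals -15, episode 1 totals 150.
CSV = """Play #,State,Action,Reward,Next State,Terminated
0,[0.0 1.0],1,-5.0,[0.1 0.9],0
0,[0.1 0.9],2,-10.0,[0.2 0.8],1
1,[0.0 1.0],0,50.0,[0.0 0.5],0
1,[0.0 0.5],3,100.0,[0.0 0.0],1
"""


def parse_vectors(column):
    """Turn strings like '[0.1 0.2]' into an (N, d) float32 array."""
    return np.array([np.fromstring(row[1:-1], dtype=np.float32, sep=' ') for row in column])


def episodes_above(df, min_score):
    """Return the 'Play #' ids whose summed reward is >= min_score."""
    totals = df.groupby('Play #')['Reward'].sum()
    return totals[totals >= min_score].index.tolist()


df = pd.read_csv(io.StringIO(CSV))
kept = episodes_above(df, min_score=0.0)          # only episode 1 survives
subset = df[df['Play #'].isin(kept)]
states = parse_vectors(subset['State'])           # shape (2, 2)
```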

```python
def plot_reward(total_reward_per_episode, window_length):
    # This function should display:
    # (i) the total reward per episode;
    # (ii) the moving average of the total reward. The moving-average window
    #      should slide by one episode at a time.
    pass
```
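One way to fill in the plotting stub is sketched below, assuming matplotlib is available (imported inside the function so the moving-average helper runs without it). The hypothetical helper `moving_average` averages each window of `window_length` consecutive episodes and slides by one episode, so N episodes give N - window_length + 1 averages; each average is drawn at the last episode of its window.

```python
import numpy as np


def moving_average(values, window_length):
    """Mean of every run of `window_length` consecutive episodes, sliding by one."""
    values = np.asarray(values, dtype=float)
    if window_length > values.size:
        return np.array([])  # not enough episodes for a single full window
    kernel = np.ones(window_length) / window_length
    return np.convolve(values, kernel, mode='valid')


def plot_reward(total_reward_per_episode, window_length):
    import matplotlib.pyplot as plt  # lazy import: only needed for plotting

    episodes = np.arange(1, len(total_reward_per_episode) + 1)
    avg = moving_average(total_reward_per_episode, window_length)
    plt.figure(figsize=(8, 4))
    plt.plot(episodes, total_reward_per_episode, alpha=0.4, label='Total reward per episode')
    plt.plot(episodes[window_length - 1:], avg, label=f'{window_length}-episode moving average')
    plt.xlabel('Episode')
    plt.ylabel('Total reward')
    plt.legend()
    plt.show()
```

An upward-sloping moving-average curve is the "increasing reward trend" the question asks for; the raw per-episode curve is usually too noisy to judge by eye.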

```python
def DQN_training(env, offline_data, use_offline_data):
    # The function should return the final trained DQN model and the total
    # reward of every episode.
    pass
```
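Inside `DQN_training`, the key difference for architecture 1 is how the Q-learning target is computed: because the network scores one (state, action) pair at a time, max over a' of Q(s', a') needs every next state paired with every action. The sketch below computes y = r + gamma * (1 - terminated) * max_a' Q(s', a'); `td_targets` and the `q_fn(states, actions)` interface (for example, a target network's batched forward pass) are hypothetical. The online network would then be fitted to `y` on the sampled (s, a) pairs with a mean-squared-error loss.

```python
import numpy as np

N_ACTIONS = 4  # Lunar Lander discrete actions


def td_targets(q_fn, rewards, next_states, terminated, gamma=0.99):
    """Q-learning targets for a state-action Q network.

    q_fn(states (M, d), actions (M,)) -> Q-values (M,)  (hypothetical interface).
    """
    b = next_states.shape[0]
    rep_next = np.repeat(next_states, N_ACTIONS, axis=0)   # each s' once per action
    rep_act = np.tile(np.arange(N_ACTIONS), b)
    next_q = q_fn(rep_next, rep_act).reshape(b, N_ACTIONS)
    # Terminal transitions have no bootstrap term.
    return rewards + gamma * (1.0 - terminated) * next_q.max(axis=1)
```

Usage with a toy `q_fn` that returns the action index as its Q-value (so the best next action is worth 3):
`td_targets(lambda s, a: a.astype(float), np.array([1.0, 2.0]), np.zeros((2, 8)), np.array([0.0, 1.0]), gamma=0.5)` gives `[2.5, 2.0]`.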

```python
# Initiate the Lunar Lander environment.
# NO RENDERING: it slows down the training process.
env = gym.make('LunarLander-v2')  # registered as 'LunarLander-v3' in Gymnasium >= 1.0

# Load the offline data collected earlier and process the dataset.
path = 'lunar_dataset.csv'  # Path to the collected dataset.
min_score = -np.inf  # Minimum total reward for an episode to be used in training.
offline_data = load_offline_data(path, min_score)

# Train the DQN model of architecture type 1.
use_offline_data = True  # If True, the offline data is used; otherwise it is not.
final_model, total_reward_per_episode = DQN_training(env, offline_data, use_offline_data)

# Save the final model.
final_model.save('lunar_lander_model.h5')  # This line is for Keras. Replace it with the appropriate code for your framework.

# Plot the reward per episode and the moving-average reward.
window_length = ...  # Window length for the moving-average reward; set to an integer.
plot_reward(total_reward_per_episode, window_length)

env.close()
```
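When `use_offline_data` is True, one common way to use the offline transitions is to pre-fill the replay buffer with them before online interaction starts; whether the assignment intends exactly this is an assumption. The sketch below is a simple FIFO buffer (the class name `ReplayBuffer` is hypothetical) whose `add_offline` method takes the five arrays returned by `load_offline_data`; new online transitions gradually push the oldest offline ones out once capacity is reached.

```python
import random
from collections import deque

import numpy as np


class ReplayBuffer:
    """Fixed-size FIFO store of (s, a, r, s', terminated) transitions (sketch)."""

    def __init__(self, capacity, seed=0):
        self.buffer = deque(maxlen=capacity)  # oldest transitions drop out first
        self.rng = random.Random(seed)

    def add(self, s, a, r, s_next, terminated):
        self.buffer.append((s, a, r, s_next, terminated))

    def add_offline(self, offline_data):
        """Pre-fill from (states, actions, rewards, next_states, terminated) arrays."""
        for transition in zip(*offline_data):
            self.add(*transition)

    def sample(self, batch_size):
        """Uniformly sample a batch and stack each field into an array."""
        batch = self.rng.sample(list(self.buffer), batch_size)
        s, a, r, s_next, term = map(np.array, zip(*batch))
        return s, a, r.astype(np.float32), s_next, term.astype(np.float32)

    def __len__(self):
        return len(self.buffer)
```

Converting the deque to a list on every `sample` call keeps the sketch short but costs O(capacity) per batch; a preallocated NumPy ring buffer avoids that in a real training loop.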
