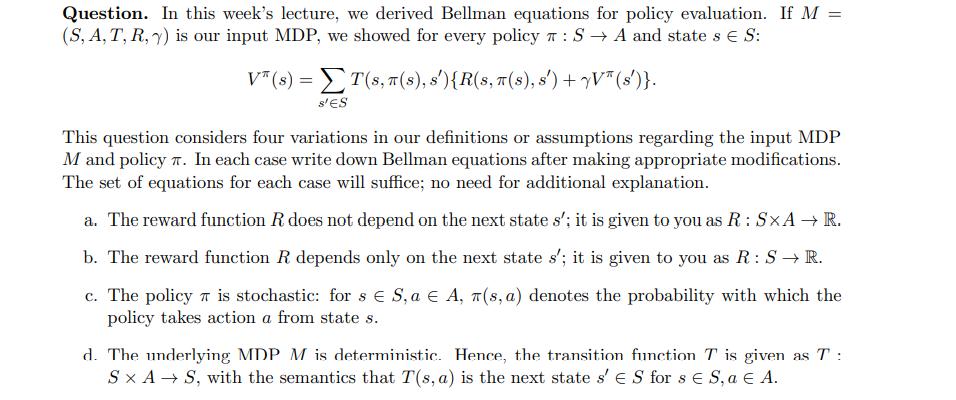

Question: we derived Bellman equations for policy evaluation. If M = (S, A, T, R, ) is our input MDP, we showed for every policy

we derived Bellman equations for policy evaluation. If M = (S, A, T, R, ) is our input MDP, we showed for every policy : SA and state s S: T(S, T(S), s'){R(s, n(s), s') + V (s')}. V* (s) = S'ES This question considers four variations in our definitions or assumptions regarding the input MDP M and policy. In each case write down Bellman equations after making appropriate modifications. The set of equations for each case will suffice; no need for additional explanation. a. The reward function R does not depend on the next state s'; it is given to you as R: SxA R. b. The reward function R depends only on the next state s'; it is given to you as R: S R. c. The policy is stochastic: for s S, a EA, (s, a) denotes the probability with which the policy takes action a from state s. d. The underlying MDP M is deterministic. Hence, the transition function T is given as T SX A S, with the semantics that T(s, a) is the next state s' ES for s E S, a A.

Step by Step Solution

There are 3 Steps involved in it

The detailed ... View full answer

Get step-by-step solutions from verified subject matter experts