Question

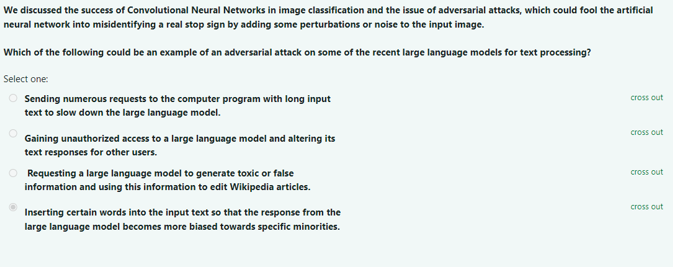

We discussed the success of Convolutional Neural Networks in image classification and the issue of adversarial attacks, which could fool the artificial neural network into misidentifying a real stop sign by adding some perturbations or noise to the input image.

Which of the following could be an example of an adversarial attack on some of the recent large language models for text processing?

Select one:

Sending numerous requests to the computer program with long input text to slow down the large language model.

Gaining unauthorized access to a large language model and altering its text responses for other users.

Requesting a large language model to generate toxic or false information and using this information to edit Wikipedia articles.

Inserting certain words into the input text so that the response from the large language model becomes more biased towards specific minorities.
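
The image perturbation described in the question can be illustrated on a toy model. The following is a minimal sketch, assuming a hypothetical three-feature logistic classifier standing in for a CNN; the weights, input values, and `epsilon` are made up for demonstration. The perturbation rule is a sign-of-gradient step (in the style of the fast gradient sign method), which the question itself does not name.

```python
import math


def predict(weights, bias, x):
    """Probability of class 1 under a simple logistic (linear) classifier."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def sign(v):
    """Return -1, 0, or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def perturb(weights, x, true_label, epsilon):
    """Move each feature by at most epsilon in the direction that raises the loss.

    For logistic loss the gradient w.r.t. feature i is (p - y) * w_i.
    Because 0 < p < 1, the sign of (p - y) is -1 when y = 1 and +1 when y = 0.
    """
    direction = -1 if true_label == 1 else 1
    return [xi + epsilon * direction * sign(w) for w, xi in zip(weights, x)]


# Hypothetical toy model and input (assumptions, not from the question).
weights = [2.0, -1.0, 0.5]
bias = 0.0
x = [0.4, 0.1, 0.2]  # treated as a correctly classified class-1 input

x_adv = perturb(weights, x, true_label=1, epsilon=0.3)

print("clean prediction:      ", predict(weights, bias, x))
print("adversarial prediction:", predict(weights, bias, x_adv))
```

Each feature changes by no more than `epsilon`, yet the predicted class flips from 1 to 0 for this toy input. The same idea, a small crafted change to the input that changes the model's output, is what carries over from images to text.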
