Question


What more information do you need? CS 232 Introduction to C and Unix, Project 3: Building a Web Search Engine. Crawler is due Sunday, April 9


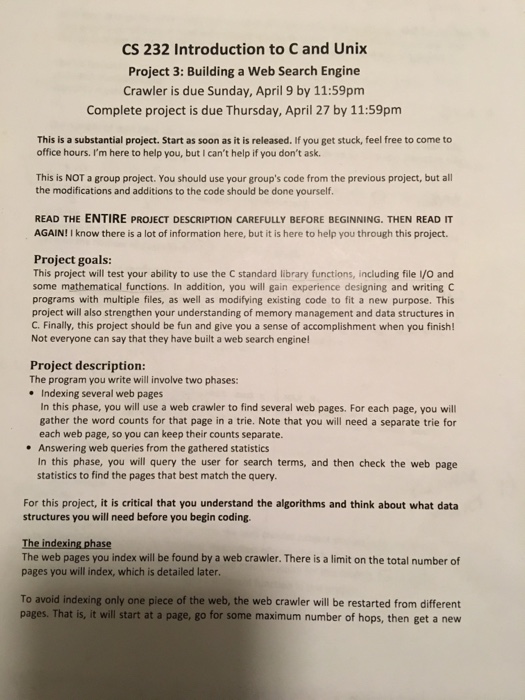

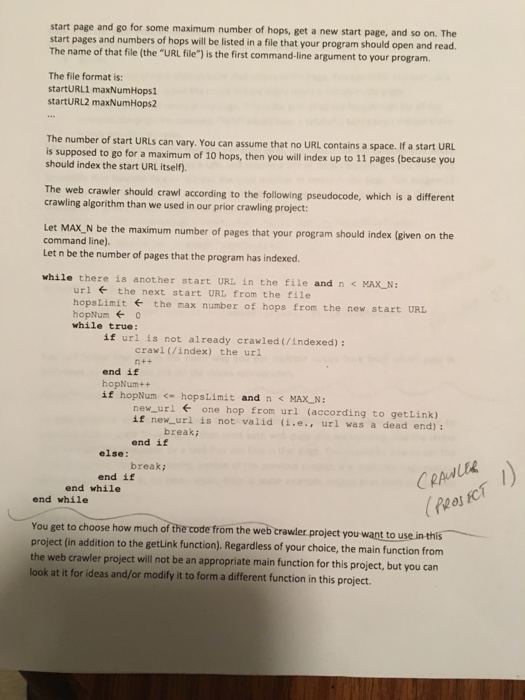

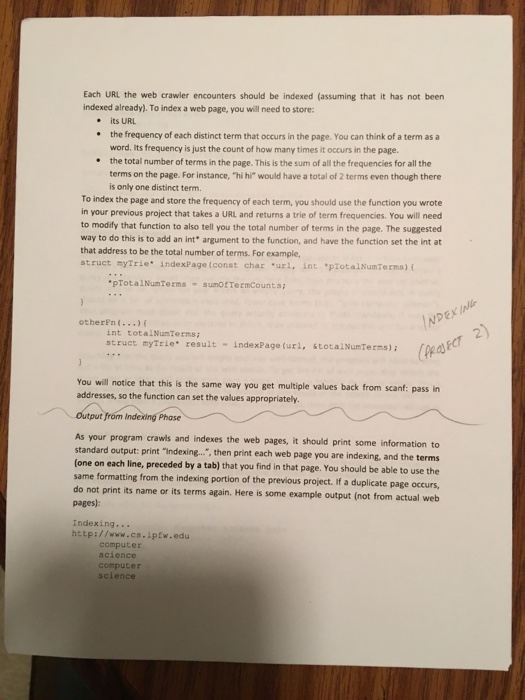

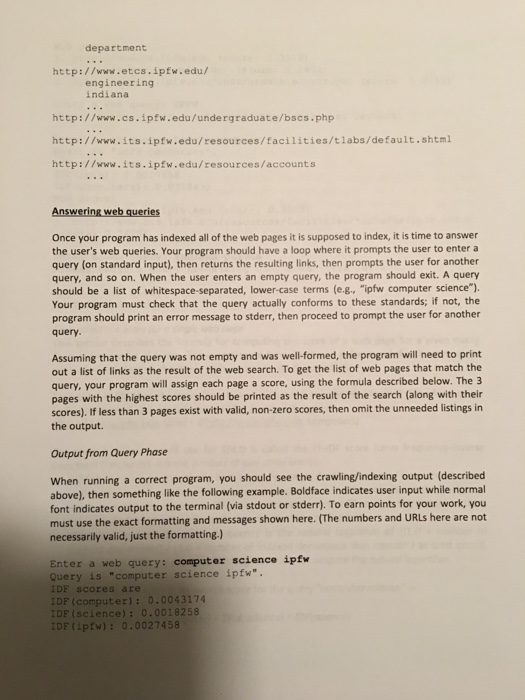

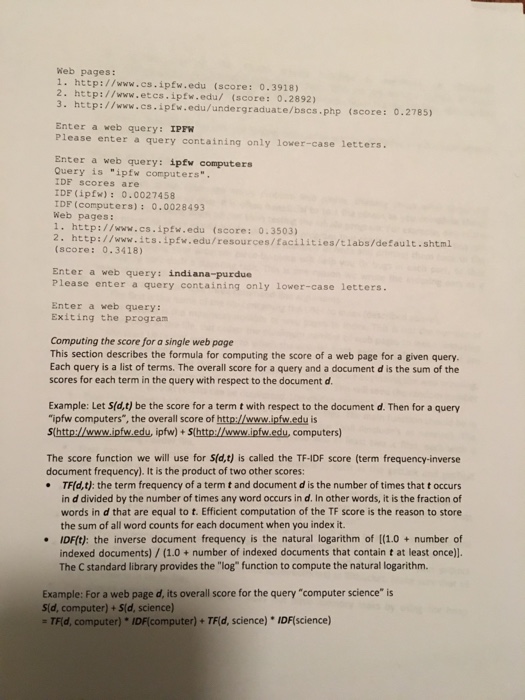

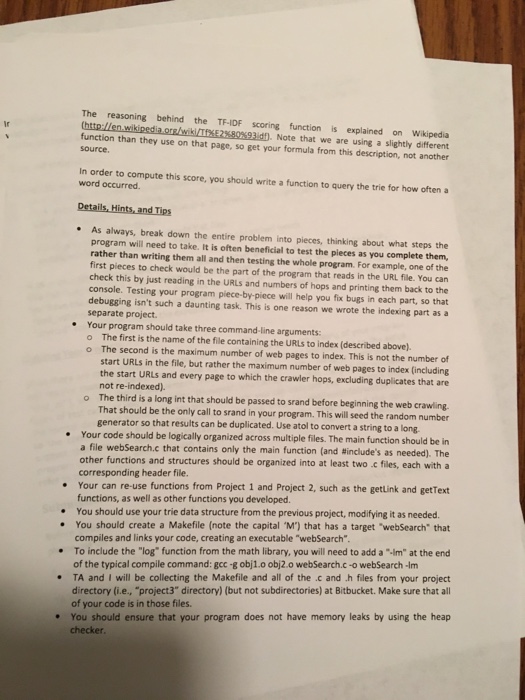

CS 232 Introduction to C and Unix, Project 3: Building a Web Search Engine

Crawler is due Sunday, April 9 by 11:59pm. Complete project is due Thursday, April 27 by 11:59pm.

This is a substantial project. Start as soon as it is released. If you get stuck, feel free to come to office hours. I'm here to help you, but I can't help if you don't ask.

This is NOT a group project. You should use your group's code from the previous project, but all the modifications and additions to the code should be done by yourself.

READ THE ENTIRE PROJECT DESCRIPTION CAREFULLY BEFORE BEGINNING. THEN READ IT AGAIN! I know there is a lot of information here, but it is here to help you through this project.

Project goals: This project will test your ability to use the C standard library functions, including file I/O and some mathematical functions. In addition, you will gain experience designing and writing C programs with multiple files, as well as modifying existing code to fit a new purpose. This project will also strengthen your understanding of memory management and data structures in C. Finally, this project should be fun and give you a sense of accomplishment when you finish! Not everyone can say that they have built a web search engine!

Project description: The program you write will involve two phases:

Indexing several web pages. In this phase, you will use a web crawler to find several web pages. For each page, you will gather the word counts for that page in a trie. Note that you will need a separate trie for each web page, so that you can keep their counts separate.

Answering web queries from the gathered statistics. In this phase, you will query the user for search terms, and then check the web page statistics to find the pages that best match the query.

For this project, it is critical that you understand the algorithms and think about what data structures you will need before you begin coding.

The indexing phase: The web pages you index will be found by a web crawler.
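
The indexing phase keeps one trie of word counts per page. Below is a minimal sketch in C of such a per-page trie, assuming that a "word" is a run of ASCII letters stored in lowercase and that each node only needs a count. All names (`TrieNode`, `trie_new_node`, `trie_add_word`, `trie_get_count`, `trie_index_text`, `trie_free`, `MAX_WORD_LEN`) are hypothetical and are not taken from the course's starter code or the previous project.

```c
#include <assert.h>
#include <stdlib.h>

/* Assumption: words are ASCII letters only, stored lowercase (26 children). */
#define ALPHABET_SIZE 26
#define MAX_WORD_LEN 63   /* hypothetical limit; extra letters are dropped */

typedef struct TrieNode {
    struct TrieNode *children[ALPHABET_SIZE];
    int count;            /* times the word ending at this node was seen */
} TrieNode;

/* Allocates an empty node; calloc zeroes the children and the count. */
static TrieNode *trie_new_node(void) {
    return calloc(1, sizeof(TrieNode));
}

/* Maps 'a'..'z' to 0..25; returns -1 for any other character. */
static int letter_index(char c) {
    return (c >= 'a' && c <= 'z') ? c - 'a' : -1;
}

/* Records one occurrence of a lowercase word.
 * Returns 1 on success, 0 on a non-lowercase character or allocation failure. */
static int trie_add_word(TrieNode *root, const char *word) {
    assert(root != NULL && word != NULL);
    TrieNode *node = root;
    for (const char *p = word; *p != '\0'; p++) {
        int i = letter_index(*p);
        if (i < 0) return 0;
        if (node->children[i] == NULL) {
            node->children[i] = trie_new_node();
            if (node->children[i] == NULL) return 0;
        }
        node = node->children[i];
    }
    node->count++;
    return 1;
}

/* Returns how many times word was recorded, or 0 if it never was. */
static int trie_get_count(const TrieNode *root, const char *word) {
    assert(root != NULL && word != NULL);
    const TrieNode *node = root;
    for (const char *p = word; *p != '\0'; p++) {
        int i = letter_index(*p);
        if (i < 0 || node->children[i] == NULL) return 0;
        node = node->children[i];
    }
    return node->count;
}

/* Frees a node and all of its descendants (post-order). */
static void trie_free(TrieNode *node) {
    if (node == NULL) return;
    for (int i = 0; i < ALPHABET_SIZE; i++) trie_free(node->children[i]);
    free(node);
}

/* Splits text into runs of ASCII letters, lowercases each run, and counts it.
 * Returns 1 on success, 0 if any insertion fails. */
static int trie_index_text(TrieNode *root, const char *text) {
    char word[MAX_WORD_LEN + 1];
    size_t len = 0;
    for (const char *p = text; ; p++) {
        char c = *p;
        if (c >= 'A' && c <= 'Z') c = (char)(c - 'A' + 'a');
        if (letter_index(c) >= 0) {
            if (len < MAX_WORD_LEN) word[len++] = c;
        } else if (len > 0) {             /* a word just ended: count it */
            word[len] = '\0';
            if (!trie_add_word(root, word)) return 0;
            len = 0;
        }
        if (*p == '\0') break;
    }
    return 1;
}
```

Since each page needs its own counts, a caller would create a fresh root with `trie_new_node()` for every crawled page and release it with `trie_free()` when the program exits. The fixed 26-pointer array per node spends memory in exchange for lookups whose cost depends only on the length of the word.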
There is a limit on the total number of pages you will index, which is detailed later. To avoid indexing only one piece of the web, the web crawler will be restarted from different pages. That is, it will start at a page, go for some maximum number of hops, then get a new …
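
The restart scheme described in the indexing phase (start at a page, follow links for up to some maximum number of hops, restart from a different page, and never exceed the total page limit) can be sketched as a loop over start pages. This is a self-contained simulation under stated assumptions, not the project's crawler: the in-memory `WEB` table stands in for fetching real pages, and `Page`, `find_page`, `next_link` (take the first link not yet indexed), `crawl`, `MAX_PAGES`, and `MAX_URL` are all hypothetical, because this excerpt does not show the actual link-selection rule or the limit values.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

#define MAX_PAGES 16   /* hypothetical cap on total pages indexed */
#define MAX_URL 64     /* hypothetical maximum URL length */

/* Hypothetical stand-in for the web: each page lists its outgoing links. */
typedef struct {
    const char *url;
    const char *links[3];   /* NULL-terminated, at most 2 real links */
} Page;

static const Page WEB[] = {
    {"a", {"b", "c", NULL}},
    {"b", {"c", NULL}},
    {"c", {"a", NULL}},
    {"x", {"y", NULL}},
    {"y", {NULL}},
};

static const Page *find_page(const char *url) {
    for (size_t i = 0; i < sizeof WEB / sizeof WEB[0]; i++)
        if (strcmp(WEB[i].url, url) == 0) return &WEB[i];
    return NULL;
}

static int already_indexed(char indexed[][MAX_URL], int n, const char *url) {
    for (int i = 0; i < n; i++)
        if (strcmp(indexed[i], url) == 0) return 1;
    return 0;
}

/* Hypothetical hop rule: first outgoing link not yet indexed, or NULL. */
static const char *next_link(const Page *page, char indexed[][MAX_URL], int n) {
    for (int i = 0; page->links[i] != NULL; i++)
        if (!already_indexed(indexed, n, page->links[i])) return page->links[i];
    return NULL;
}

/* Crawls from each start URL for at most max_hops[s] hops, stopping a walk
 * early when a page has no unindexed links, and stopping entirely once
 * max_pages pages are indexed. indexed must hold at least max_pages entries.
 * Returns the number of URLs written to indexed. */
static int crawl(const char *starts[], const int max_hops[], int num_starts,
                 int max_pages, char indexed[][MAX_URL]) {
    assert(starts != NULL && max_hops != NULL && indexed != NULL);
    int n = 0;
    for (int s = 0; s < num_starts && n < max_pages; s++) {
        const char *url = starts[s];
        for (int hop = 0; url != NULL && n < max_pages; hop++) {
            const Page *page = find_page(url);
            if (page == NULL) break;               /* page not in WEB */
            if (!already_indexed(indexed, n, url)) {
                /* A real crawler would fill this page's own trie here. */
                snprintf(indexed[n++], MAX_URL, "%s", url);
            }
            if (hop == max_hops[s]) break;         /* hop budget used up */
            url = next_link(page, indexed, n);
        }
    }
    return n;
}
```

With starts `{"a", "x"}` and hop budgets `{5, 1}`, the first walk indexes a, b, c (then runs out of unindexed links) and the restart from x indexes x, y. Skipping pages that are already indexed is a choice made in this sketch so that overlapping walks do not index the same page twice.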