Question: Which statements about the self-attention mechanism are correct?

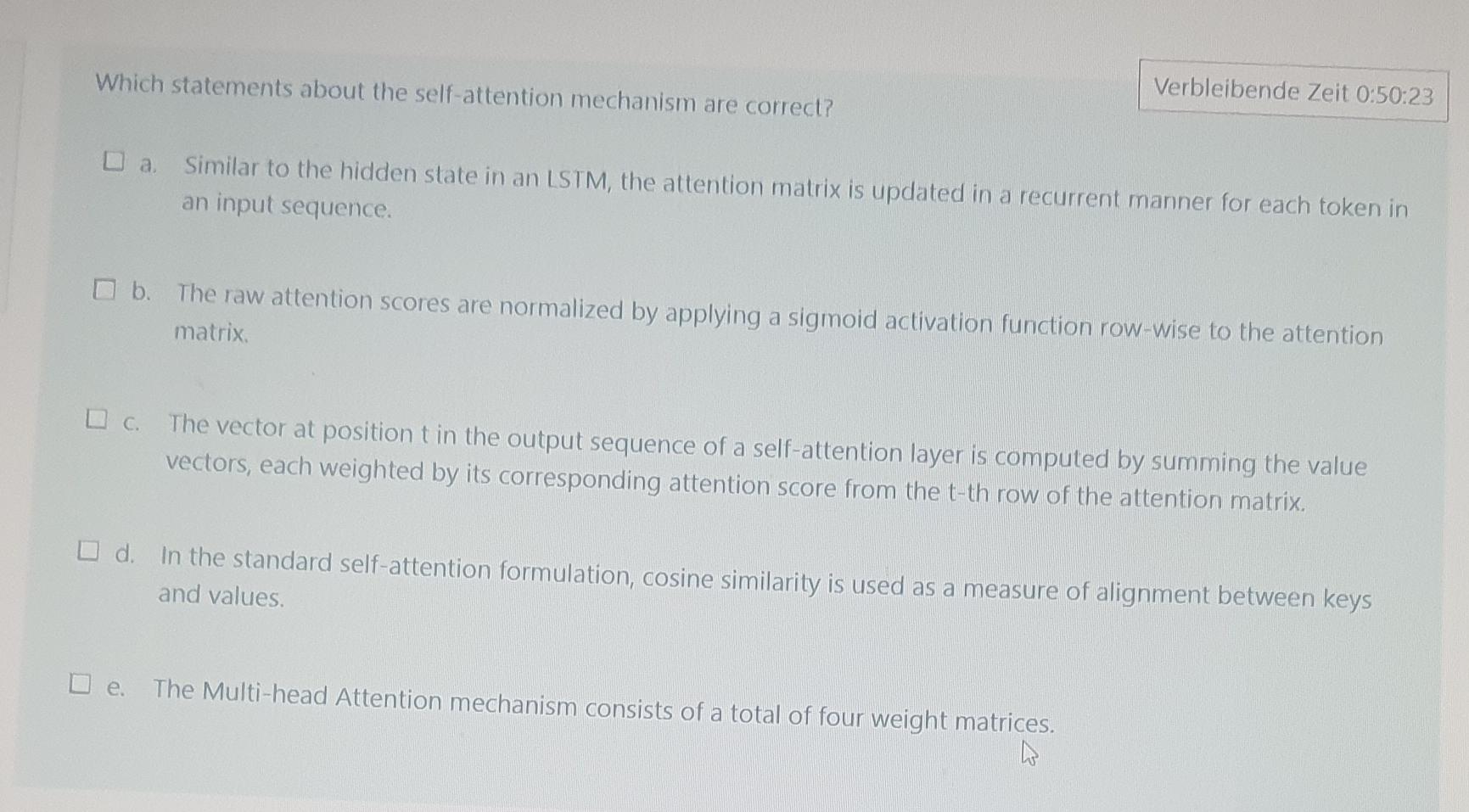

Which statements about the self-attention mechanism are correct?


a. Similar to the hidden state in an LSTM, the attention matrix is updated in a recurrent manner for each token in an input sequence.

b. The raw attention scores are normalized by applying a sigmoid activation function row-wise to the attention matrix.

c. The vector at position i in the output sequence of a self-attention layer is computed by summing the value vectors, each weighted by its corresponding attention score from the i-th row of the attention matrix.

d. In the standard self-attention formulation, cosine similarity is used as a measure of alignment between keys and values.

e. The Multi-head Attention mechanism consists of a total of four weight matrices.
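For reference when checking the statements, here is a minimal sketch of single-head scaled dot-product self-attention in plain Python. It follows the commonly cited formulation softmax(QK^T / sqrt(d_k)) V. The function names, the projection matrices `w_q`, `w_k`, `w_v`, and the toy input are illustrative assumptions, not part of the question, and multi-head attention is not covered.

```python
import math

def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(s - m) for s in row]
    total = sum(exps)
    return [e / total for e in exps]

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]

def self_attention(x, w_q, w_k, w_v):
    """Single-head self-attention over n token vectors (hypothetical helper).

    x is n x d_model; w_q, w_k, w_v are d_model x d_k projections.
    Returns (output, attention_matrix).
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    # Raw scores: scaled dot product between every query and every key,
    # computed for all positions at once (no per-token recurrence).
    scores = [[sum(qi * kj for qi, kj in zip(q_row, k_row)) / math.sqrt(d_k)
               for k_row in k] for q_row in q]
    # Row-wise softmax: each row becomes non-negative weights summing to 1.
    attn = [softmax(row) for row in scores]
    # Output at position i = sum over j of attn[i][j] * v[j].
    out = matmul(attn, v)
    return out, attn

# Toy input: three tokens, identity projections (assumed for illustration).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
out, attn = self_attention(x, identity, identity, identity)
```

Each row of `attn` holds the weights that one output position assigns to the value vectors, so `out[i]` can be compared directly against the description of an output vector in the statements above.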
