4. Consider a process $\{X_n, n = 0, 1, \ldots\}$, which takes on the values...

Question:

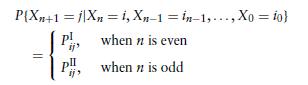

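4. Consider a process $\{X_n, n = 0, 1, \ldots\}$, which takes on the values 0, 1, or 2. Suppose

$$
P\{X_{n+1} = j \mid X_n = i, X_{n-1} = i_{n-1}, \ldots, X_0 = i_0\} =
\begin{cases}
P^{I}_{ij}, & \text{when } n \text{ is even},\\
P^{II}_{ij}, & \text{when } n \text{ is odd},
\end{cases}
$$

where $\sum_{j=0}^{2} P^{I}_{ij} = \sum_{j=0}^{2} P^{II}_{ij} = 1$, $i = 0, 1, 2$. Is $\{X_n, n \ge 0\}$ a Markov chain? If not, then show how, by enlarging the state space, we may transform it into a Markov chain.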
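A sketch of one standard answer, assuming the usual textbook definition of a Markov chain, which requires the one-step probabilities $P_{ij}$ to be the same at every step. Under that definition $\{X_n\}$ is not a Markov chain: the next state depends on $X_n$ and also on whether $n$ is even or odd. (It does still have the Markov property in the time-inhomogeneous sense, because the parity of $n$ is known.) The fix is to carry the parity in the state: $Y_n = (X_n, n \bmod 2)$ takes six values, and, listing the even-time states first, its transition matrix in block form is $\begin{pmatrix} 0 & P^{I} \\ P^{II} & 0 \end{pmatrix}$. The Python below builds that $6 \times 6$ matrix; the helper names `enlarged_matrix` and `matmul` and the numeric entries of `P_I` and `P_II` are hypothetical, chosen only for illustration.

```python
from fractions import Fraction

# Hypothetical example matrices (not from the exercise); each row sums to 1.
P_I = [
    [Fraction(1, 2), Fraction(1, 2), Fraction(0)],
    [Fraction(0), Fraction(1, 2), Fraction(1, 2)],
    [Fraction(1, 3), Fraction(1, 3), Fraction(1, 3)],
]
P_II = [
    [Fraction(1), Fraction(0), Fraction(0)],
    [Fraction(1, 4), Fraction(1, 2), Fraction(1, 4)],
    [Fraction(0), Fraction(0), Fraction(1)],
]


def enlarged_matrix(p_even, p_odd):
    """Transition matrix of Y_n = (X_n, n mod 2) (hypothetical helper).

    State (i, parity) is stored at index parity * m + i, so indices
    0..m-1 mean "X_n = i at an even time" and m..2m-1 mean odd times.
    """
    m = len(p_even)
    q = [[Fraction(0)] * (2 * m) for _ in range(2 * m)]
    for i in range(m):
        for j in range(m):
            # Even time: step with P^I, and the next time is odd.
            q[i][m + j] = Fraction(p_even[i][j])
            # Odd time: step with P^II, and the next time is even.
            q[m + i][j] = Fraction(p_odd[i][j])
    return q


def matmul(a, b):
    """Plain-list matrix product (exact with Fraction entries)."""
    return [
        [sum(a[r][k] * b[k][c] for k in range(len(b))) for c in range(len(b[0]))]
        for r in range(len(a))
    ]


Q = enlarged_matrix(P_I, P_II)
# Every row of the enlarged matrix is a probability distribution.
assert all(sum(row) == 1 for row in Q)

# Two steps from an even time: the even-to-even block of Q^2 equals P^I P^II.
Q2 = matmul(Q, Q)
PP = matmul(P_I, P_II)
assert all(Q2[i][j] == PP[i][j] for i in range(3) for j in range(3))
```

Because the parity flips deterministically at every step, $Y_n$ has a single, fixed transition matrix, which is exactly what the enlarged state space buys. Squaring it reproduces $P^{I}P^{II}$ in the even-to-even block, matching two steps of the original process started at an even time.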
