Question:

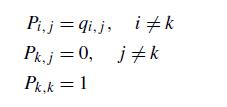

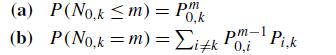

Consider a Markov chain with transition probabilities $q_{i,j}$, $i, j \ge 0$. Let $N_{0,k}$, $k \ne 0$, be the number of transitions, starting in state 0, until this Markov chain enters state $k$. Consider another Markov chain with transition probabilities $P_{i,j}$, $i, j \ge 0$, where

Give explanations as to whether the following identities are true or false.
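To experiment with the quantity in the question, the distribution of the number of transitions from state 0 until the chain first enters state k can be computed numerically. The sketch below is only an illustration: the 3-state matrix `q`, the target `k = 2`, and the helper name `first_passage_pmf` are hypothetical choices, not part of the original problem. It does not use the second chain, whose definition is not reproduced in the text here.

```python
def first_passage_pmf(q, k, max_steps, start=0):
    """Return [P(N = 1), ..., P(N = max_steps)], where N is the number of
    transitions, starting in `start`, until the chain first enters state k."""
    n = len(q)
    # mass[i] = probability of being in state i without having entered k yet
    mass = [0.0] * n
    mass[start] = 1.0
    pmf = []
    for _ in range(max_steps):
        # Take one transition from every state that has not yet hit k.
        nxt = [sum(mass[i] * q[i][j] for i in range(n)) for j in range(n)]
        pmf.append(nxt[k])  # probability of entering k for the first time now
        nxt[k] = 0.0        # drop that mass so later steps count first entries only
        mass = nxt
    return pmf


# Hypothetical transition matrix (each row sums to 1), chosen for illustration.
q = [
    [0.50, 0.50, 0.00],
    [0.25, 0.50, 0.25],
    [0.00, 0.50, 0.50],
]

pmf = first_passage_pmf(q, k=2, max_steps=50)
cdf = [sum(pmf[:m]) for m in range(1, len(pmf) + 1)]  # cdf[m-1] = P(N <= m)
```

The running sums in `cdf` give P(N ≤ m) for the assumed chain, which is the kind of quantity the identities in the question can be checked against by hand or numerically.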
