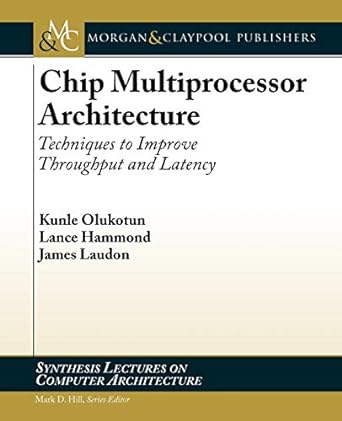

Chip Multiprocessor Architecture: Techniques to Improve Throughput and Latency (1st Edition)

Authors:

Kunle Olukotun

Cover Type: Hardcover

Condition: Used

In Stock

Shipment time

Expected shipping within 2 days

Popular items with books

Access to 30 Million+ solutions

Free ✝

Ask 50 questions from experts

AI-Powered Answers

✝ 7-day trial

Total Price:

$0

List Price: $25.50

Savings: $25.50 (100%)

Solution Manual Includes

Access to 30 Million+ solutions

Ask 50 questions from experts

AI-Powered Answers

24/7 Tutor Help

Detailed solutions for Chip Multiprocessor Architecture: Techniques to Improve Throughput and Latency

Price:

$9.99/month

Book details

ISBN: 159829122X, 978-1598291223

Book publisher: Morgan & Claypool Publishers

Get your hands on the best-selling book Chip Multiprocessor Architecture: Techniques to Improve Throughput and Latency, 1st Edition, for free. Feed your curiosity and let your imagination soar with the best stories, available without hefty price tags. Browse SolutionInn to discover a treasure trove of fiction and non-fiction books where every page leads the reader to an undiscovered world. Start your literary adventure right away and enjoy free shipping of these complimentary books to your door.