Question:

Consider a single Boolean random variable Y (the “classification”). Let the prior probability P(Y = true) be π. Let’s try to find π, given a training set D = (y1, ..., yN) with N independent samples of Y. Furthermore, suppose p of the N are positive and n of the N are negative.

a. Write down an expression for the likelihood of D (i.e., the probability of seeing this particular sequence of examples, given a fixed value of π) in terms of π, p, and n.

b. By differentiating the log likelihood L, find the value of π that maximizes the likelihood.

c. Now suppose we add in k Boolean random variables X1, X2, ..., Xk (the “attributes”) that describe each sample, and suppose we assume that the attributes are conditionally independent of each other given the goal Y. Draw the Bayes net corresponding to this assumption.

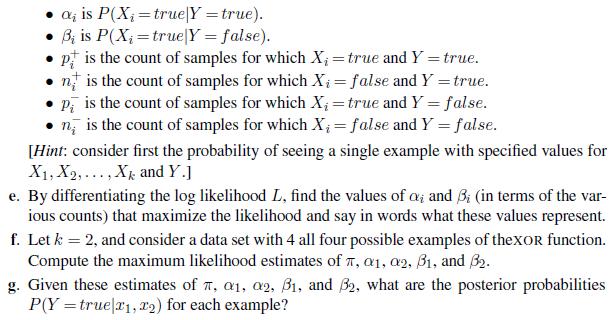

d. Write down the likelihood for the data including the attributes, using the following additional notation:

α_i is P(Xi = true | Y = true). β_i is P(Xi = true | Y = false). p_i^+ is the count of samples for which Xi = true and Y = true. n_i^+ is the count of samples for which Xi = false and Y = true. p_i^- is the count of samples for which Xi = true and Y = false. n_i^- is the count of samples for which Xi = false and Y = false.

[Hint: consider first the probability of seeing a single example with specified values for X1, X2, ..., Xk and Y.]

e. By differentiating the log likelihood L, find the values of α_i and β_i (in terms of the various counts) that maximize the likelihood, and say in words what these values represent.

f. Let k = 2, and consider a data set with all four possible examples of the XOR function. Compute the maximum likelihood estimates of π, α1, α2, β1, and β2.

g. Given these estimates of π, α1, α2, β1, and β2, what are the posterior probabilities P(Y = true | x1, x2) for each example?
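The closed-form parts (a), (b), (d), and (e) can be sketched as follows. This is one possible derivation, not an official solution. It assumes the samples are independent, writes α_i and β_i for P(Xi = true | Y = true) and P(Xi = true | Y = false), and writes p_i^+, n_i^+, p_i^-, n_i^- for the four counts defined in part (d).

```latex
\begin{align*}
% (a) likelihood of the labels alone
P(D \mid \pi) &= \pi^{p}\,(1-\pi)^{n} \\
% (b) log likelihood and its stationary point
L &= p \log \pi + n \log(1-\pi) \\
\frac{dL}{d\pi} &= \frac{p}{\pi} - \frac{n}{1-\pi} = 0
  \;\Longrightarrow\; \pi = \frac{p}{p+n} = \frac{p}{N} \\
% (d) likelihood including the attributes
P(D \mid \pi, \alpha, \beta) &= \pi^{p}\,(1-\pi)^{n}
  \prod_{i=1}^{k} \alpha_i^{\,p_i^{+}} (1-\alpha_i)^{\,n_i^{+}}
                  \beta_i^{\,p_i^{-}} (1-\beta_i)^{\,n_i^{-}} \\
% (e) setting each partial derivative of the log likelihood to zero
\alpha_i &= \frac{p_i^{+}}{p_i^{+} + n_i^{+}} = \frac{p_i^{+}}{p},
\qquad
\beta_i = \frac{p_i^{-}}{p_i^{-} + n_i^{-}} = \frac{p_i^{-}}{n}
\end{align*}
```

In words, each estimate is an observed relative frequency: π is the fraction of positive samples, α_i is the fraction of positive samples in which Xi is true, and β_i is the fraction of negative samples in which Xi is true.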

Step by Step Solution
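Parts (f) and (g) can be checked numerically. The sketch below is a minimal illustration rather than an official solution: the names `data`, `fit`, and `posterior` are made up for this example, the estimates are taken to be the relative frequencies that maximize the likelihood, and both classes are assumed to be non-empty.

```python
from fractions import Fraction
from itertools import product

# All four examples of XOR: ((x1, x2), y) with y = x1 XOR x2.
data = [((x1, x2), x1 != x2) for x1, x2 in product([False, True], repeat=2)]


def fit(data):
    """Maximum likelihood estimates (pi, alpha, beta) as relative frequencies."""
    k = len(data[0][0])
    pos = [x for x, y in data if y]      # samples with Y = true
    neg = [x for x, y in data if not y]  # samples with Y = false
    pi = Fraction(len(pos), len(data))
    # alpha[i] = fraction of positive samples with X_i = true
    alpha = [Fraction(sum(x[i] for x in pos), len(pos)) for i in range(k)]
    # beta[i] = fraction of negative samples with X_i = true
    beta = [Fraction(sum(x[i] for x in neg), len(neg)) for i in range(k)]
    return pi, alpha, beta


def posterior(x, pi, alpha, beta):
    """P(Y = true | x) under the conditional independence assumption."""
    joint_true, joint_false = pi, 1 - pi
    for xi, a, b in zip(x, alpha, beta):
        joint_true *= a if xi else 1 - a
        joint_false *= b if xi else 1 - b
    return joint_true / (joint_true + joint_false)


pi, alpha, beta = fit(data)
posteriors = {x: posterior(x, pi, alpha, beta) for x, _ in data}
print("pi =", pi, "alpha =", alpha, "beta =", beta)
for x, value in posteriors.items():
    print(x, "->", value)
```

For the XOR data every estimate comes out to 1/2, so every posterior P(Y = true | x1, x2) is also 1/2: under the conditional independence assumption the attributes carry no information about Y, which is why this model cannot represent XOR.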
