Consider the continuous-time Markov chain of Example 11.17: A chain with two states S = {0, 1}

Question:

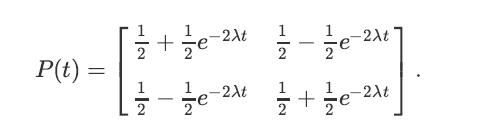

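Consider the continuous-time Markov chain of Example 11.17: a chain with two states S = {0, 1} and λ0 = λ1 = λ > 0. In that example, we found that the transition matrix for any t ≥ 0 is given by

$$P(t) = \begin{bmatrix} \frac{1}{2} + \frac{1}{2}e^{-2\lambda t} & \frac{1}{2} - \frac{1}{2}e^{-2\lambda t} \\ \frac{1}{2} - \frac{1}{2}e^{-2\lambda t} & \frac{1}{2} + \frac{1}{2}e^{-2\lambda t} \end{bmatrix}.$$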

Find the stationary distribution π for this chain.
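One way to find π is sketched below as a worked derivation (it is not taken from the book's own solution). A stationary distribution π = [π0, π1] must satisfy πP(t) = π for every t ≥ 0, together with π0 + π1 = 1. Using the entries of the transition matrix from Example 11.17, P00(t) = P11(t) = 1/2 + 1/2 e^{-2λt} and P01(t) = P10(t) = 1/2 - 1/2 e^{-2λt}, the first component of πP(t) = π reads

$$\pi_0\left(\frac{1}{2} + \frac{1}{2}e^{-2\lambda t}\right) + \pi_1\left(\frac{1}{2} - \frac{1}{2}e^{-2\lambda t}\right) = \pi_0 .$$

Substituting π1 = 1 - π0, the left-hand side becomes 1/2 + (π0 - 1/2)e^{-2λt}, so the equation simplifies to

$$\left(\pi_0 - \frac{1}{2}\right)\left(1 - e^{-2\lambda t}\right) = 0 .$$

Since λ > 0, e^{-2λt} < 1 for every t > 0, so π0 = 1/2, and therefore

$$\pi = \left[\frac{1}{2},\ \frac{1}{2}\right].$$

The second component yields the same equation by symmetry. Consistently, every entry of P(t) tends to 1/2 as t → ∞, so π is also the limiting distribution of the chain.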

Example 11.17

Consider a continuous-time Markov chain with two states S = {0, 1}. Assume the holding time parameters are given by λ0 = λ1 = λ > 0; that is, the time the chain spends in each state before jumping to the other state has an Exponential(λ) distribution.
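As a numerical cross-check, here is a minimal Python sketch that uses only the standard library. It assumes the closed-form transition matrix of this two-state chain, P00(t) = P11(t) = 1/2 + 1/2 e^(-2λt) and P01(t) = P10(t) = 1/2 - 1/2 e^(-2λt), and checks that the candidate π = [1/2, 1/2] satisfies πP(t) = π. It also simulates the alternating Exponential(λ) holding times described above and reports the long-run fraction of time spent in each state. The function names, λ = 2, the time horizon, and the seed are illustrative choices, not taken from the book.

```python
import math
import random


def transition_matrix(lam, t):
    """Closed-form P(t) for the symmetric two-state chain (lambda_0 = lambda_1 = lam)."""
    decay = math.exp(-2.0 * lam * t)
    stay = 0.5 + 0.5 * decay   # P_00(t) = P_11(t)
    move = 0.5 - 0.5 * decay   # P_01(t) = P_10(t)
    return [[stay, move], [move, stay]]


def row_times_matrix(pi, P):
    """Return the row vector pi @ P for a 2x2 matrix P (lists of lists)."""
    return [pi[0] * P[0][j] + pi[1] * P[1][j] for j in range(2)]


def simulate_time_fraction(lam, horizon, seed=0):
    """Estimate the fraction of time spent in states 0 and 1 up to `horizon`.

    The chain starts in state 0, stays for an Exponential(lam) holding time,
    then jumps to the other state, and so on.
    """
    rng = random.Random(seed)
    state, clock = 0, 0.0
    time_in = [0.0, 0.0]
    while clock < horizon:
        hold = rng.expovariate(lam)  # rate lam, mean 1/lam
        if clock + hold >= horizon:
            # Truncate the last holding time at the horizon and stop.
            time_in[state] += horizon - clock
            break
        time_in[state] += hold
        clock += hold
        state = 1 - state  # jump to the other state
    return [x / horizon for x in time_in]


if __name__ == "__main__":
    lam = 2.0
    pi = [0.5, 0.5]
    for t in (0.1, 1.0, 10.0):
        print(t, row_times_matrix(pi, transition_matrix(lam, t)))
    print(simulate_time_fraction(lam, horizon=10_000.0))
```

With these settings, each printed πP(t) should equal [0.5, 0.5] up to floating-point rounding, and both simulated time fractions should be close to 0.5. The simulation is only a sanity check of the long-run behavior, not a proof.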

Related book: Introduction to Probability, Statistics, and Random Processes, 1st Edition, by Hossein Pishro-Nik (ISBN: 9780990637202)
