
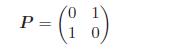

2. A Markov chain with transition probability matrix $P = (p_{ij})$ is called regular if, for some positive integer $n$, $p_{ij}^{(n)} > 0$ for all $i$ and $j$, where $p_{ij}^{(n)}$ denotes the $(i, j)$ entry of $P^n$. Let $\{X_n : n = 0, 1, \ldots\}$ be a Markov chain with state space $\{0, 1\}$ and transition probability matrix

$$P = \begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix}.$$

Is $\{X_n : n = 0, 1, \ldots\}$ regular? Why or why not?
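A short derivation answers the question. It assumes the matrix is $P = \begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix}$, i.e. the chain moves to the other state at every step; this is a reconstruction of the garbled matrix, not a value confirmed by the source. Squaring $P$ gives the identity, so the powers of $P$ alternate and never become strictly positive:

```latex
\begin{aligned}
P^2 &= \begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix}
       \begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix}
     = \begin{pmatrix} 0\cdot 0 + 1\cdot 1 & 0\cdot 1 + 1\cdot 0 \\
                       1\cdot 0 + 0\cdot 1 & 1\cdot 1 + 0\cdot 0 \end{pmatrix}
     = \begin{pmatrix} 1 & 0 \\ 0 & 1 \end{pmatrix} = I, \\[4pt]
P^n &= \begin{cases} P, & n \text{ odd}, \\ I, & n \text{ even}, \end{cases} \\[4pt]
p_{00}^{(n)} &= 0 \ \text{for odd } n, \qquad p_{01}^{(n)} = 0 \ \text{for even } n.
\end{aligned}
```

Every power $P^n$ therefore has a zero entry, so under this reading of $P$ the chain is not regular. Started from state 0, it sits in state 1 after every odd number of steps and in state 0 after every even number, so it is periodic with period 2.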

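The definition of regularity can also be checked mechanically by computing successive powers of $P$. The sketch below is a minimal pure-Python version; the helper names `mat_mult`, `first_positive_power` and `is_regular` are hypothetical and chosen for illustration. To keep the search finite it relies on Wielandt's theorem: if some power of a nonnegative $k \times k$ matrix is strictly positive, then $P^n > 0$ already holds for some $n \le (k-1)^2 + 1$. The swap matrix used at the end is the assumed reconstruction $P = \begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix}$.

```python
def mat_mult(A, B):
    """Multiply two square matrices given as lists of lists."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def first_positive_power(P):
    """Return the smallest n with every entry of P^n positive, or None.

    By Wielandt's theorem, a k x k nonnegative matrix that has a strictly
    positive power already has one with exponent at most (k - 1)**2 + 1,
    so checking n = 1 .. (k - 1)**2 + 1 is enough.
    """
    k = len(P)
    Pn = P  # holds P^n at the start of iteration n
    for n in range(1, (k - 1) ** 2 + 2):
        if all(x > 0 for row in Pn for x in row):
            return n
        Pn = mat_mult(Pn, P)
    return None


def is_regular(P):
    """True if some power of the transition matrix P has all entries positive."""
    return first_positive_power(P) is not None


# Assumed matrix for this exercise: the chain swaps states at every step.
swap = [[0, 1], [1, 0]]
print(mat_mult(swap, swap))  # [[1, 0], [0, 1]]
print(is_regular(swap))      # False
```

For contrast, a hypothetical chain with `P = [[0.5, 0.5], [1.0, 0.0]]` has a zero in $P$ itself, but $P^2 = \begin{pmatrix} 0.75 & 0.25 \\ 0.5 & 0.5 \end{pmatrix}$ is strictly positive, so `first_positive_power` returns `2` and that chain is regular.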