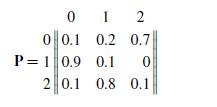


Question:

3.1.1 A Markov chain $X_0, X_1, \ldots$ on states 0, 1, 2 has the transition probability matrix

and initial distribution $p_0 = \Pr\{X_0 = 0\} = 0.3$, $p_1 = \Pr\{X_0 = 1\} = 0.4$, and $p_2 = \Pr\{X_0 = 2\} = 0.3$. Determine $\Pr\{X_0 = 0, X_1 = 1, X_2 = 2\}$.
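By the Markov property, the joint probability of a path factors into the initial probability times one-step transition probabilities: $\Pr\{X_0 = 0, X_1 = 1, X_2 = 2\} = p_0 P_{01} P_{12} = 0.3\, P_{01} P_{12}$, where $P_{ij}$ is the entry in row $i$, column $j$ of the transition matrix. The Python sketch below computes this product for any path. The function name `path_probability` and the matrix `P_example` are hypothetical; `P_example` is a placeholder, not the matrix from the problem, so the problem's actual matrix would need to be substituted to get its answer.

```python
from typing import Sequence


def path_probability(
    initial: Sequence[float],
    P: Sequence[Sequence[float]],
    path: Sequence[int],
) -> float:
    """Return Pr{X0 = path[0], X1 = path[1], ..., Xn = path[n]}.

    Uses the Markov property: the initial probability of the first state
    times the one-step transition probability for each consecutive pair.
    """
    prob = initial[path[0]]
    for i, j in zip(path, path[1:]):
        prob *= P[i][j]
    return prob


# Initial distribution p0, p1, p2 from the problem statement.
p = [0.3, 0.4, 0.3]

# HYPOTHETICAL transition matrix for illustration only; it is NOT the
# matrix from the problem. Each row sums to 1.
P_example = [
    [0.5, 0.2, 0.3],
    [0.1, 0.6, 0.3],
    [0.4, 0.4, 0.2],
]

# With the placeholder matrix this evaluates p0 * P[0][1] * P[1][2].
print(path_probability(p, P_example, [0, 1, 2]))
```

Note that if the problem's matrix has $P_{01} = 0$ or $P_{12} = 0$, the requested probability is 0 regardless of the initial distribution.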


Related book: *An Introduction to Stochastic Modeling*, 4th Edition, by Mark A. Pinsky and Samuel Karlin (ISBN: 9780233814162)
