Answered step by step

Verified Expert Solution

Question

1 Approved Answer

3 . The switched interconnect increases the performance of a snooping cache - coherent multiprocessor by allowing multiple requests to be overlapped. Because the controllers

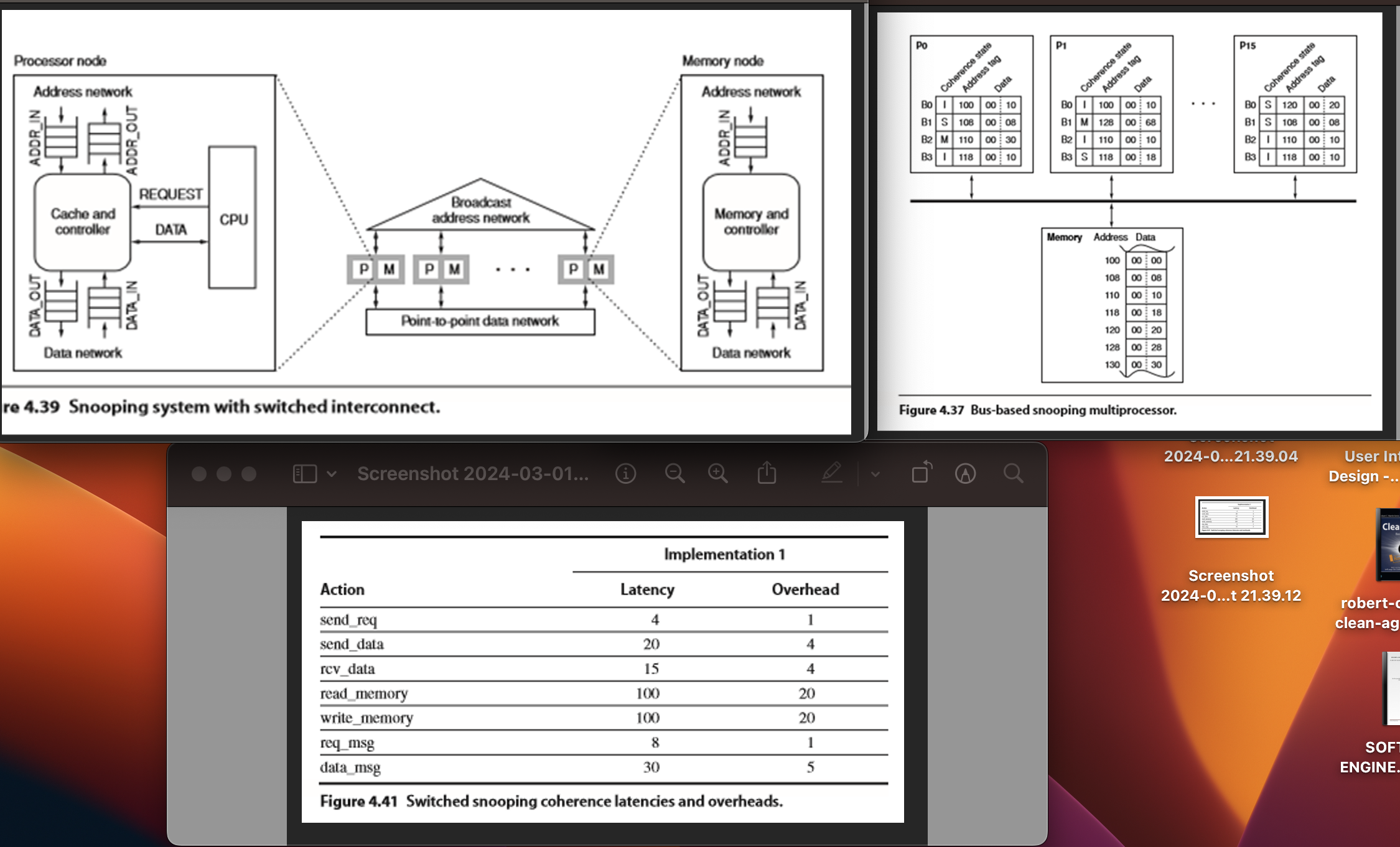

The switched interconnect increases the performance of a snooping cachecoherent multiprocessor by allowing multiple requests to be overlapped. Because the controllers and the networks are pipelined, there is a difference between an operation's latency ie cycles to complete the operation and overhead ie cycles until the next operation can begin For the multiprocessor illustrated in Figure assume the following latencies and overheads:

CPU read and write hits generate no stall cycles.

A CPU read or write that generates a replacement event issues the corresponding GetShared or GetModified message before the PutModified message eg using a writeback buffer

A cache controller event that sends a request message eg GetShared has latency send reg and blocks the controller from processing other events for send req cycles.

A cache controller event that reads the cache and sends a data message has latency Lend data and overhead Osend data cycles.

A cache controller event that receives a data message and updates the cache has latency Lrcv data and overhead Orc data.

A memory controller has latency Lead memory and overhead Oread memory cycles to read memory and send a data message

A memory controller has latency write memory and overhead Owrite memory cycles to write a data message to memory.

In the absence of contention, a request message has network latency reg msg and overhead Oregmsg cycles.

In the absence of contention, a data message has network latency Ldata msg and overhead Odata msg cycles.

Consider an implementation with the performance characteristics summarized in Figure For the following sequences of operations and the cache contents from Figure and the implementation parameters in Figure how many stall cycles does each processor incur for each memory request? Similarly, for how many cycles are the different controllers occupied?

For simplicity, assume each processor can have only one memory operation outstanding at a time, if two nodes make requests in the same cycle and the one listed first "wins," the later node must stall for the request message overhead, and all requests map to the same memory controller.

a PO: read

b PO: write

c P: write

d P: read

e PO: read

P: read

f PO: read

P: write

g PO: write

P: write

Step by Step Solution

There are 3 Steps involved in it

Step: 1

Get Instant Access to Expert-Tailored Solutions

See step-by-step solutions with expert insights and AI powered tools for academic success

Step: 2

Step: 3

Ace Your Homework with AI

Get the answers you need in no time with our AI-driven, step-by-step assistance

Get Started