
Question

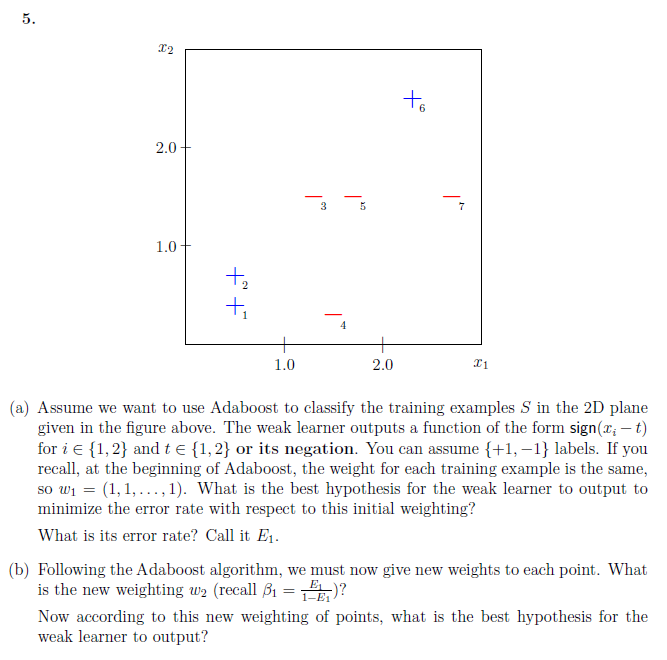

5. [Figure: training examples $S$ plotted in the $(x_1, x_2)$ plane; both axes have ticks at 1.0 and 2.0.]

(a) Assume we want to use AdaBoost to classify the training examples $S$ in the 2D plane given in the figure above. The weak learner outputs a function of the form $\operatorname{sign}(x_i - t)$ for $i \in \{1, 2\}$ and $t \in \{1, 2\}$, or its negation. You can assume $\{+1, -1\}$ labels. Recall that at the beginning of AdaBoost the weight for each training example is the same, so $w = (1, 1, \ldots, 1)$. What is the best hypothesis for the weak learner to output to minimize the error rate with respect to this initial weighting? What is its error rate? Call it $E$.

(b) Following the AdaBoost algorithm, we must now give new weights to each point. What is the new weighting $w$ (recall $[\ldots] = \frac{[\ldots]}{1 - E}$)? According to this new weighting of points, what is the best hypothesis for the weak learner to output?
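The procedure the question asks for can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the expert's solution. The points in `POINTS` and `LABELS` are hypothetical toy data, since the coordinates in the figure did not survive. The reweighting rule, which scales correctly classified points by $\beta = E / (1 - E)$, is one common AdaBoost formulation and is assumed here because the formula in the question is garbled. The names `best_stump` and `reweight` are made up for this sketch.

```python
from itertools import product

# Hypothetical toy data: NOT the points from the figure in the question.
POINTS = [(0.5, 0.5), (0.5, 1.5), (1.5, 0.5), (1.5, 1.5), (2.5, 1.5), (2.5, 2.5)]
LABELS = [-1, -1, +1, +1, +1, -1]


def sign(v):
    # Assumed convention: sign(0) = +1.
    return 1 if v >= 0 else -1


def hypotheses():
    """Yield (name, h) for sign(x_i - t) and its negation, i in {1, 2}, t in {1, 2}."""
    for i, t, s in product((0, 1), (1, 2), (+1, -1)):
        name = f"{'' if s == 1 else '-'}sign(x{i + 1} - {t})"
        # Default arguments freeze i, t, s for each lambda.
        yield name, (lambda x, i=i, t=t, s=s: s * sign(x[i] - t))


def weighted_error(h, points, labels, weights):
    """Weight of the misclassified points divided by the total weight."""
    wrong = sum(w for x, y, w in zip(points, labels, weights) if h(x) != y)
    return wrong / sum(weights)


def best_stump(points, labels, weights):
    """Return (error, name, h) for the hypothesis with the lowest weighted error."""
    candidates = (
        (weighted_error(h, points, labels, weights), name, h)
        for name, h in hypotheses()
    )
    return min(candidates, key=lambda c: c[0])


def reweight(h, points, labels, weights, err):
    """Assumed update: multiply correctly classified points by beta = err / (1 - err).

    Requires 0 <= err < 1; misclassified points keep their weight.
    """
    beta = err / (1 - err)
    return [w * beta if h(x) == y else w for x, y, w in zip(points, labels, weights)]


if __name__ == "__main__":
    w = [1.0] * len(POINTS)                      # uniform initial weighting
    err, name, h = best_stump(POINTS, LABELS, w)
    print("round 1:", name, err)
    w = reweight(h, POINTS, LABELS, w, err)      # emphasize the mistakes
    err, name, h = best_stump(POINTS, LABELS, w)
    print("round 2:", name, err)
```

Because the hypothesis class has only eight members (two coordinates, two thresholds, two signs), exhaustive enumeration is enough, and the same loop answers both part (a) and the second half of part (b) once the weights change.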

Step by Step Solution

There are 3 Steps involved in it

Step: 1

(a) The best hypothesis for the weak learner to output, minimizing the error rate with respect to the initial weighting $w = (1, 1, \ldots, 1)$, is the hypothesis that co...

Step: 2

Step: 3
