Question

Building an Adaboost Classifier to classify MNIST digits 3 and 8 (training and validation set of threes and eights from the MNIST dataset it's at

Building an Adaboost Classifier to classify MNIST digits 3 and 8 (training and validation set of threes and eights from the MNIST dataset it's at the bottom of the page).

TO DO - COMPLETE DE CODE BELOW. Follow the instructions exactly to create an adaboost class:

class AdaBoost: def __init__(self, n_learners=20, base=DecisionTreeClassifier(max_depth=3), random_state=1234): """ Create a new adaboost classifier. Args: N (int, optional): Number of weak learners in classifier. base (BaseEstimator, optional): Your general weak learner random_state (int, optional): set random generator. needed for unit testing.

Attributes: base (estimator): Your general weak learner n_learners (int): Number of weak learners in classifier. alpha (ndarray): Coefficients on weak learners. learners (list): List of weak learner instances. """ np.random.seed(random_state) self.n_learners = n_learners self.base = base self.alpha = np.zeros(self.n_learners) self.learners = [] def fit(self, X_train, y_train): """ Train AdaBoost classifier on data. Sets alphas and learners. Args: X_train (ndarray): [n_samples x n_features] ndarray of training data y_train (ndarray): [n_samples] ndarray of data """

# ================================================================= # TODO

# Note: You can create and train a new instantiation # of your sklearn decision tree as follows # you don't have to use sklearn's fit function, # but it is probably the easiest way

# w = np.ones(len(y_train)) # h = clone(self.base) # h.fit(X_train, y_train, sample_weight=w) # ================================================================= # complete your code here return self def error_rate(self, y_true, y_pred, weights): # ================================================================= # TODO

# Implement the weighted error rate # ================================================================= # complete your code here def predict(self, X): """ Adaboost prediction for new data X. Args: X (ndarray): [n_samples x n_features] ndarray of data Returns: yhat (ndarray): [n_samples] ndarray of predicted labels {-1,1} """

# ================================================================= # TODO # ================================================================= yhat = np.zeros(X.shape[0]) # complete your code here def score(self, X, y): """ Computes prediction accuracy of classifier. Args: X (ndarray): [n_samples x n_features] ndarray of data y (ndarray): [n_samples] ndarray of true labels Returns: Prediction accuracy (between 0.0 and 1.0). """ # complete your code here def staged_score(self, X, y): """ Computes the ensemble score after each iteration of boosting for monitoring purposes, such as to determine the score on a test set after each boost. Args: X (ndarray): [n_samples x n_features] ndarray of data y (ndarray): [n_samples] ndarray of true labels Returns: scores (ndarary): [n_learners] ndarray of scores """

scores = [] # complete your code here return np.array(scores)

HERE'S THE CODE with the training and validation set of threes and eights from the MNIST dataset to be classified:

class ThreesandEights: """ Class to store MNIST 3s and 8s data """

def __init__(self, location):

import pickle, gzip

# Load the dataset f = gzip.open(location, 'rb')

# Split the data set x_train, y_train, x_test, y_test = pickle.load(f) # Extract only 3's and 8's for training set self.x_train = x_train[np.logical_or(y_train== 3, y_train == 8), :] self.y_train = y_train[np.logical_or(y_train== 3, y_train == 8)] self.y_train = np.array([1 if y == 8 else -1 for y in self.y_train]) # Shuffle the training data shuff = np.arange(self.x_train.shape[0]) np.random.shuffle(shuff) self.x_train = self.x_train[shuff,:] self.y_train = self.y_train[shuff]

# Extract only 3's and 8's for validation set self.x_test = x_test[np.logical_or(y_test== 3, y_test == 8), :] self.y_test = y_test[np.logical_or(y_test== 3, y_test == 8)] self.y_test = np.array([1 if y == 8 else -1 for y in self.y_test]) f.close()

def view_digit(ex, label=None, feature=None): """ function to plot digit examples """ if label: print("true label: {:d}".format(label)) img = ex.reshape(21,21) col = np.dstack((img, img, img)) if feature is not None: col[feature[0]//21, feature[0]%21, :] = [1, 0, 0] plt.imshow(col) plt.xticks([]), plt.yticks([]) data = ThreesandEights("data/mnist21x21_3789.pklz")

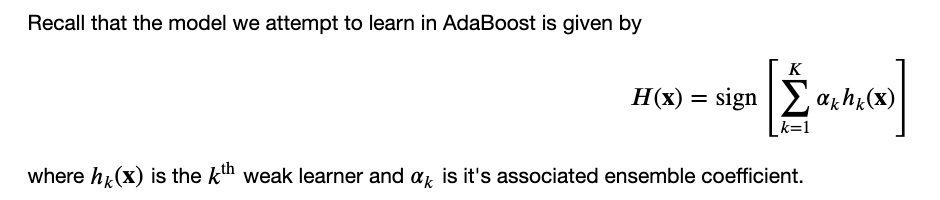

Recall that the model we attempt to learn in AdaBoost is given by H(x)=sign[k=1Kkhk(x)] where hk(x) is the kth weak learner and k is it's associated ensemble coefficientStep by Step Solution

There are 3 Steps involved in it

Step: 1

Get Instant Access to Expert-Tailored Solutions

See step-by-step solutions with expert insights and AI powered tools for academic success

Step: 2

Step: 3

Ace Your Homework with AI

Get the answers you need in no time with our AI-driven, step-by-step assistance

Get Started