Answered step by step

Verified Expert Solution

Question

1 Approved Answer

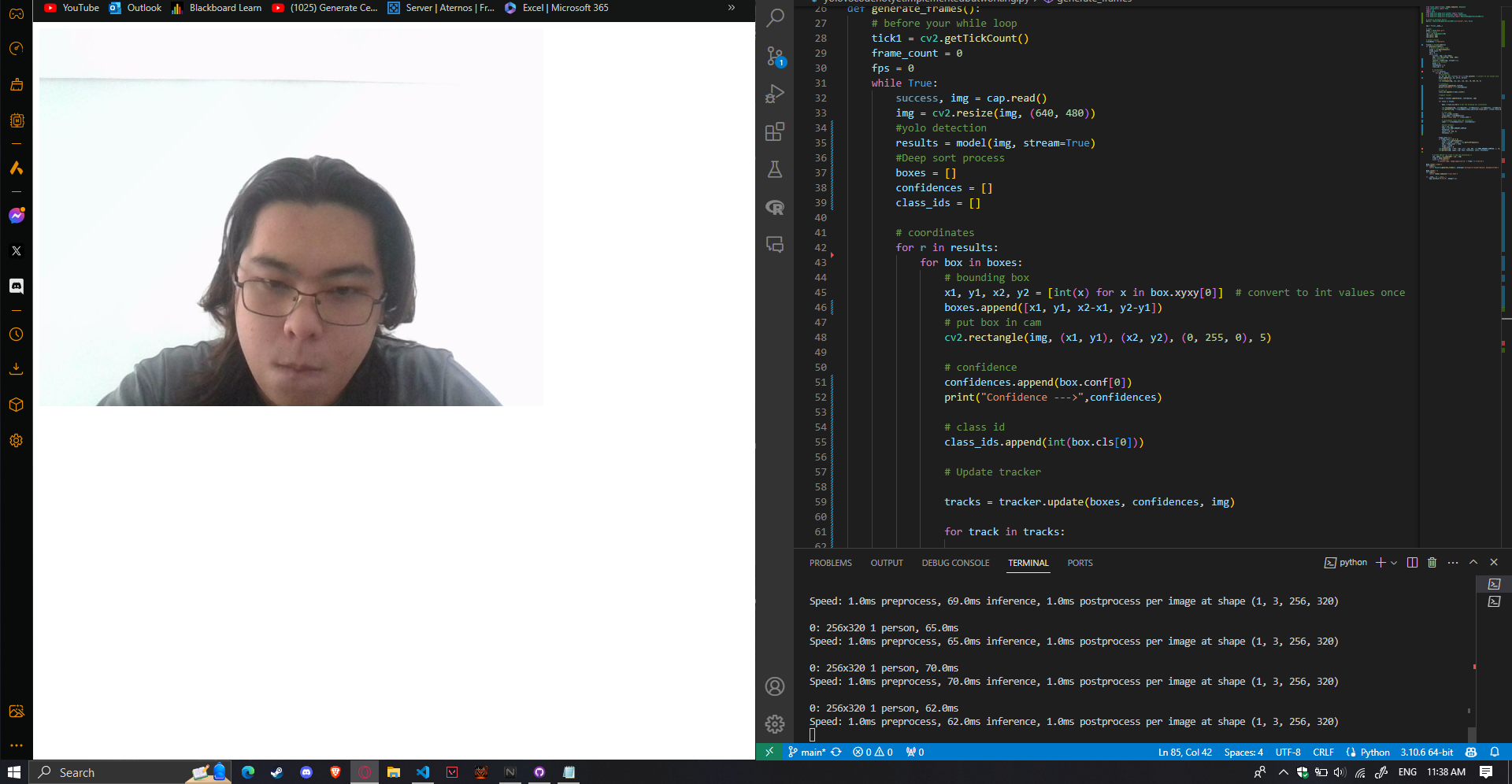

Can you please help me how to fix the bounding box based on the picture below using the flask code? Please provide the code. Thanks

Can you please help me how to fix the bounding box based on the picture below using the flask code? Please provide the code. Thanks

Here is the code.

import cv

import math

from deepsort import buildtracker # Assuming DeepSORT is installed and this is the correct import

app Flaskname

# model

model YOLObestpt

# start webcam

cap cvVideoCapture

cap.set

cap.set

# object classes

classNames person

# Initialize DeepSORT tracker

tracker buildtracker

def generateframes:

framecount

fps

tick cvgetTickCount

while True:

success, img cap.read

img cvresizeimg

# Detect objects using YOLO

results model.trackimg streamTrue, persistTrue, tracker"bytetrack.yaml"

# Process detections for DeepSORT

boxes

confidences

classids

for r in results:

for box in rboxes:

x y x yintx for x in box.xyxy

boxes.appendx y xx yy # Format: x y w h

confidences.appendboxconf

classids.appendintboxcls

# Update tracker

tracks tracker.updateboxes confidences, img

for track in tracks:

bbox track.totlbr # Get the bounding box coordinates

cvrectangleimgintbbox intbboxintbbox intbbox

cvputTextimg fclassNamesclassidstracktrackid: tracktrackid

intbbox intbbox cvFONTHERSHEYSIMPLEX,

# Remaining code for FPS calculation and frame generation

#

@app.routevideo

def video:

return Responsegenerateframes mimetype'multipartxmixedreplace; boundaryframe'

@app.route

def index:

return rendertemplatetrialhtml

if name main:

app.runhost debugTrue

# before your while loop

tick cv getTickCount

framecount theta

fps

while True:

success, img cap.read

img cvresizeimg

#yolo detection

results model img streamTrue

#Deep sort process

boxes

confidences

classids

# coordinates

for r in results:

for box in boxes:

# bounding box

for x in box.xyxy # convert to int values once

boxes.append xyxxyy

# put box in cam

cv rectangleimgx yx y

# confidence

confidences append box conftheta

printConfidence confidences

# class id

classids.appendintboxcls

# Update tracker

tracks tracker. updateboxes confidences, img

for track in tracks:

Speed: ms preprocess, ms inference, ms postprocess per image at shape

: times person, ms

Speed: ms preprocess, ms inference, Oms postprocess per image at shape

: times person, ms

Speed: ms preprocess, ms inference, Oms postprocess per image at shape

: times person, ms

Speed: ms preprocess, ms inference, Oms postprocess per image at shape # before your while loop

tick cv getTickCount

framecount

fps

while True:

success, img cap.read

img resize

#yolo detection

results model img streamTrue

#Deep sort process

boxes

confidences

classids

# coordinates

for in results:

for box in boxes:

# bounding box

for in box.xyxy # convert to int values once

boxes.append

# put box in cam

cv rectangleimgx yx y

# confidence

confidences append box conf

printConfidence confidences

# class id

classids.appendintboxcls

# Update tracker

tracks tracker. updateboxes confidences, img

for track in tracks:

Speed: ms preprocess, ms inference, ms postprocess per image at shape

: person,

Speed: preprocess, inference, Oms postprocess per image at shape

: person,

Speed: ms preprocess, inference, Oms postprocess per image at shape

: person,

Step by Step Solution

There are 3 Steps involved in it

Step: 1

Get Instant Access to Expert-Tailored Solutions

See step-by-step solutions with expert insights and AI powered tools for academic success

Step: 2

Step: 3

Ace Your Homework with AI

Get the answers you need in no time with our AI-driven, step-by-step assistance

Get Started