Question

Classification question ( Segmentation via entropy information gain) Use the example on pg 48 of our Data Science for Business text, Fig. 3.2 A set

Classification question ( Segmentation via entropy information gain)

Classification question ( Segmentation via entropy information gain)

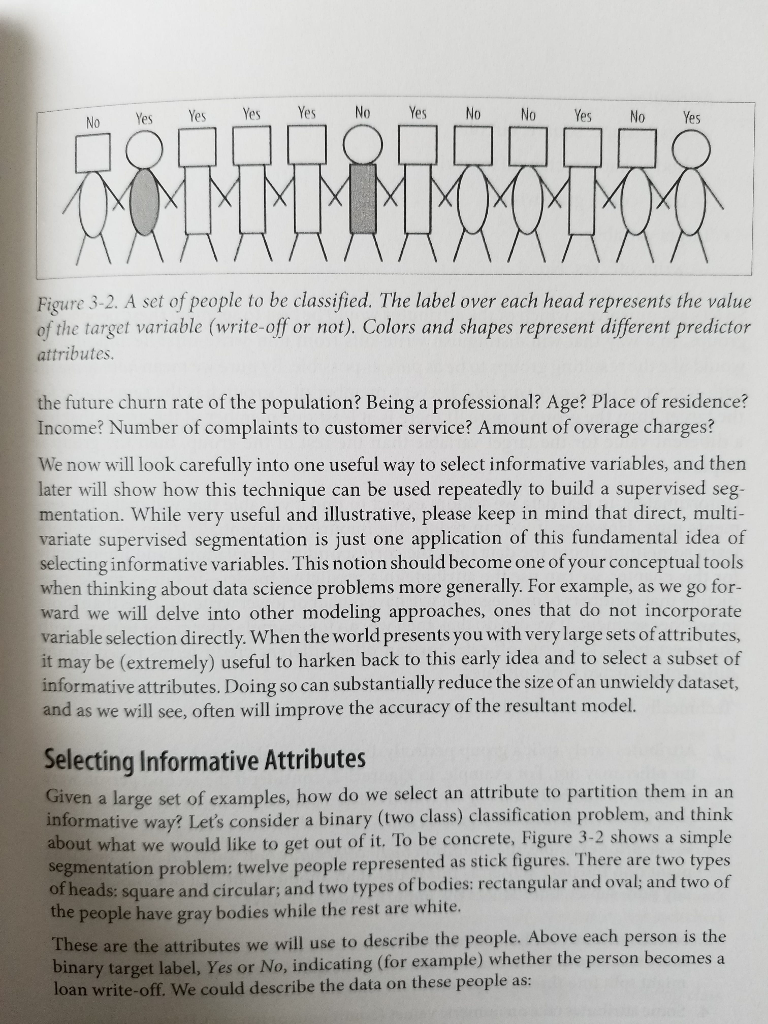

Use the example on pg 48 of our Data Science for Business text, Fig. 3.2 A set of people to be classified.

Use Scala to do your calculations: ( see notes on doing this for the first two segmentations)

a. Calculate the overall entropy of this set

b. Calculate the IG information gain by splitting on head shape

c. Calculate the IG information gain by splitting on body shape

d. Calculate the IG information gain by splitting on color.

d. Of these three, which is the best attribute to do segmentation with, why ?

Yes Yes Yes No Yes No No Yes No Yes No Figure 3-2. A set of people to be classified. The label over each head represents the value f the target variable (write-off or not). Colors and shapes represent different predictor attributes the future churn rate of the population? Being a professional? Age? Place of residence? Income? Number of complaints to customer service? Amount of overage charges? now will look carefully into one useful way to select informative variables, and then ater will show how this technique can be used repeatedly to build a supervised seg mentation. While very useful and illustrative, please keep in mind that direct, multi variate supervised segmentation is just one application of this fundamental idea of selecting informative variables. This notion should become one of your conceptual tools hen thinking about data science problems more generally. For example, as we go for ward we will delve into other modeling approaches, ones that do not incorporate ariable selection directly. When the world presents you with very large sets of attributes, may be (extremely) useful to harken back to this early idea and to select a subset of informative attributes. Doing so can substantially reduce the size ofan unwieldy dataset, and as we will see, often will improve the accuracy of the resultant model. Selecting Informative Attributes Given a large set of examples, how do we select an attribute to partition them in an informative way? Let's consider a binar (two class) classification problem, and think about what we would like to get out of it. To be concrete, Figure 3-2 shows a simple segmentation problem: of heads: square and circular; and two types of bodies: rectangular and oval; and two of the people have gray bodies while the rest are white twelve people represented as stick figures. There are two types will use to describe the people. Above each person is the ese are the attributes we nary target label, Yes or No, indicating (for example) whether the person beco oan write-off. We could describe the data on these people as biStep by Step Solution

There are 3 Steps involved in it

Step: 1

Get Instant Access to Expert-Tailored Solutions

See step-by-step solutions with expert insights and AI powered tools for academic success

Step: 2

Step: 3

Ace Your Homework with AI

Get the answers you need in no time with our AI-driven, step-by-step assistance

Get Started