Answered step by step

Verified Expert Solution

Question

1 Approved Answer

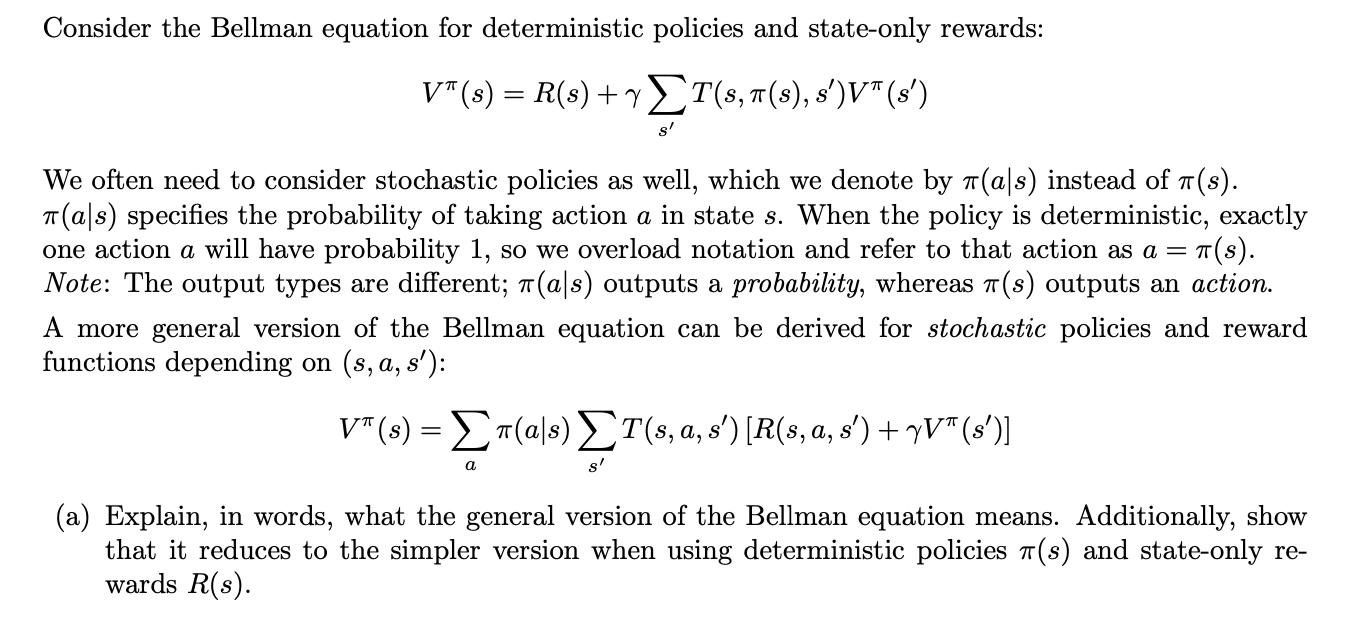

Consider the Bellman equation for deterministic policies and state-only rewards: VT (s) = R(s) + yT(s, (s), s')V (s') s' We often need to

Consider the Bellman equation for deterministic policies and state-only rewards: VT (s) = R(s) + yT(s, (s), s')V (s') s' We often need to consider stochastic policies as well, which we denote by (als) instead of (s). T(als) specifies the probability of taking action a in state s. When the policy is deterministic, exactly one action a will have probability 1, so we overload notation and refer to that action as a = (s). Note: The output types are different; 7(als) outputs a probability, whereas T(s) outputs an action. A more general version of the Bellman equation can be derived for stochastic policies and reward functions depending on (s, a, s'): V" (s) = (a|s) T(s, a, s') [R(s, a, s') + yV (s')] a (a) Explain, in words, what the general version of the Bellman equation means. Additionally, show that it reduces to the simpler version when using deterministic policies 7(s) and state-only re- wards R(s).

Step by Step Solution

★★★★★

3.35 Rating (158 Votes )

There are 3 Steps involved in it

Step: 1

This is boundlessness We can address this with the assistance of the rebate factor previously presen...

Get Instant Access to Expert-Tailored Solutions

See step-by-step solutions with expert insights and AI powered tools for academic success

Step: 2

Step: 3

Ace Your Homework with AI

Get the answers you need in no time with our AI-driven, step-by-step assistance

Get Started