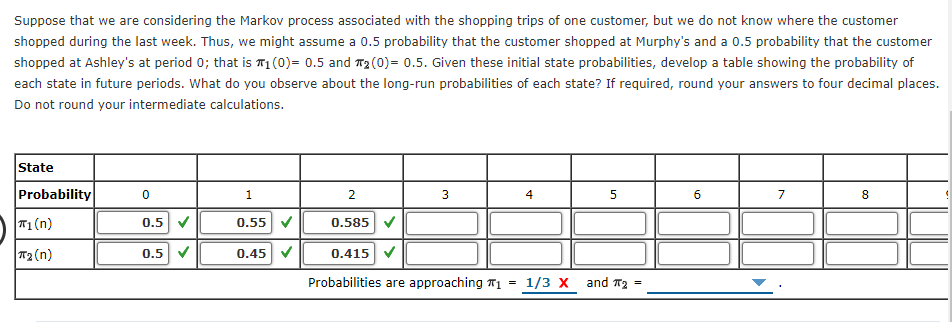

I'm having real trouble figuring this out. I understand that there is a matrix with the entries [0.9 0.2 and 0.1 0.8]. The first two columns of the table make sense, but I'm not sure how they are getting the values in column three. I'm getting 0.605 and 0.405 for column three, which is incorrect. I am using the same method I used to get column two (i.e. 0.55 × 0.9 and 0.55 × 0.1). I'm not sure why that doesn't work.
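One possible way to write out the column-three step is sketched below. It assumes the transition table is read with rows as the current week and columns as the next week, and that column two holds 0.55 and 0.45 (which follows from the 0.5 / 0.5 starting point). The symbols $\pi_1(n)$ and $\pi_2(n)$ stand for the probabilities of Murphy's and Ashley's in period $n$.

$$
\begin{aligned}
\pi_1(1) &= 0.5(0.9) + 0.5(0.2) = 0.55, &\quad \pi_2(1) &= 0.5(0.1) + 0.5(0.8) = 0.45,\\
\pi_1(2) &= 0.55(0.9) + 0.45(0.2) = 0.585, &\quad \pi_2(2) &= 0.55(0.1) + 0.45(0.8) = 0.415.
\end{aligned}
$$

The products 0.55 × 0.9 and 0.55 × 0.1 only account for customers who were at Murphy's in period 1. The 0.45 share at Ashley's also contributes (0.45 × 0.2 switch to Murphy's, 0.45 × 0.8 stay), and each column should sum to 1.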

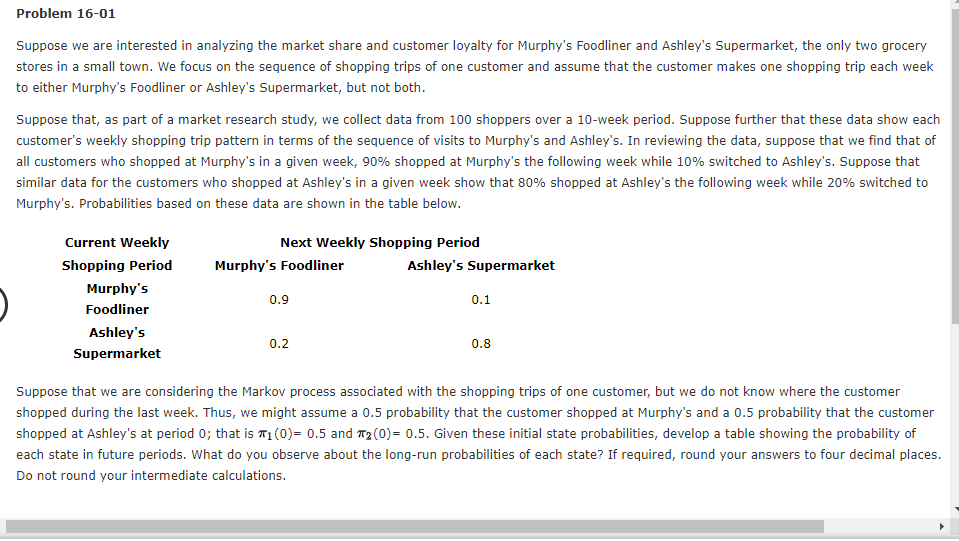

Problem 1601

Suppose we are interested in analyzing the market share and customer loyalty for Murphy's Foodliner and Ashley's Supermarket, the only two grocery stores in a small town. We focus on the sequence of shopping trips of one customer and assume that the customer makes one shopping trip each week to either Murphy's Foodliner or Ashley's Supermarket, but not both. Suppose that, as part of a market research study, we collect data from 100 shoppers over a 10-week period. Suppose further that these data show each customer's weekly shopping trip pattern in terms of the sequence of visits to Murphy's and Ashley's. In reviewing the data, suppose that we find that of all customers who shopped at Murphy's in a given week, 90% shopped at Murphy's the following week while 10% switched to Ashley's. Suppose that similar data for the customers who shopped at Ashley's in a given week show that 80% shopped at Ashley's the following week while 20% switched to Murphy's. Probabilities based on these data are shown in the table below.

| Current Weekly Shopping Period | Next Weekly Shopping Period: Murphy's Foodliner | Next Weekly Shopping Period: Ashley's Supermarket |
|---|---|---|
| Murphy's Foodliner | 0.9 | 0.1 |
| Ashley's Supermarket | 0.2 | 0.8 |

Suppose that we are considering the Markov process associated with the shopping trips of one customer, but we do not know where the customer shopped during the last week. Thus, we might assume a 0.5 probability that the customer shopped at Murphy's and a 0.5 probability that the customer shopped at Ashley's at period 0; that is, $\pi_1(0) = 0.5$ and $\pi_2(0) = 0.5$. Given these initial state probabilities, develop a table showing the probability of each state in future periods. What do you observe about the long-run probabilities of each state? If required, round your answers to four decimal places. Do not round your intermediate calculations.
[Answer table: probability of each state by period; entries not recoverable.]

Probabilities are approaching $\pi_1 = 1/3$ ✗ and $\pi_2 = $ [illegible].
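A minimal Python sketch of how the requested table could be generated is given below. It assumes the row-vector convention (rows of the matrix are the current week, columns are the next week). The names `step` and `state_probabilities` are made up for illustration and are not from the problem.

```python
def step(pi, P):
    """Advance one period: new_pi[j] = sum over i of pi[i] * P[i][j]."""
    n = len(pi)
    return [sum(pi[i] * P[i][j] for i in range(n)) for j in range(n)]


def state_probabilities(pi0, P, periods):
    """Return a list of state vectors for periods 0..periods."""
    table = [list(pi0)]
    for _ in range(periods):
        table.append(step(table[-1], P))
    return table


# Transition probabilities from the problem statement:
# row 0 = currently at Murphy's, row 1 = currently at Ashley's.
P = [[0.9, 0.1],
     [0.2, 0.8]]
pi0 = [0.5, 0.5]  # assumed starting probabilities at period 0

table = state_probabilities(pi0, P, 50)

# Round only for display; intermediate values stay unrounded.
for n in (0, 1, 2, 3, 4, 5, 10, 50):
    murphys, ashleys = table[n]
    print(n, round(murphys, 4), round(ashleys, 4))
```

Under these assumptions, period 2 comes out as 0.585 and 0.415, and the values settle toward 2/3 for Murphy's and 1/3 for Ashley's as the period number grows.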