Question


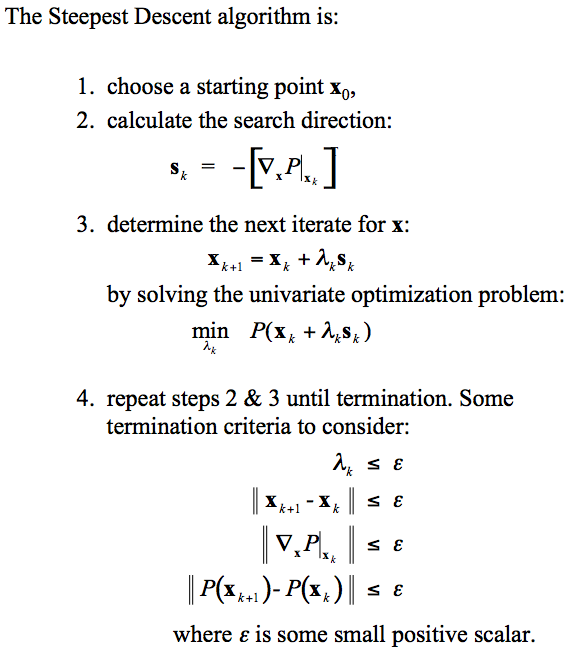

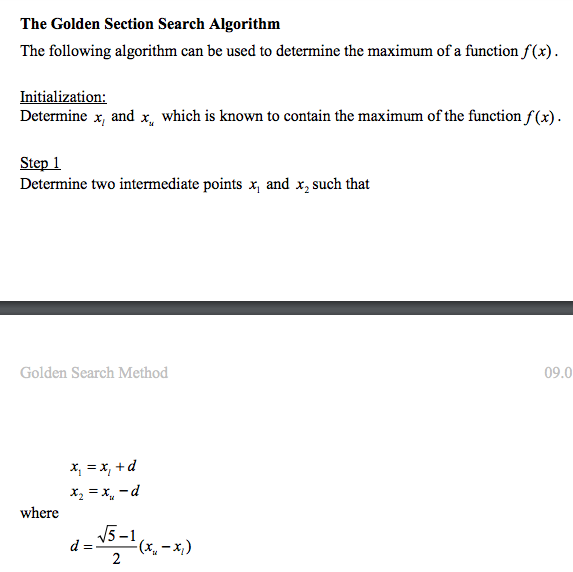

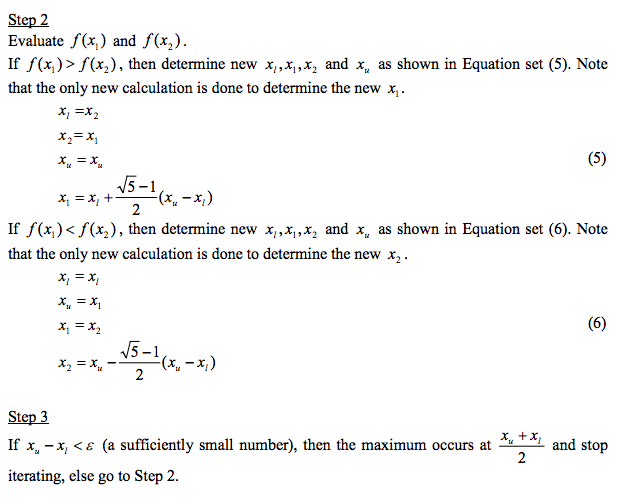

I am trying to write a program to carry out a steepest descent algorithm incorporating Golden Section Search to find the step size alpha (a), and I would like help correcting my code so that it works. I have posted pictures which should help show what I am trying to accomplish, along with my own explanation. Golden Section Search is used to solve for the step size lambda (the same thing as my alpha, just labeled differently). "P" is the multivariate function we are trying to minimize, so we substitute `x_1 = initial_guess_1 - lambda*gradient_x` and `x_2 = initial_guess_2 - lambda*gradient_y`, where `gradient_x` and `gradient_y` are the components of the gradient evaluated at the initial guess. This turns P into a univariate function of lambda, which is then minimized using Golden Section Search.
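To make the substitution concrete, here is a minimal sketch that assumes $P(x_1, x_2) = 100(x_2 - x_1^2)^2 + (1 - x_1)^2$, the function whose partial derivatives match `gradient_x` and `gradient_y` in the code (the question never writes P out). The class and method names `LineFunction`, `p` and `phi` are hypothetical. Computing each moved coordinate once and then squaring it sidesteps a precedence problem in the question's `f_a_0` line: Java reads `initial_guess_1 - (gradient_x * a_0)*initial_guess_1 - (gradient_x * a_0)` as `initial_guess_1 - ((gradient_x * a_0)*initial_guess_1) - (gradient_x * a_0)`, not as the square of `initial_guess_1 - gradient_x*a_0`, and `1-initial_guess_1 - (gradient_x * a_0)` is `1 - x - g*lambda` rather than `1 - (x - g*lambda)`.

```java
/** Sketch: P restricted to the steepest-descent line through a point (hypothetical names). */
class LineFunction {

    // Assumed objective, inferred from gradient_x and gradient_y in the question:
    // P(x1, x2) = 100 (x2 - x1^2)^2 + (1 - x1)^2
    static double p(double x1, double x2) {
        double r = x2 - x1 * x1;   // x2 - x1^2
        double s = 1 - x1;         // 1 - x1
        return 100 * r * r + s * s;
    }

    // phi(lambda) = P(x1 - lambda*gx, x2 - lambda*gy), a function of lambda alone
    static double phi(double lambda, double x1, double x2, double gx, double gy) {
        double newX1 = x1 - lambda * gx;   // first coordinate after the step
        double newX2 = x2 - lambda * gy;   // second coordinate after the step
        return p(newX1, newX2);            // square the moved coordinate, not a partial product
    }

    public static void main(String[] args) {
        double x1 = 1, x2 = 0;                                  // initial guess from the question
        double gx = 400 * x1 * (x1 * x1 - x2) + 2 * (x1 - 1);   // gradient_x, 400 here
        double gy = 200 * (x2 - x1 * x1);                       // gradient_y, -200 here
        System.out.println(phi(0.0, x1, x2, gx, gy));           // prints 100.0, i.e. P(1, 0)
        System.out.println(phi(0.001, x1, x2, gx, gy));         // P after a trial step lambda = 0.001
    }
}
```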

Modify the code below so that the following happens until the given end condition is satisfied:

1) The components of the generic gradient are defined

2) An initial guess is made (its components are labeled `initial_guess_1` and `initial_guess_2`)

3) Evaluate the generic gradient at the initial guess

4) Use the GoldenSectionSearch method to compute the step size alpha (a). The "if, else" statement in lines 50-63 can be considered separate from the rest of the GoldenSectionSearch method: it is just my attempt at getting GoldenSectionSearch to reassign initial_guess_1 and initial_guess_2 and call itself until the terminating condition tested on line 50 is satisfied. Steps 2-4 are to be repeated until the terminating condition is satisfied. Once it is, the final values of x_1 and x_2 are to be printed on the same line.
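The steps above can be put together as a single loop. This is a minimal sketch, not the asker's code, under these assumptions: P is $100(x_2 - x_1^2)^2 + (1 - x_1)^2$ (inferred from `gradient_x` and `gradient_y`), the starting point is (1, 0) and the step size is searched on [0, 10000] as in the question, and iteration stops when a step moves the point by less than a hypothetical tolerance `STEP_TOL` or after a hypothetical cap `MAX_ITER`. The class, method and constant names are illustrative. The recursion is replaced by a `for` loop, and a step that does not lower P ends the loop, because golden-section search assumes P is unimodal along the search line on the bracket, which a bracket this wide does not ensure.

```java
import java.util.function.DoubleUnaryOperator;

/** Sketch: steepest descent with a golden-section line search (hypothetical names). */
class SteepestDescentSketch {

    static final double STEP_TOL = 1e-8;     // assumed epsilon for the step-length test
    static final double LAMBDA_TOL = 1e-10;  // assumed final bracket width for the line search
    static final int MAX_ITER = 20000;       // assumed safety cap on iterations

    // Step 1: the assumed objective and its gradient components
    static double p(double x1, double x2) {
        double r = x2 - x1 * x1, s = 1 - x1;
        return 100 * r * r + s * s;
    }
    static double gradX(double x1, double x2) { return 400 * x1 * (x1 * x1 - x2) + 2 * (x1 - 1); }
    static double gradY(double x1, double x2) { return 200 * (x2 - x1 * x1); }

    // Golden-section search for a minimizer of f on [lo, hi], one new evaluation per pass
    static double goldenSection(DoubleUnaryOperator f, double lo, double hi, double tol) {
        final double r = (Math.sqrt(5) - 1) / 2;          // equals 1 - p in the question
        double c = hi - r * (hi - lo), d = lo + r * (hi - lo);
        double fc = f.applyAsDouble(c), fd = f.applyAsDouble(d);
        while (hi - lo > tol) {
            if (fc < fd) {                                 // keep [lo, d]
                hi = d; d = c; fd = fc;
                c = hi - r * (hi - lo); fc = f.applyAsDouble(c);
            } else {                                       // keep [c, hi]
                lo = c; c = d; fc = fd;
                d = lo + r * (hi - lo); fd = f.applyAsDouble(d);
            }
        }
        return (lo + hi) / 2;
    }

    // Steps 3 and 4, repeated; returns the final {x_1, x_2}
    static double[] minimize(double x1, double x2) {
        for (int k = 0; k < MAX_ITER; k++) {
            final double gx = gradX(x1, x2), gy = gradY(x1, x2);   // step 3
            final double cx1 = x1, cx2 = x2;                       // final copies for the lambda
            DoubleUnaryOperator phi = lam -> p(cx1 - lam * gx, cx2 - lam * gy);
            double a = goldenSection(phi, 0, 10000, LAMBDA_TOL);   // step 4: step size alpha
            if (phi.applyAsDouble(a) >= phi.applyAsDouble(0)) break; // no decrease: stop
            double n1 = x1 - a * gx, n2 = x2 - a * gy;
            double step = Math.hypot(n1 - x1, n2 - x2);
            x1 = n1;
            x2 = n2;
            if (step < STEP_TOL) break;                            // assumed termination test
        }
        return new double[] {x1, x2};
    }

    public static void main(String[] args) {
        double[] x = minimize(1, 0);                 // step 2: initial guess (1, 0)
        System.out.println(x[0] + " " + x[1]);       // final x_1 and x_2 on one line
    }
}
```

Plain steepest descent is known to be slow on curved valleys like the one in this P, so expect many iterations before the step-length test is met; `MAX_ITER` keeps the run bounded.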

```
 1  import java.util.*;
 2  import java.util.ArrayList;
 3
 4  public class OptimizationProject_1_Re_Do_1{
 5
 6      public static void main(String []args){
 7
 8          double initial_guess_1=1, initial_guess_2=0 , gradient_x = (400*initial_guess_1*(initial_guess_1*initial_guess_1 - initial_guess_2) + 2*(initial_guess_1 -1)), gradient_y = (200*(initial_guess_2 - initial_guess_1*initial_guess_1)), a = 4.99999485000546*Math.pow(10,-7), p=(0.50)*(3-Math.pow(5,0.5)), N=1;
 9
10          double x_1 = initial_guess_1 - (gradient_x * a), x_2 = initial_guess_2 - (gradient_y * a);
11
12          double b_0 = 10000,a_0=0, a_1=a_0+(p)*(b_0-a_0),a_2 =a_0+(p)*(b_0-a_0) ,b_1=a_0+(1-p)*(b_0-a_0);
13
14          GoldenSectionSearch(initial_guess_1,initial_guess_2,x_1, x_2, gradient_x, gradient_y, a, a_0, a_1, a_2, b_0, b_1, p,N);
15
16      }
17
18
19
20
21      public static double GoldenSectionSearch(double x_1, double x_2, double initial_guess_1,double initial_guess_2 ,double gradient_x, double gradient_y, double a,double a_0,double a_1,double a_2,double b_0,double b_1,double p,double N){
22
23          while(Math.pow(1-p,N)>=(0.50)*(Math.pow(10,-6))){
24
25              double f_a_0 = 100*(initial_guess_2 - (gradient_y * a_0) - initial_guess_1 - (gradient_x * a_0)*initial_guess_1 - (gradient_x * a_0))*(initial_guess_2 - (gradient_y * a_0) - initial_guess_1 - (gradient_x * a_0)*initial_guess_1 - (gradient_x * a_0)) + (1-initial_guess_1 - (gradient_x * a_0))*(1-initial_guess_1 - (gradient_x * a_0));
26              double f_b_0 = 100*(initial_guess_2 - (gradient_y * b_0) - initial_guess_1 - (gradient_x * b_0)*initial_guess_1 - (gradient_x * b_0))*(initial_guess_2 - (gradient_y * b_0) - initial_guess_1 - (gradient_x * b_0)*initial_guess_1 - (gradient_x * b_0)) + (1-initial_guess_1 - (gradient_x * b_0))*(1-initial_guess_1 - (gradient_x * b_0));
27
28              if(f_a_0 …
    …
50          … =0.50*Math.pow(10,-6)){
51
52              initial_guess_1=x_1;
53              initial_guess_2=x_2;
54              gradient_x = (400*initial_guess_1*(initial_guess_1*initial_guess_1 - initial_guess_2) + 2*(initial_guess_1 -1));
55              gradient_y = (200*(initial_guess_2 - initial_guess_1*initial_guess_1));
56
57              GoldenSectionSearch(initial_guess_1,initial_guess_2,x_1, x_2, gradient_x, gradient_y, a, a_0, a_1, a_2, b_0, b_1, p,N);
58
59
60          } else{
61
62              System.out.print(x_2);
63          }
64
65
66          //System.out.println(a);
67
68          // Double Boxed_a = new Double(a);
69
70
71          return x_2;
72
73      }
74
75
76
77
78  }
```
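Two things in the listing are worth checking before the missing lines. First, `main` passes `(initial_guess_1, initial_guess_2, x_1, x_2, ...)` but the signature declares `(x_1, x_2, initial_guess_1, initial_guess_2, ...)`, so inside the method those names hold each other's values. Second, the `while` condition depends only on `N`, and in the part of the listing that survives `N` is never incremented. The sketch below is one possible way to organize the search, not a reconstruction of lines 29-49: it keeps the question's names `a_0`, `b_0`, `a_1`, `b_1`, `p` and `N`, runs the golden-section search as a plain loop that increments `N`, reuses one function value per pass, and returns the midpoint of the final bracket. The method name `goldenSectionSearch` and its `tolerance` argument are hypothetical.

```java
import java.util.function.DoubleUnaryOperator;

/** Sketch: golden-section search using the question's variable names (hypothetical method). */
class GoldenSectionSketch {

    static double goldenSectionSearch(DoubleUnaryOperator f, double a_0, double b_0, double tolerance) {
        final double p = 0.5 * (3 - Math.sqrt(5));      // about 0.381966, as in the question
        double a_1 = a_0 + p * (b_0 - a_0);             // lower interior point
        double b_1 = a_0 + (1 - p) * (b_0 - a_0);       // upper interior point
        double f_a_1 = f.applyAsDouble(a_1), f_b_1 = f.applyAsDouble(b_1);
        int N = 0;
        // Each pass keeps a fraction (1 - p) of the bracket, so after N passes the
        // bracket is (1 - p)^N times its original width.
        while (Math.pow(1 - p, N) >= tolerance) {
            if (f_a_1 < f_b_1) {        // minimizer lies in [a_0, b_1]
                b_0 = b_1;
                b_1 = a_1; f_b_1 = f_a_1;                          // old lower point is reused
                a_1 = a_0 + p * (b_0 - a_0); f_a_1 = f.applyAsDouble(a_1);
            } else {                    // minimizer lies in [a_1, b_0]
                a_0 = a_1;
                a_1 = b_1; f_a_1 = f_b_1;                          // old upper point is reused
                b_1 = a_0 + (1 - p) * (b_0 - a_0); f_b_1 = f.applyAsDouble(b_1);
            }
            N++;                        // the loop condition only changes because of this
        }
        return 0.5 * (a_0 + b_0);
    }

    public static void main(String[] args) {
        // phi(lambda) for the first step from (1, 0), where the gradient is (400, -200),
        // assuming P(x1, x2) = 100 (x2 - x1^2)^2 + (1 - x1)^2
        DoubleUnaryOperator phi = lam -> {
            double x1 = 1 - 400 * lam, x2 = 200 * lam;
            double r = x2 - x1 * x1, s = 1 - x1;
            return 100 * r * r + s * s;
        };
        System.out.println(goldenSectionSearch(phi, 0, 10000, 0.5e-6)); // question's tolerance
        System.out.println(goldenSectionSearch(phi, 0, 10000, 1e-15));  // much tighter tolerance
    }
}
```

Because the question's test `(1-p)^N >= 0.5e-6` is relative to the starting bracket, with `b_0 = 10000` the loop stops after 31 passes with a bracket still about 3.3 × 10⁻³ wide. That is comparable to the step sizes involved here: along the first search direction the local minimizers of phi lie at roughly lambda ≈ 1.2 × 10⁻³ and lambda ≈ 5 × 10⁻³, so a tighter tolerance or a smaller bracket is likely needed.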

The Steepest Descent algorithm is:

1) Choose a starting point $x_0$.

2) Calculate the search direction $d_k = -\nabla P(x_k)$.

3) Determine the next iterate $x_{k+1} = x_k + \lambda_k d_k$, where $\lambda_k$ solves the univariate optimization problem $\min_{\lambda} P(x_k + \lambda d_k)$.

4) Repeat steps 2 and 3 until termination.

Some termination criteria to consider compare a quantity at the new iterate $x_{k+1}$ with $\varepsilon$, where $\varepsilon$ is some small positive scalar.
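As a worked instance of steps 2 and 3 for the first iteration, assuming $P(x_1, x_2) = 100(x_2 - x_1^2)^2 + (1 - x_1)^2$ (inferred from `gradient_x` and `gradient_y` in the code, not stated in the question) and the starting point $x_0 = (1, 0)$ used in the code:

$$
\begin{aligned}
\frac{\partial P}{\partial x_1} &= 400\,x_1\,(x_1^2 - x_2) + 2\,(x_1 - 1), \qquad
\frac{\partial P}{\partial x_2} = 200\,(x_2 - x_1^2),\\
\nabla P(x_0) &= (400,\,-200), \qquad
x_0 - \lambda\,\nabla P(x_0) = (1 - 400\lambda,\; 200\lambda),\\
\phi(\lambda) &= P(1 - 400\lambda,\; 200\lambda) = 100\bigl(200\lambda - (1 - 400\lambda)^2\bigr)^2 + (400\lambda)^2, \qquad \phi(0) = 100.
\end{aligned}
$$

Writing $u = 400\lambda$ gives $\phi = 100\bigl(u/2 - (1 - u)^2\bigr)^2 + u^2$. The squared term vanishes at $u = 1/2$ and $u = 2$, so $\phi$ has two local minima near those values and is not unimodal on a wide bracket such as $[0, 10000]$; golden-section search will settle on one of them.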
