Question

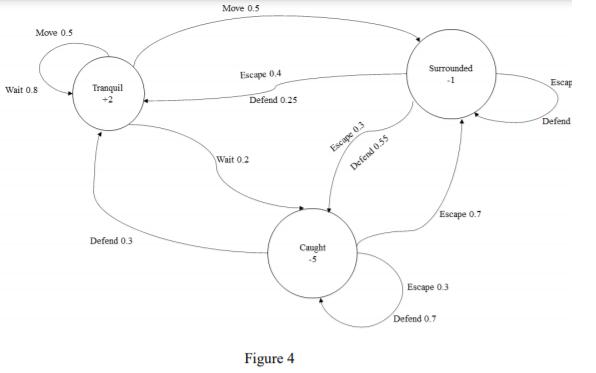


Consider the Markov decision process of Figure 4. Its transitions are labeled with the action names and transition probabilities, and its states are labeled with their rewards. It models the possible actions of a character being chased by others. In the Tranquil state nothing happens: there are no enemies on the horizon, and the character receives a reward of +2. If it waits and does nothing, it risks being located and caught by the enemy (probability 0.2). If caught, it receives a penalty of -5. If it moves from the Tranquil state, it has a 50% chance of being ambushed and surrounded by the enemy. Once surrounded or caught, it may attempt to escape or defend itself.

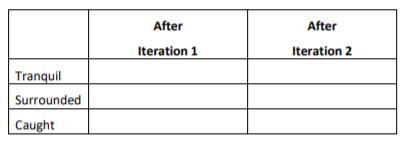

1. In the following table, give the utility values of the states at the end of each of the first two iterations of the value-iteration algorithm, assuming a discount factor of 0.9 and initial state values (iteration 0) all equal to 0. Give only the values; do not show the calculations.

2. Give the action plan (policy) resulting from iteration 2.
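For reference, here is a sketch of the update and policy-extraction rules both questions rely on. It assumes the common textbook form with rewards attached to states (the problem does not state the convention explicitly). Because every U_0 is 0, the first iteration under this form simply reproduces the state rewards.

```latex
\begin{aligned}
U_{k+1}(s) &= R(s) + \gamma \max_{a} \sum_{s'} P(s' \mid s, a)\, U_k(s'), && \gamma = 0.9,\; U_0(s) = 0,\\
U_1(s) &= R(s) + 0.9 \cdot 0 = R(s),\\
\pi_2(s) &= \arg\max_{a} \sum_{s'} P(s' \mid s, a)\, U_2(s').
\end{aligned}
```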

Figure 4: states Tranquil (+2), Surrounded (-1) and Caught (-5); actions Wait and Move from Tranquil, Escape and Defend from Surrounded and Caught.
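A minimal Python sketch of one value-iteration backup for the Tranquil state. It assumes the update form with rewards attached to states, U_{k+1}(s) = R(s) + γ·max_a Σ P(s'|s,a)·U_k(s'). It encodes only what the text states: the three rewards, Wait (0.8 stay, 0.2 caught) and Move (0.5 surrounded). The assumption that the other half of Move stays in Tranquil is an inference, and the names `REWARDS`, `TRANQUIL_ACTIONS` and `expected_utilities` are illustrative.

```python
# Sketch: one value-iteration backup for the Tranquil state of the Figure 4 MDP.
# Assumed update (rewards on states):
#   U_{k+1}(s) = R(s) + GAMMA * max_a sum_{s'} P(s' | s, a) * U_k(s')

GAMMA = 0.9  # discount factor given in the question

# State rewards from the problem text and Figure 4.
REWARDS = {"Tranquil": 2.0, "Surrounded": -1.0, "Caught": -5.0}

# Only Tranquil's transitions are stated in the text.
# Assumption: the non-ambush half of Move keeps the character in Tranquil.
TRANQUIL_ACTIONS = {
    "Wait": {"Tranquil": 0.8, "Caught": 0.2},
    "Move": {"Tranquil": 0.5, "Surrounded": 0.5},
}


def expected_utilities(actions, utilities):
    """Return sum_{s'} P(s' | s, a) * U(s') for each action a."""
    return {
        action: sum(p * utilities[nxt] for nxt, p in outcomes.items())
        for action, outcomes in actions.items()
    }


# Iteration 1: U_0 is 0 everywhere, so the max term is 0 and U_1(s) = R(s).
u1 = dict(REWARDS)

# Iteration 2 for Tranquil uses the iteration-1 utilities of its successors.
q2 = expected_utilities(TRANQUIL_ACTIONS, u1)
u2_tranquil = REWARDS["Tranquil"] + GAMMA * max(q2.values())
best_action = max(q2, key=q2.get)  # action achieving the max in this backup

print({a: round(v, 3) for a, v in q2.items()})
print(round(u2_tranquil, 3), best_action)
```

Under these assumptions the backup gives expected utilities 0.6 for Wait and 0.5 for Move, so U_2(Tranquil) = 2 + 0.9 × 0.6 = 2.54. Surrounded and Caught need their Escape and Defend transitions from Figure 4, so they are left out here. A convention that also discounts the immediate reward would produce different numbers.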

Step by Step Solution


Step: 1

| State | Utility after iteration 1 | Utility after iteration 2 |
|---|---|---|
| Tranquil | 1.8 | 1.94 |
| Surrounded | 0.75 | 1.09 |
| Caught | 4.5 | 4.5 |

The action plan (policy) resulting from iteration 2 is to move from the Tranquil sta...
