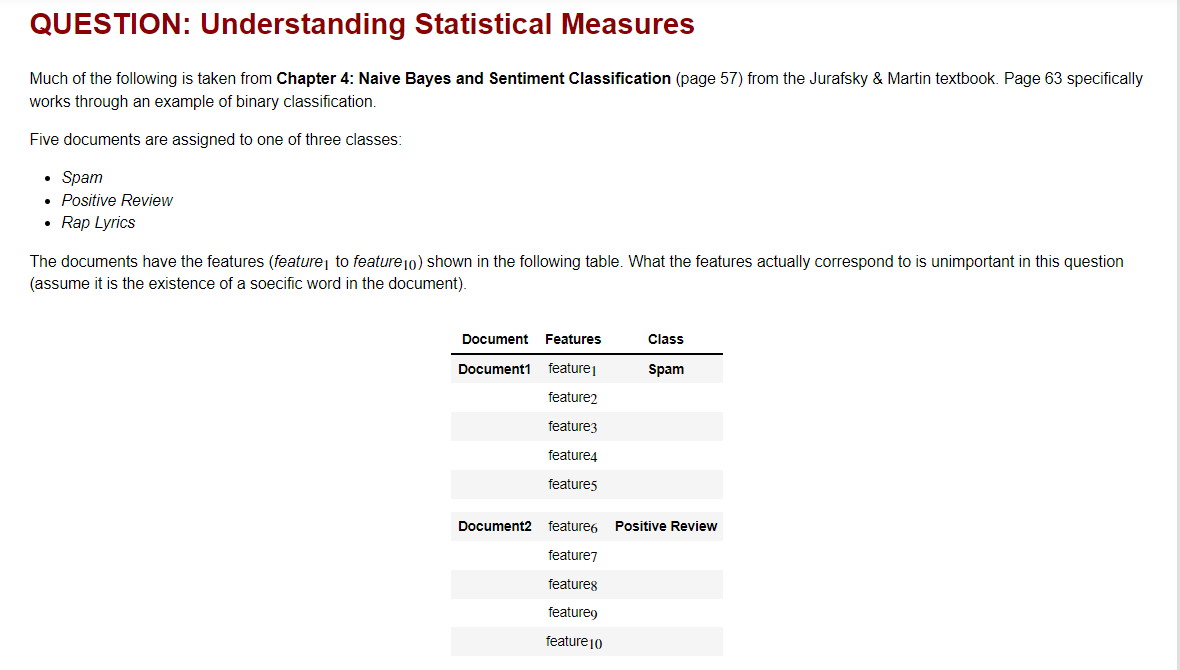

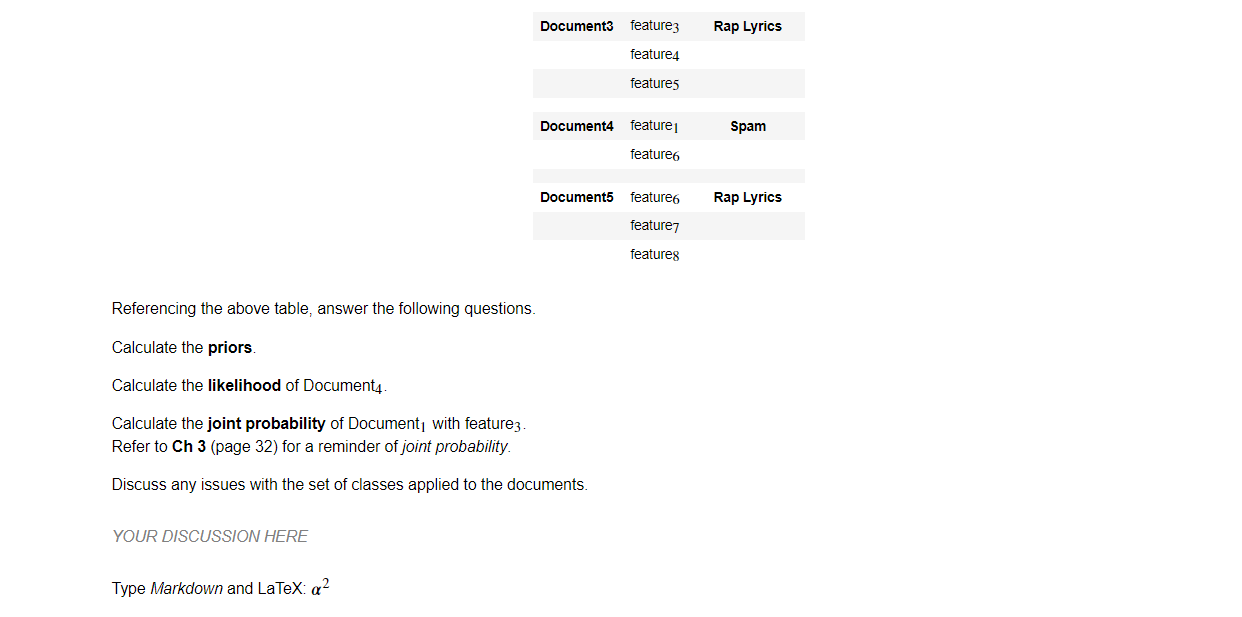

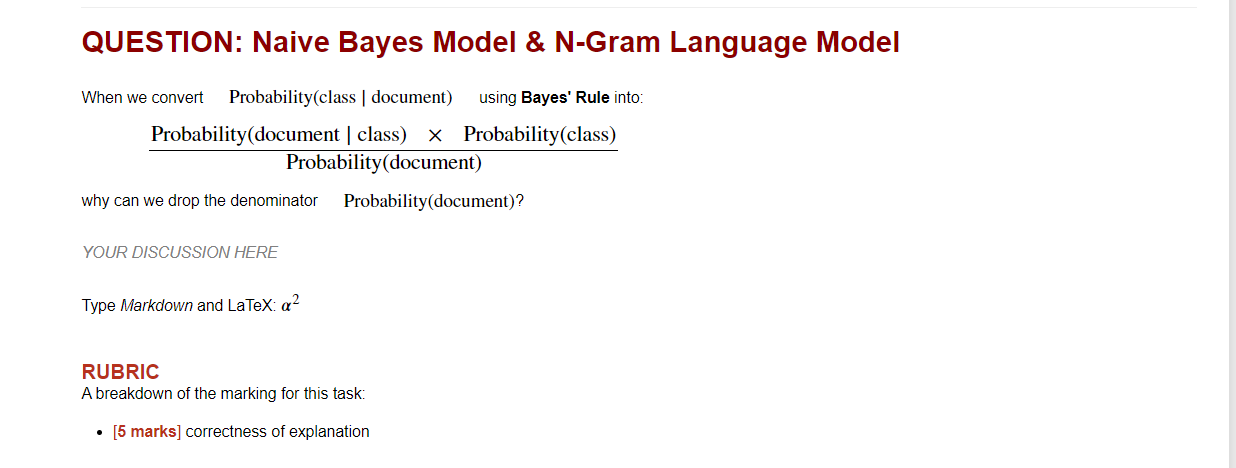

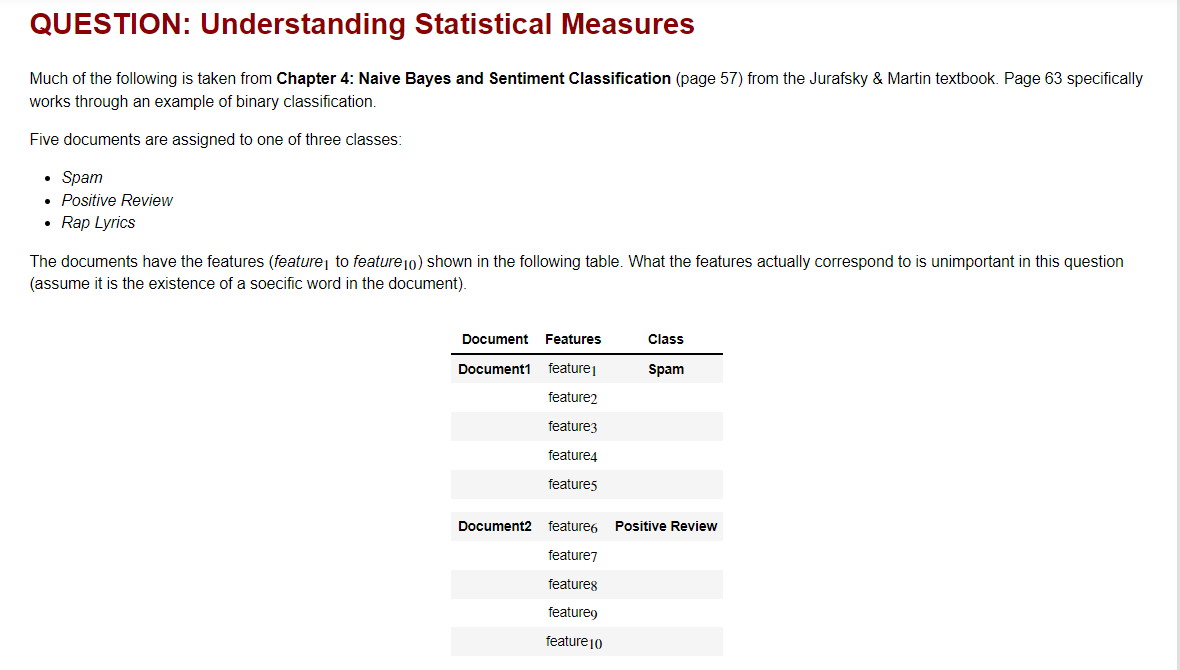

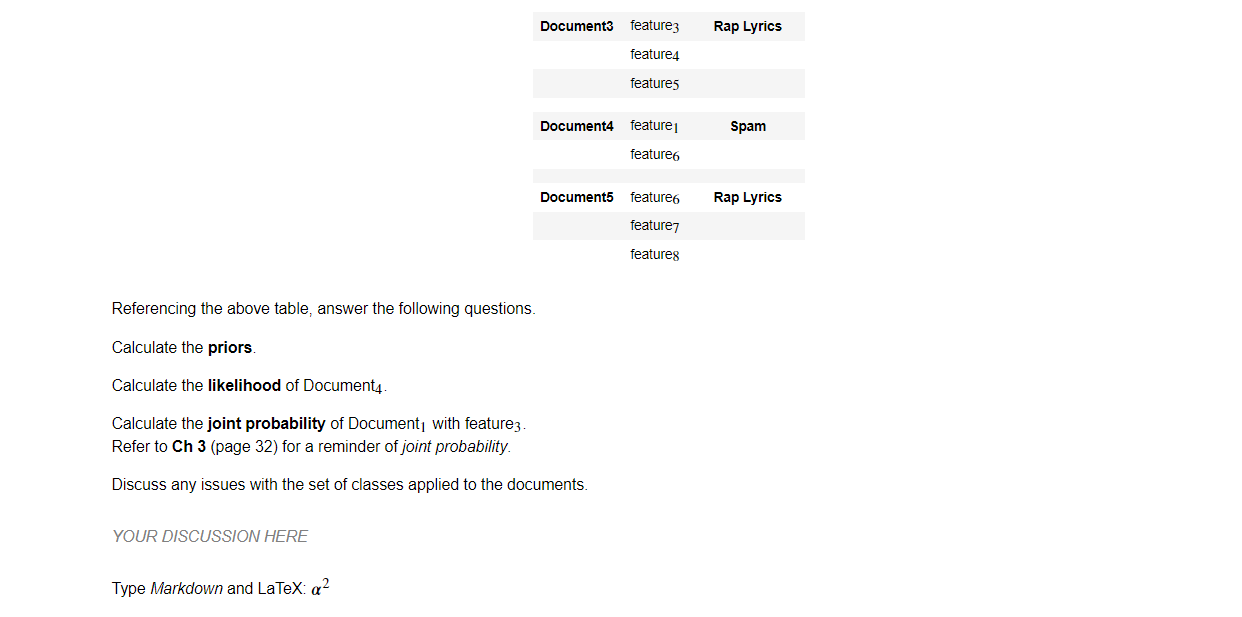

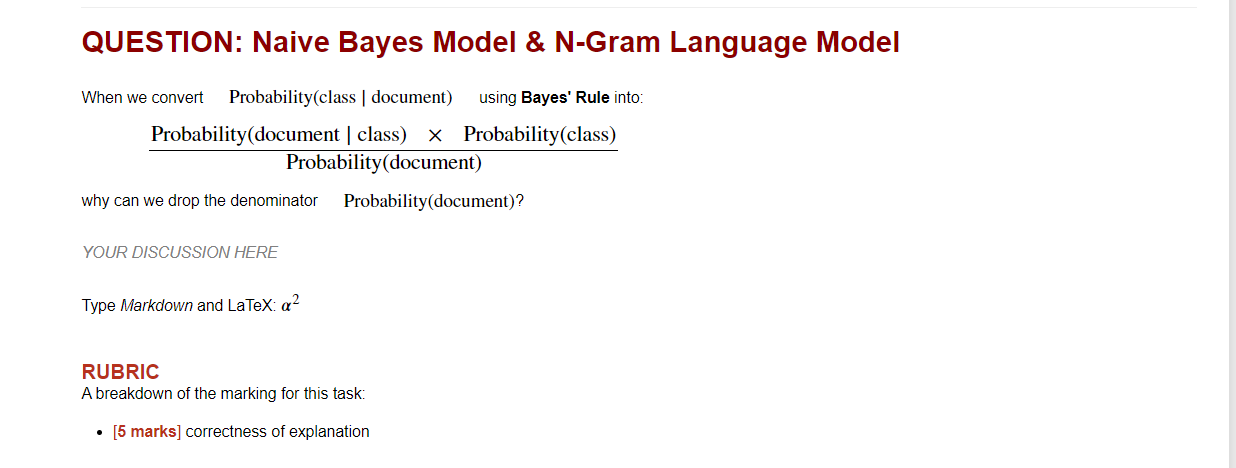

Much of the following is taken from Chapter 4: Naive Bayes and Sentiment Classification (page 57) of the Jurafsky & Martin textbook. Page 63 specifically works through an example of binary classification.

Five documents are each assigned to one of three classes:

- Spam
- Positive Review
- Rap Lyrics

The documents have the features (feature 1 to feature 10) shown in the following table. What the features actually correspond to is unimportant in this question (assume each is the presence of a specific word in the document).

Referencing the above table, answer the following questions.

Calculate the priors.

Calculate the likelihood of Document 4.

Calculate the joint probability of Document 1 with feature 3. Refer to Ch 3 (page 32) for a reminder of joint probability.
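The prior, likelihood, and joint-probability calculations above can be sketched in Python. This is a minimal sketch under stated assumptions: the assignment's table is only available in the images, so the five documents below are **hypothetical** placeholders, not the real data; per-class feature probabilities use the add-one (Laplace) smoothed estimate from J&M Chapter 4, treating each present feature as one word occurrence; and the helper names (`priors`, `feature_likelihoods`, `doc_likelihood`, `joint_probability`) are illustrative only. Replace the toy rows with the real table before trusting any number.

```python
from collections import Counter
from fractions import Fraction

# HYPOTHETICAL toy data, NOT the assignment's table (which is only in the images).
# Each row: (class label, set of feature numbers present in the document).
docs = [
    ("Spam", {1, 2, 3}),
    ("Spam", {2, 5}),
    ("Positive Review", {3, 4, 6}),
    ("Rap Lyrics", {3, 7, 8}),  # "Document 4" in this toy set (index 3)
    ("Positive Review", {4, 9}),
]
FEATURES = range(1, 11)  # feature 1 .. feature 10


def priors(docs):
    """Prior P(c) = N_c / N_doc (fraction of documents labeled c)."""
    counts = Counter(label for label, _ in docs)
    return {c: Fraction(k, len(docs)) for c, k in counts.items()}


def feature_likelihoods(docs, features=FEATURES):
    """Add-one smoothed P(f | c) = (count(f, c) + 1) / (sum_f' count(f', c) + |V|).

    Each present feature counts as one occurrence (binary features)."""
    vocab_size = len(features)
    result = {}
    for c in {label for label, _ in docs}:
        counts = Counter(f for label, fs in docs if label == c for f in fs)
        total = sum(counts.values())
        result[c] = {f: Fraction(counts[f] + 1, total + vocab_size) for f in features}
    return result


def doc_likelihood(present, c, lik):
    """Naive Bayes likelihood P(d | c): product of P(f | c) over the features in d."""
    p = Fraction(1)
    for f in present:
        p *= lik[c][f]
    return p


def joint_probability(present, c, pri, lik):
    """Joint probability P(c, d) = P(d | c) * P(c)."""
    return doc_likelihood(present, c, lik) * pri[c]


pri = priors(docs)
lik = feature_likelihoods(docs)
doc4 = docs[3][1]
for c in sorted(pri):
    print(c, pri[c], doc_likelihood(doc4, c, lik), joint_probability(doc4, c, pri, lik))
```

With these toy rows, the likelihood of the toy "Document 4" under Rap Lyrics is (2/13)³ = 8/2197, and its joint probability with that class is 8/2197 × 1/5 = 8/10985. `Fraction` keeps the arithmetic exact, which makes hand calculations easy to check; if the assignment intends unsmoothed maximum-likelihood estimates instead, drop the `+ 1` and `+ vocab_size` terms.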
Discuss any issues with the set of classes applied to the documents.

YOUR DISCUSSION HERE

QUESTION: Naive Bayes Model & N-Gram Language Model

When we convert Probability(class | document) using Bayes' Rule into:

$$
\text{Probability}(\text{class} \mid \text{document}) = \frac{\text{Probability}(\text{document} \mid \text{class})\,\text{Probability}(\text{class})}{\text{Probability}(\text{document})}
$$

why can we drop the denominator Probability(document)?

YOUR DISCUSSION HERE

RUBRIC

A breakdown of the marking for this task:

- [5 marks] correctness of explanation
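For the Bayes' Rule question, a small numeric check can make the argument concrete. The sketch below uses **hypothetical** likelihoods and priors for a single document (not values from the assignment's table), computes Probability(document) by the law of total probability (assuming it is nonzero), and compares the class chosen with and without dividing by it. The variable names are illustrative only.

```python
from fractions import Fraction

# HYPOTHETICAL likelihoods P(d | c) and priors P(c) for one document d.
likelihoods = {"Spam": Fraction(1, 100), "Positive Review": Fraction(1, 50), "Rap Lyrics": Fraction(1, 200)}
class_priors = {"Spam": Fraction(2, 5), "Positive Review": Fraction(2, 5), "Rap Lyrics": Fraction(1, 5)}

# Numerator of Bayes' Rule for each class: P(d | c) * P(c).
scores = {c: likelihoods[c] * class_priors[c] for c in class_priors}

# Denominator P(d) via the law of total probability: one number shared by every class.
evidence = sum(scores.values())
posteriors = {c: s / evidence for c, s in scores.items()}

# Dividing every score by the same positive constant cannot change which class is largest.
best_without_denominator = max(scores, key=scores.get)
best_with_denominator = max(posteriors, key=posteriors.get)
print(best_without_denominator, best_with_denominator)
print(evidence, posteriors)
```

Only the normalized posteriors sum to 1, so the denominator still matters when calibrated probabilities are needed; it just has no effect on which class the argmax picks.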