Answered step by step

Verified Expert Solution

Question

1 Approved Answer

package lexer; /** * The Lexer class is responsible for scanning the source file * which is a stream of characters and returning a stream

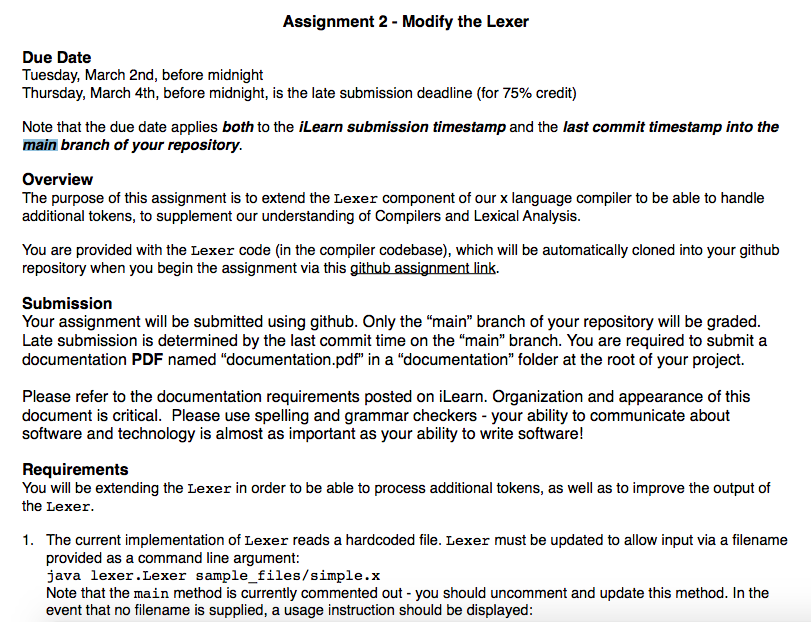

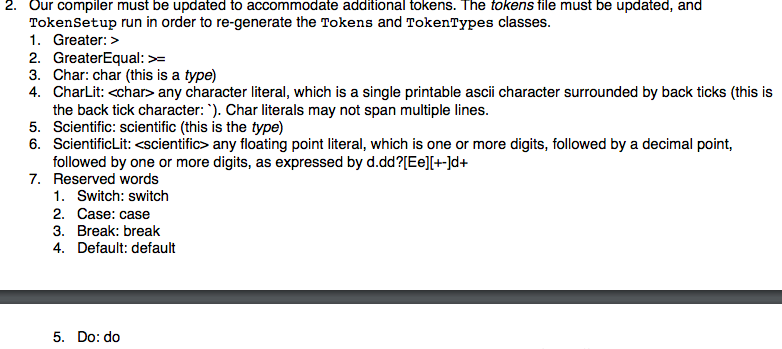

package lexer; /** * The Lexer class is responsible for scanning the source file * which is a stream of characters and returning a stream of * tokens; each token object will contain the string (or access * to the string) that describes the token along with an * indication of its location in the source program to be used * for error reporting; we are tracking line numbers; white spaces * are space, tab, newlines */ public class Lexer { private boolean atEOF = false; // next character to process private char ch; private SourceReader source; // positions in line of current token private int startPosition, endPosition; /** * Lexer constructor * @param sourceFile is the name of the File to read the program source from */ public Lexer( String sourceFile ) throws Exception { // init token table new TokenType(); source = new SourceReader( sourceFile ); ch = source.read(); } /** * newIdTokens are either ids or reserved words; new id's will be inserted * in the symbol table with an indication that they are id's * @param id is the String just scanned - it's either an id or reserved word * @param startPosition is the column in the source file where the token begins * @param endPosition is the column in the source file where the token ends * @return the Token; either an id or one for the reserved words */ public Token newIdToken( String id, int startPosition, int endPosition ) { return new Token( startPosition, endPosition, Symbol.symbol( id, Tokens.Identifier ) ); } /** * number tokens are inserted in the symbol table; we don't convert the * numeric strings to numbers until we load the bytecodes for interpreting; * this ensures that any machine numeric dependencies are deferred * until we actually run the program; i.e. the numeric constraints of the * hardware used to compile the source program are not used * @param number is the int String just scanned * @param startPosition is the column in the source file where the int begins * @param endPosition is the column in the source file where the int ends * @return the int Token */ public Token newNumberToken( String number, int startPosition, int endPosition) { return new Token( startPosition, endPosition, Symbol.symbol( number, Tokens.INTeger ) ); } /** * build the token for operators (+ -) or separators (parens, braces) * filter out comments which begin with two slashes * @param s is the String representing the token * @param startPosition is the column in the source file where the token begins * @param endPosition is the column in the source file where the token ends * @return the Token just found */ public Token makeToken( String s, int startPosition, int endPosition ) { // filter comments if( s.equals("//") ) { try { int oldLine = source.getLineno(); do { ch = source.read(); } while( oldLine == source.getLineno() ); } catch (Exception e) { atEOF = true; } return nextToken(); } // ensure it's a valid token Symbol sym = Symbol.symbol( s, Tokens.BogusToken ); if( sym == null ) { System.out.println( "******** illegal character: " + s ); atEOF = true; return nextToken(); } return new Token( startPosition, endPosition, sym ); } /** * @return the next Token found in the source file */ public Token nextToken() { // ch is always the next char to process if( atEOF ) { if( source != null ) { source.close(); source = null; } return null; } try { // scan past whitespace while( Character.isWhitespace( ch )) { ch = source.read(); } } catch( Exception e ) { atEOF = true; return nextToken(); } startPosition = source.getPosition(); endPosition = startPosition - 1; if( Character.isJavaIdentifierStart( ch )) { // return tokens for ids and reserved words String id = ""; try { do { endPosition++; id += ch; ch = source.read(); } while( Character.isJavaIdentifierPart( ch )); } catch( Exception e ) { atEOF = true; } return newIdToken( id, startPosition, endPosition ); } if( Character.isDigit( ch )) { // return number tokens String number = ""; try { do { endPosition++; number += ch; ch = source.read(); } while( Character.isDigit( ch )); } catch( Exception e ) { atEOF = true; } return newNumberToken( number, startPosition, endPosition ); } // At this point the only tokens to check for are one or two // characters; we must also check for comments that begin with // 2 slashes String charOld = "" + ch; String op = charOld; Symbol sym; try { endPosition++; ch = source.read(); op += ch; // check if valid 2 char operator; if it's not in the symbol // table then don't insert it since we really have a one char // token sym = Symbol.symbol( op, Tokens.BogusToken ); if (sym == null) { // it must be a one char token return makeToken( charOld, startPosition, endPosition ); } endPosition++; ch = source.read(); return makeToken( op, startPosition, endPosition ); } catch( Exception e ) { /* no-op */ } atEOF = true; if( startPosition == endPosition ) { op = charOld; } return makeToken( op, startPosition, endPosition ); } public static void main(String args[]) { Token token; try { Lexer lex = new Lexer( "simple.x" ); while( true ) { token = lex.nextToken(); String p = "L: " + token.getLeftPosition() + " R: " + token.getRightPosition() + " " + TokenType.tokens.get(token.getKind()) + " "; if ((token.getKind() == Tokens.Identifier) || (token.getKind() == Tokens.INTeger)) { p += token.toString(); } System.out.println( p + ": " + lex.source.getLineno() ); } } catch (Exception e) {} } } Assignment 2 - Modify the Lexer Due Date Tuesday, March 2nd, before midnight Thursday, March 4th, before midnight, is the late submission deadline (for 75% credit) Note that the due date applies both to the iLearn submission timestamp and the last commit timestamp into the main branch of your repository. Overview The purpose of this assignment is to extend the Lexer component of our x language compiler to be able to handle additional tokens, to supplement our understanding of Compilers and Lexical Analysis. You are provided with the Lexer code (in the compiler codebase), which will be automatically cloned into your github repository when you begin the assignment via this github assignment link. Submission Your assignment will be submitted using github. Only the "main" branch of your repository will be graded. Late submission is determined by the last commit time on the "main" branch. You are required to submit a documentation PDF named "documentation.pdf" in a "documentation" folder at the root of your project. Please refer to the documentation requirements posted on iLearn. Organization and appearance of this document is critical. Please use spelling and grammar checkers - your ability to communicate about software and technology is almost as important as your ability to write software! Requirements You will be extending the Lexer in order to be able to process additional tokens, as well as to improve the output of the Lexer. 1. The current implementation of Lexer reads a hardcoded file. Lexer must be updated to allow input via a filename provided as a command line argument: java lexer.Lexer sample_files/simple.x Note that the main method is currently commented out - you should uncomment and update this method. In the event that no filename is supplied, a usage instruction should be displayed: 2. Our compiler must be updated to accommodate additional tokens. The tokens file must be updated, and TokenSetup run in order to re-generate the Tokens and TokenTypes classes. 1. Greater: > 2. Greater Equal: >= 3. Char: char (this is a type) 4. Charlit: Step by Step Solution

There are 3 Steps involved in it

Step: 1

Get Instant Access to Expert-Tailored Solutions

See step-by-step solutions with expert insights and AI powered tools for academic success

Step: 2

Step: 3

Ace Your Homework with AI

Get the answers you need in no time with our AI-driven, step-by-step assistance

Get Started