Answered step by step

Verified Expert Solution

Question

1 Approved Answer

parts a-d. No online copypaste of article pls = 2. In this exercise, we will explore how vanishing and exploding gradients affect the learning process.

parts a-d. No online copypaste of article pls

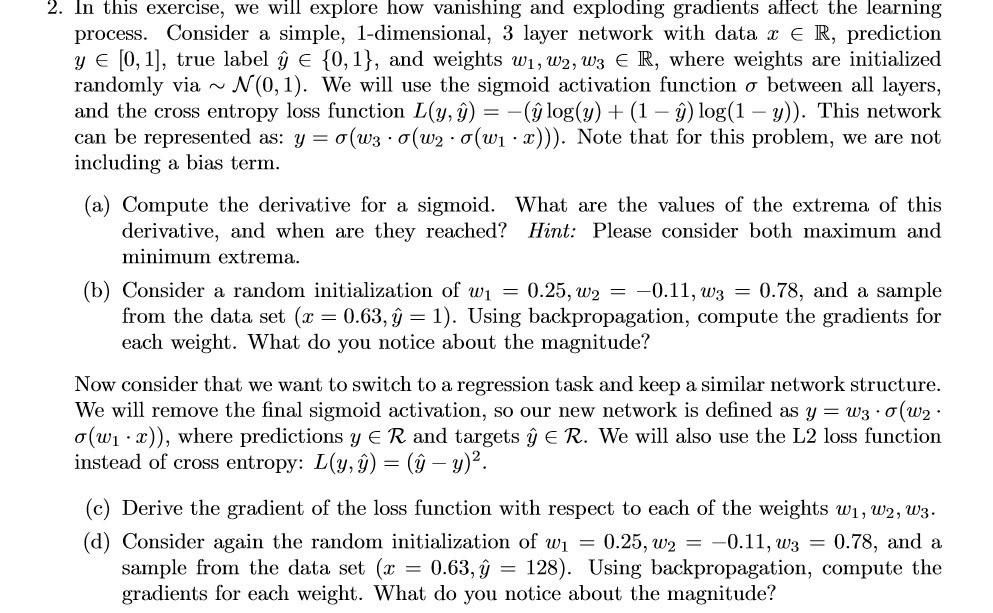

= 2. In this exercise, we will explore how vanishing and exploding gradients affect the learning process. Consider a simple, 1-dimensional, 3 layer network with data x E R, prediction y E (0,1), true label {0,1}, and weights w1, W2, W3 R, where weights are initialized randomly via ~ N(0,1). We will use the sigmoid activation function o between all layers, and the cross entropy loss function L(y, ) = - log(y) + (1 - ) log(1 - y)). This network can be represented as: y = 0(W30(W2.0(w1 2))). Note that for this problem, we are not including a bias term. (a) Compute the derivative for a sigmoid. What are the values of the extrema of this derivative, and when are they reached? Hint: Please consider both maximum and minimum extrema. (b) Consider a random initialization of w1 = 0.25, W2 = -0.11, w3 = 0.78, and a sample from the data set (x = 0.63, = 1). Using backpropagation, compute the gradients for each weight. What do you notice about the magnitude? Now consider that we want to switch to a regression task and keep a similar network structure. We will remove the final sigmoid activation, so our new network is defined as y = W30(W2 : o(W1 x)), where predictions ye R and targets E R. We will also use the L2 loss function instead of cross entropy: L(y, ) = ( - y)2. (c) Derive the gradient of the loss function with respect to each of the weights w1, W2, W3. (d) Consider again the random initialization of wi = 0.25, W2 = -0.11, w3 0.78, and a sample from the data set (x = 0.63, = 128). Using backpropagation, compute the gradients for each weight. What do you notice about the magnitude? = = 2. In this exercise, we will explore how vanishing and exploding gradients affect the learning process. Consider a simple, 1-dimensional, 3 layer network with data x E R, prediction y E (0,1), true label {0,1}, and weights w1, W2, W3 R, where weights are initialized randomly via ~ N(0,1). We will use the sigmoid activation function o between all layers, and the cross entropy loss function L(y, ) = - log(y) + (1 - ) log(1 - y)). This network can be represented as: y = 0(W30(W2.0(w1 2))). Note that for this problem, we are not including a bias term. (a) Compute the derivative for a sigmoid. What are the values of the extrema of this derivative, and when are they reached? Hint: Please consider both maximum and minimum extrema. (b) Consider a random initialization of w1 = 0.25, W2 = -0.11, w3 = 0.78, and a sample from the data set (x = 0.63, = 1). Using backpropagation, compute the gradients for each weight. What do you notice about the magnitude? Now consider that we want to switch to a regression task and keep a similar network structure. We will remove the final sigmoid activation, so our new network is defined as y = W30(W2 : o(W1 x)), where predictions ye R and targets E R. We will also use the L2 loss function instead of cross entropy: L(y, ) = ( - y)2. (c) Derive the gradient of the loss function with respect to each of the weights w1, W2, W3. (d) Consider again the random initialization of wi = 0.25, W2 = -0.11, w3 0.78, and a sample from the data set (x = 0.63, = 128). Using backpropagation, compute the gradients for each weight. What do you notice about the magnitude? =Step by Step Solution

There are 3 Steps involved in it

Step: 1

Get Instant Access to Expert-Tailored Solutions

See step-by-step solutions with expert insights and AI powered tools for academic success

Step: 2

Step: 3

Ace Your Homework with AI

Get the answers you need in no time with our AI-driven, step-by-step assistance

Get Started