Question

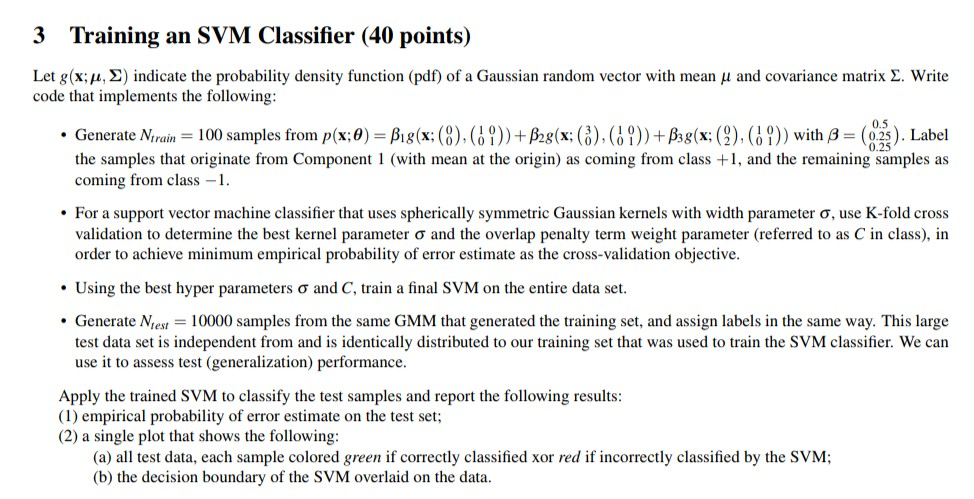


Please answer in MATLAB

3 Training an SVM Classifier (40 points)

Let g(x; μ, Σ) denote the probability density function (pdf) of a Gaussian random vector with mean μ and covariance matrix Σ. Write code that implements the following.

Generate N_train = 100 samples from the three-component Gaussian mixture model (GMM) p(x; θ) = α1 g(x; μ1, Σ1) + α2 g(x; μ2, Σ2) + α3 g(x; μ3, Σ3) with weights α = (0.5, 0.25, 0.25), where Component 1 has its mean at the origin. Label the samples that originate from Component 1 as class +1 and the remaining samples as class -1. Call this training set D_train.

For a support vector machine (SVM) classifier that uses spherically symmetric Gaussian kernels with width parameter σ, use K-fold cross-validation to determine the best kernel width σ and the overlap penalty weight (referred to as C in class), using the minimum empirical probability of error estimate as the cross-validation objective.

Using the best hyperparameters σ and C, train a final SVM on the entire data set D_train.

Generate N_test = 10000 samples from the same GMM that generated the training set, and assign labels in the same way. This large test set D_test is independent of D_train and identically distributed with it, so it can be used to assess test (generalization) performance.

Apply the trained SVM to classify the test samples and report the following results: (1) the empirical probability of error estimate on the test set; (2) a single plot that shows (a) all test data, each sample colored green if correctly classified or red if incorrectly classified by the SVM, and (b) the decision boundary of the SVM overlaid on the data.
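A minimal sketch of the data-generation and labeling step, written in standard-library Python rather than MATLAB so the logic is easy to follow, and assuming 2-D data. Only the Component 1 mean (the origin) and the weights (0.5, 0.25, 0.25) are taken from the question; the other means, all covariances, and the names `sample_gmm` and `empirical_error` are hypothetical. Each sample first picks a component k with probability α_k, then draws x = μ_k + L_k z with z ~ N(0, I), where L_k is a Cholesky factor of Σ_k; the label records whether k was Component 1. In MATLAB the same two steps are usually done with `rand` for the component draw and `mvnrnd` for the Gaussian draw.

```python
import random

# Mixture weights as read from the question; Component 1 (label +1) is
# centered at the origin. MEANS[1], MEANS[2] and all covariances below are
# HYPOTHETICAL placeholders, not values from the assignment.
WEIGHTS = [0.5, 0.25, 0.25]
MEANS = [(0.0, 0.0), (3.0, 0.0), (0.0, 3.0)]
# Each covariance Sigma_k is given by a lower-triangular Cholesky factor
# L_k = ((l00, 0), (l10, l11)) with Sigma_k = L_k L_k^T (identity here).
CHOLS = [((1.0, 0.0), (0.0, 1.0))] * 3


def sample_gmm(n, rng):
    """Draw n 2-D samples from the GMM; label +1 for Component 1, else -1."""
    xs, ys = [], []
    for _ in range(n):
        # Pick a component with probability alpha_k.
        k = rng.choices(range(len(WEIGHTS)), weights=WEIGHTS)[0]
        # x = mu_k + L_k z with z ~ N(0, I).
        z0, z1 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        (l00, _), (l10, l11) = CHOLS[k]
        mx, my = MEANS[k]
        xs.append((mx + l00 * z0, my + l10 * z0 + l11 * z1))
        ys.append(1 if k == 0 else -1)
    return xs, ys


def empirical_error(y_true, y_pred):
    """Empirical probability of error: fraction of mismatched labels."""
    wrong = sum(1 for t, p in zip(y_true, y_pred) if t != p)
    return wrong / len(y_true)
```

For example, `rng = random.Random(0)` followed by `sample_gmm(100, rng)` and `sample_gmm(10000, rng)` yields training and test sets of the required sizes, and `empirical_error(y_test, y_pred)` gives the test-set error estimate once predictions are available.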
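A minimal sketch of the SVM and cross-validation steps, again in standard-library Python and only as an illustration. It trains the soft-margin dual with the simplified SMO algorithm (a swapped-in solver; in MATLAB one would normally call `fitcsvm`), assumes the kernel k(x, x') = exp(-||x - x'||^2 / (2σ^2)) as the parameterization of the width σ, and selects (C, σ) by grid search, minimizing the pooled K-fold validation error, i.e. the fraction of training samples misclassified when each is held out exactly once. The function names, tolerances, and iteration caps are hypothetical choices.

```python
import math
import random


def rbf(a, b, sigma):
    """Spherically symmetric Gaussian kernel exp(-||a - b||^2 / (2 sigma^2))."""
    d2 = sum((p - q) ** 2 for p, q in zip(a, b))
    return math.exp(-d2 / (2.0 * sigma ** 2))


def train_svm(xs, ys, C, sigma, tol=1e-3, max_passes=5, max_sweeps=200, seed=0):
    """Soft-margin kernel SVM trained with simplified SMO.

    xs: list of point tuples, ys: labels in {+1, -1}.
    Returns a decision function x -> sum_k alpha_k y_k k(x_k, x) + b.
    """
    rng = random.Random(seed)
    m = len(xs)
    K = [[rbf(xs[i], xs[j], sigma) for j in range(m)] for i in range(m)]
    alpha = [0.0] * m
    b = 0.0

    def f(i):  # current decision value at training point i
        return sum(alpha[k] * ys[k] * K[k][i] for k in range(m)) + b

    passes = sweeps = 0
    while passes < max_passes and sweeps < max_sweeps:
        sweeps += 1
        changed = 0
        for i in range(m):
            e_i = f(i) - ys[i]
            # Only optimize alpha_i if it violates the KKT conditions.
            if not ((ys[i] * e_i < -tol and alpha[i] < C)
                    or (ys[i] * e_i > tol and alpha[i] > 0)):
                continue
            j = rng.randrange(m - 1)
            j += j >= i  # uniform random partner j != i
            e_j = f(j) - ys[j]
            a_i, a_j = alpha[i], alpha[j]
            if ys[i] != ys[j]:
                lo, hi = max(0.0, a_j - a_i), min(C, C + a_j - a_i)
            else:
                lo, hi = max(0.0, a_i + a_j - C), min(C, a_i + a_j)
            eta = 2.0 * K[i][j] - K[i][i] - K[j][j]  # must be < 0 to step
            if lo == hi or eta >= 0:
                continue
            new_j = min(hi, max(lo, a_j - ys[j] * (e_i - e_j) / eta))
            if abs(new_j - a_j) < 1e-5:
                continue
            new_i = a_i + ys[i] * ys[j] * (a_j - new_j)
            d_i, d_j = new_i - a_i, new_j - a_j
            b1 = b - e_i - ys[i] * d_i * K[i][i] - ys[j] * d_j * K[i][j]
            b2 = b - e_j - ys[i] * d_i * K[i][j] - ys[j] * d_j * K[j][j]
            alpha[i], alpha[j] = new_i, new_j
            if 0 < new_i < C:
                b = b1
            elif 0 < new_j < C:
                b = b2
            else:
                b = (b1 + b2) / 2.0
            changed += 1
        passes = passes + 1 if changed == 0 else 0

    support = [(alpha[k] * ys[k], xs[k]) for k in range(m) if alpha[k] > 1e-8]

    def decision(x):
        return sum(w * rbf(s, x, sigma) for w, s in support) + b

    return decision


def kfold_error(xs, ys, C, sigma, k=10, seed=0):
    """Pooled K-fold estimate of P(error): each sample is validated once."""
    idx = list(range(len(xs)))
    random.Random(seed).shuffle(idx)
    wrong = 0
    for fold in range(k):
        held = set(idx[fold::k])
        tr = [i for i in idx if i not in held]
        g = train_svm([xs[i] for i in tr], [ys[i] for i in tr], C, sigma)
        wrong += sum(1 for i in held if (1 if g(xs[i]) >= 0 else -1) != ys[i])
    return wrong / len(xs)


def select_hyperparams(xs, ys, Cs, sigmas, k=10):
    """Grid search returning (C, sigma, cv_error) with the lowest CV error."""
    best = None
    for C in Cs:
        for sigma in sigmas:
            err = kfold_error(xs, ys, C, sigma, k)
            if best is None or err < best[2]:
                best = (C, sigma, err)
    return best
```

After selection, the final classifier is `train_svm` run on all of the training data with the chosen (C, σ), and the test error is the fraction of test points where the sign of the decision value disagrees with the label. For the required plot, one option is to evaluate the decision function on a grid and draw its zero level set (in MATLAB, `contour(X1, X2, score, [0 0])`). When porting to `fitcsvm`, C corresponds to `'BoxConstraint'`; as we read the documentation, `'KernelScale'` s divides the predictors before applying exp(-||x - x'||^2), so s = √2·σ under the kernel convention assumed here.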
