PLEASE USE PYTHON SO I CAN FOLLOW ALONG THANK YOU VERY MUCH!

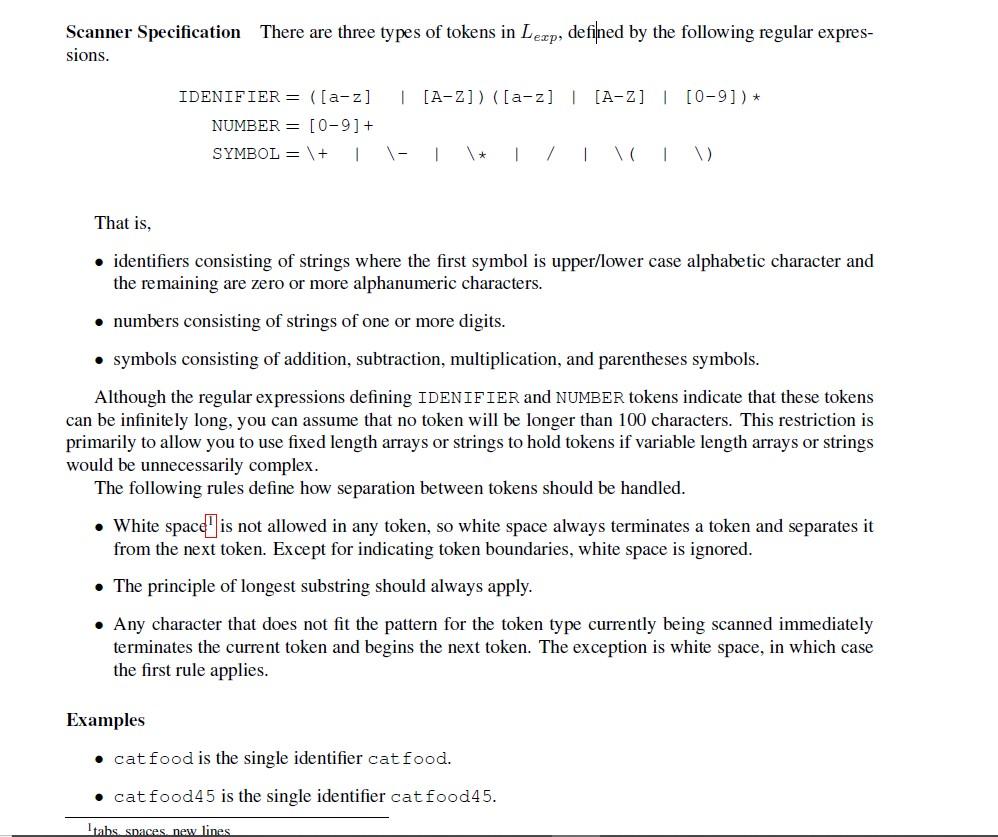

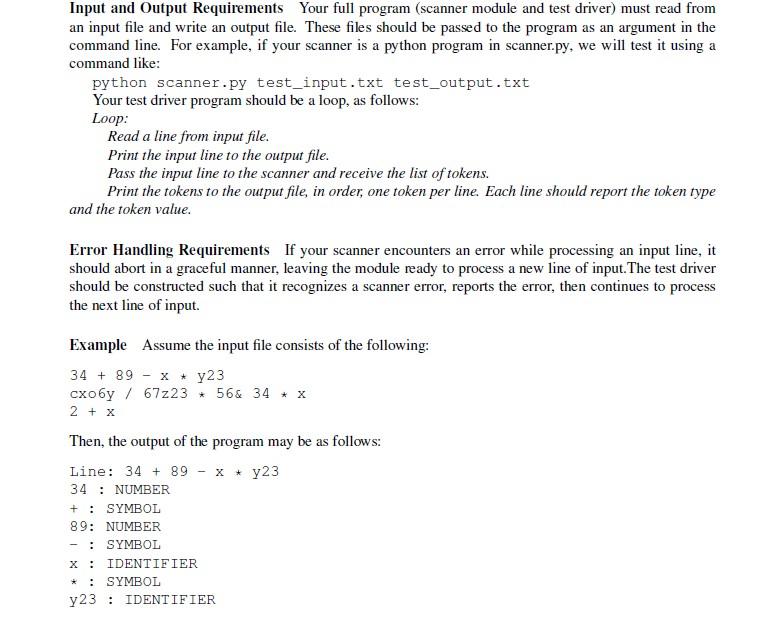

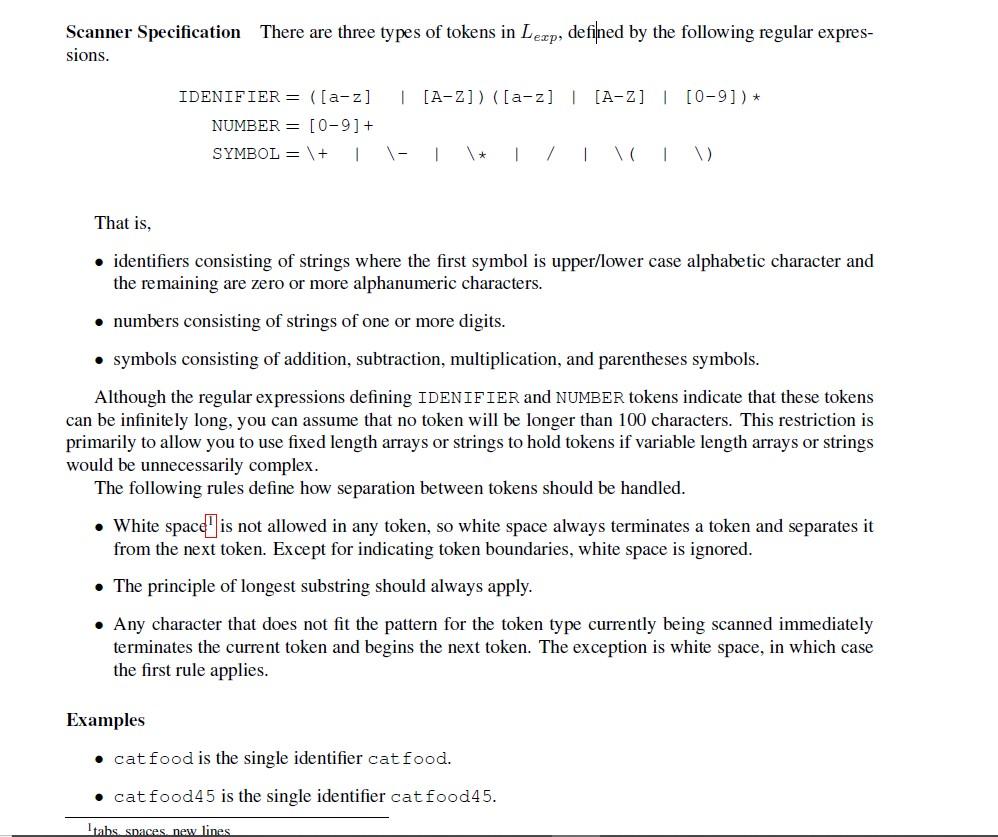

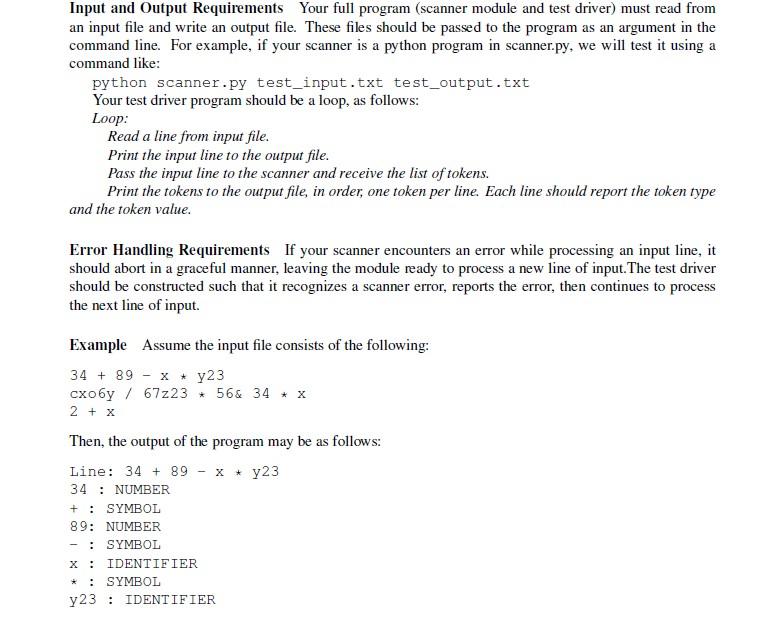

Scanner Specification There are three types of tokens in Lexp, defined by the following regular expres- sions. | [A-Z]) ([a-z] | [A-Z] | [0-9]) * IDENIFIER= ([a-z] NUMBER= [0-9]+ SYMBOL = + 1 That is, identifiers consisting of strings where the first symbol is upper/lower case alphabetic character and the remaining are zero or more alphanumeric characters. numbers consisting of strings of one or more digits. symbols consisting of addition, subtraction, multiplication, and parentheses symbols. Although the regular expressions defining IDENIFIER and NUMBER tokens indicate that these tokens can be infinitely long, you can assume that no token will be longer than 100 characters. This restriction is primarily to allow you to use fixed length arrays or strings to hold tokens if variable length arrays or strings would be unnecessarily complex. The following rules define how separation between tokens should be handled. White space" is not allowed in any token, so white space always terminates a token and separates it from the next token. Except for indicating token boundaries, white space is ignored. The principle of longest substring should always apply. Any character that does not fit the pattern for the token type currently being scanned immediately terminates the current token and begins the next token. The exception is white space, in which case the first rule applies. Examples catfood is the single identifier catfood. catfood45 is the single identifier catfood45. Itabs spaces new lines Input and Output Requirements Your full program (scanner module and test driver) must read from an input file and write an output file. These files should be passed to the program as an argument in the command line. For example, if your scanner is a python program in scanner.py, we will test it using a command like: python scanner.py test_input.txt test_output.txt Your test driver program should be a loop, as follows: Loop: Read a line from input file. Print the input line to the output file. Pass the input line to the scanner and receive the list of tokens. Print the tokens to the output file, in order, one token per line. Each line should report the token type and the token value. Error Handling Requirements If your scanner encounters an error while processing an input line, it should abort in a graceful manner, leaving the module ready to process a new line of input. The test driver should be constructed such that it recognizes a scanner error, reports the error, then continues to process the next line of input Example Assume the input file consists of the following: 34 + 89 - x + y23 cxo6y/ 67z23 * 56& 34 * x 2 + x Then, the output of the program may be as follows: Line: 34 + 89 - x + y23 34 : NUMBER SYMBOL 89: NUMBER SYMBOL IDENTIFIER SYMBOL y23 : IDENTIFIER : Scanner Specification There are three types of tokens in Lexp, defined by the following regular expres- sions. | [A-Z]) ([a-z] | [A-Z] | [0-9]) * IDENIFIER= ([a-z] NUMBER= [0-9]+ SYMBOL = + 1 That is, identifiers consisting of strings where the first symbol is upper/lower case alphabetic character and the remaining are zero or more alphanumeric characters. numbers consisting of strings of one or more digits. symbols consisting of addition, subtraction, multiplication, and parentheses symbols. Although the regular expressions defining IDENIFIER and NUMBER tokens indicate that these tokens can be infinitely long, you can assume that no token will be longer than 100 characters. This restriction is primarily to allow you to use fixed length arrays or strings to hold tokens if variable length arrays or strings would be unnecessarily complex. The following rules define how separation between tokens should be handled. White space" is not allowed in any token, so white space always terminates a token and separates it from the next token. Except for indicating token boundaries, white space is ignored. The principle of longest substring should always apply. Any character that does not fit the pattern for the token type currently being scanned immediately terminates the current token and begins the next token. The exception is white space, in which case the first rule applies. Examples catfood is the single identifier catfood. catfood45 is the single identifier catfood45. Itabs spaces new lines Input and Output Requirements Your full program (scanner module and test driver) must read from an input file and write an output file. These files should be passed to the program as an argument in the command line. For example, if your scanner is a python program in scanner.py, we will test it using a command like: python scanner.py test_input.txt test_output.txt Your test driver program should be a loop, as follows: Loop: Read a line from input file. Print the input line to the output file. Pass the input line to the scanner and receive the list of tokens. Print the tokens to the output file, in order, one token per line. Each line should report the token type and the token value. Error Handling Requirements If your scanner encounters an error while processing an input line, it should abort in a graceful manner, leaving the module ready to process a new line of input. The test driver should be constructed such that it recognizes a scanner error, reports the error, then continues to process the next line of input Example Assume the input file consists of the following: 34 + 89 - x + y23 cxo6y/ 67z23 * 56& 34 * x 2 + x Then, the output of the program may be as follows: Line: 34 + 89 - x + y23 34 : NUMBER SYMBOL 89: NUMBER SYMBOL IDENTIFIER SYMBOL y23 : IDENTIFIER