
Question



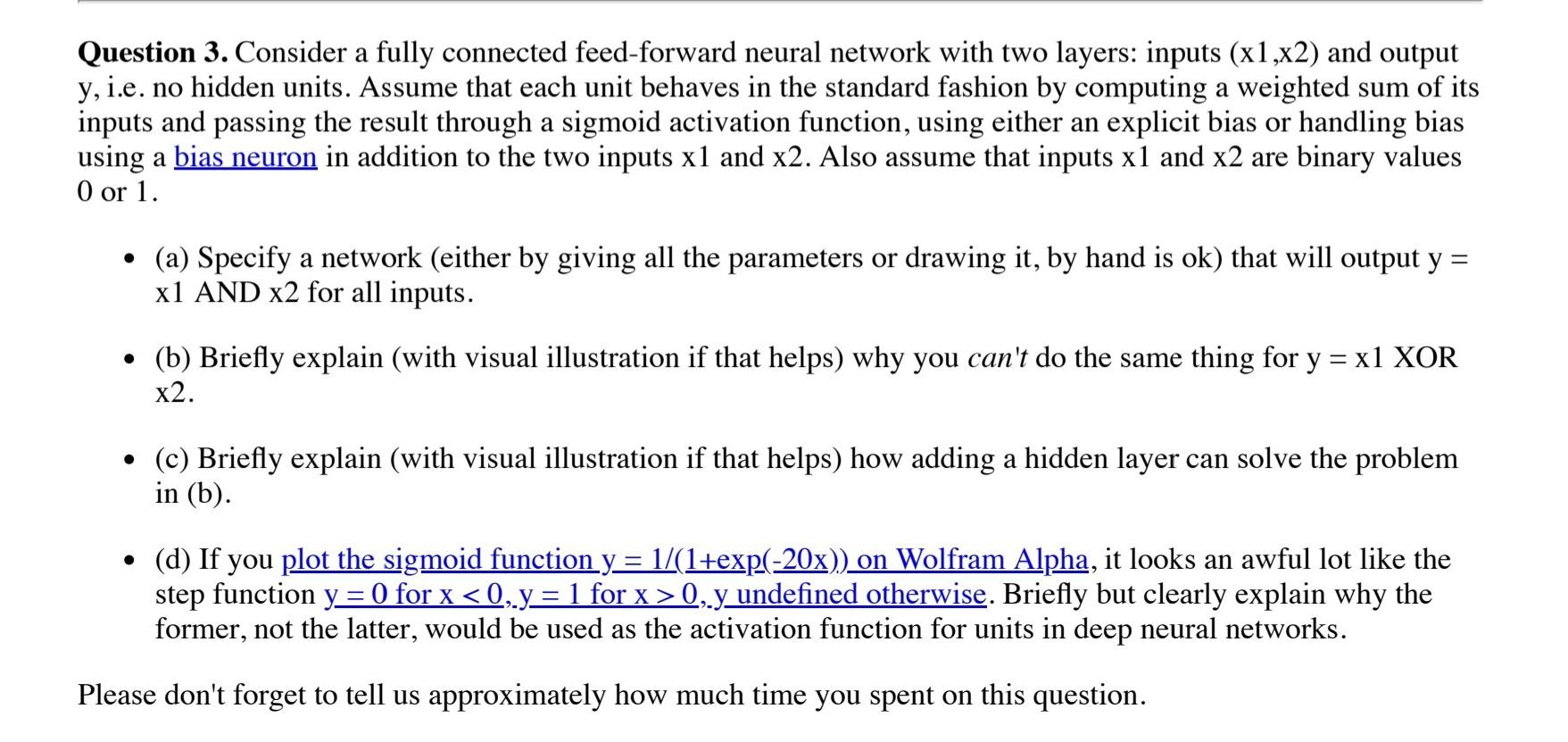

Question 3. Consider a fully connected feed-forward neural network with two layers: inputs (x1, x2) and output y, i.e. no hidden units. Assume that each unit behaves in the standard fashion: it computes a weighted sum of its inputs and passes the result through a sigmoid activation function, with the bias handled either explicitly or by a bias neuron in addition to the two inputs x1 and x2. Also assume that the inputs x1 and x2 are binary values, 0 or 1.

- (a) Specify a network (either by giving all the parameters or by drawing it; by hand is OK) that will output y = x1 AND x2 for all inputs.
- (b) Briefly explain (with a visual illustration if that helps) why you can't do the same thing for y = x1 XOR x2.
- (c) Briefly explain (with a visual illustration if that helps) how adding a hidden layer can solve the problem in (b).
- (d) If you plot the sigmoid function y = 1/(1 + exp(-20x)) on Wolfram Alpha, it looks an awful lot like the step function y = 0 for x < 0, y = 1 for x > 0, y undefined otherwise. Briefly but clearly explain why the former, not the latter, would be used as the activation function for units in deep neural networks.

Please don't forget to tell us approximately how much time you spent on this.
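The four parts can be explored numerically with a short Python sketch. This is a minimal illustration under stated assumptions, not an official answer: the function names (`sigmoid`, `unit`, `and_net`, `xor_net`, `single_unit_fits_xor`, `sigmoid_grad`, `step_grad`) are hypothetical, and the weights (±20 with biases of -30, -10 and 30) are one hand-picked choice among many. The AND unit outputs close to 1 only when 20*x1 + 20*x2 - 30 > 0, i.e. when both inputs are 1. XOR is built from a hidden OR unit and a hidden NAND unit feeding an AND output unit. The grid search only tries small integer weights, so it illustrates (b) rather than proving it. The gradient helpers contrast the steep sigmoid's nonzero slope, which gradient-based training (backpropagation) relies on, with the step function's slope of 0 (undefined at 0).

```python
import math


def sigmoid(z: float, k: float = 1.0) -> float:
    """Logistic sigmoid 1 / (1 + exp(-k*z)); a larger k gives a steeper curve."""
    return 1.0 / (1.0 + math.exp(-k * z))


def unit(x1: float, x2: float, w1: float, w2: float, b: float) -> float:
    """One sigmoid unit: weighted sum of two inputs plus an explicit bias b."""
    return sigmoid(w1 * x1 + w2 * x2 + b)


# (a) AND with a single unit: 20*x1 + 20*x2 - 30 is positive only when x1 = x2 = 1.
def and_net(x1: int, x2: int) -> float:
    return unit(x1, x2, 20.0, 20.0, -30.0)


# (c) XOR with one hidden layer: hidden OR and NAND units, combined by an AND output unit.
def xor_net(x1: int, x2: int) -> float:
    h_or = unit(x1, x2, 20.0, 20.0, -10.0)     # near 1 unless both inputs are 0
    h_nand = unit(x1, x2, -20.0, -20.0, 30.0)  # near 1 unless both inputs are 1
    return unit(h_or, h_nand, 20.0, 20.0, -30.0)


# (b) Illustration only: search a small integer grid for a single unit that matches XOR,
# reading an output above 0.5 as 1 (so sigmoid(0) = 0.5 counts as 0).
def single_unit_fits_xor(limit: int = 5) -> bool:
    rng = range(-limit, limit + 1)
    for w1 in rng:
        for w2 in rng:
            for b in rng:
                if all((unit(x1, x2, w1, w2, b) > 0.5) == bool(x1 ^ x2)
                       for x1 in (0, 1) for x2 in (0, 1)):
                    return True
    return False


# (d) Derivative of the steep sigmoid 1 / (1 + exp(-k*z)): k * s * (1 - s), nonzero everywhere.
def sigmoid_grad(z: float, k: float = 20.0) -> float:
    s = sigmoid(z, k)
    return k * s * (1.0 - s)


# Derivative of the hard step: 0 everywhere except z = 0, where it does not exist.
def step_grad(z: float) -> float:
    if z == 0:
        raise ValueError("the step function is not differentiable at 0")
    return 0.0


if __name__ == "__main__":
    # Truth tables: x1, x2, rounded AND output, rounded XOR output.
    for x1 in (0, 1):
        for x2 in (0, 1):
            print(x1, x2, round(and_net(x1, x2)), round(xor_net(x1, x2)))
    print("single unit fits XOR:", single_unit_fits_xor())
    # Slopes near 0: the sigmoid gives a training signal, the step gives none.
    for z in (-0.2, -0.05, 0.05, 0.2):
        print(z, sigmoid_grad(z), step_grad(z))
```

The grid search can only fail within the range it tries. The general argument for (b) is that a single unit would need b ≤ 0, w1 + b > 0, w2 + b > 0 and w1 + w2 + b ≤ 0; adding the middle two inequalities gives w1 + w2 + b > -b ≥ 0, which contradicts the last one. Geometrically, no single line separates the XOR-true points (0, 1) and (1, 0) from the XOR-false points (0, 0) and (1, 1).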
