Question

Students in 2400 often think that floating point number formats are as old as dirt and about as interesting. However, the last five years have seen an explosion of new floating point formats, mainly because of deep learning (https://en.wikipedia.org/wiki/Deep_learning), also known as neural networks. This is the technology behind everything from self-driving cars to automatically identifying cat pictures.

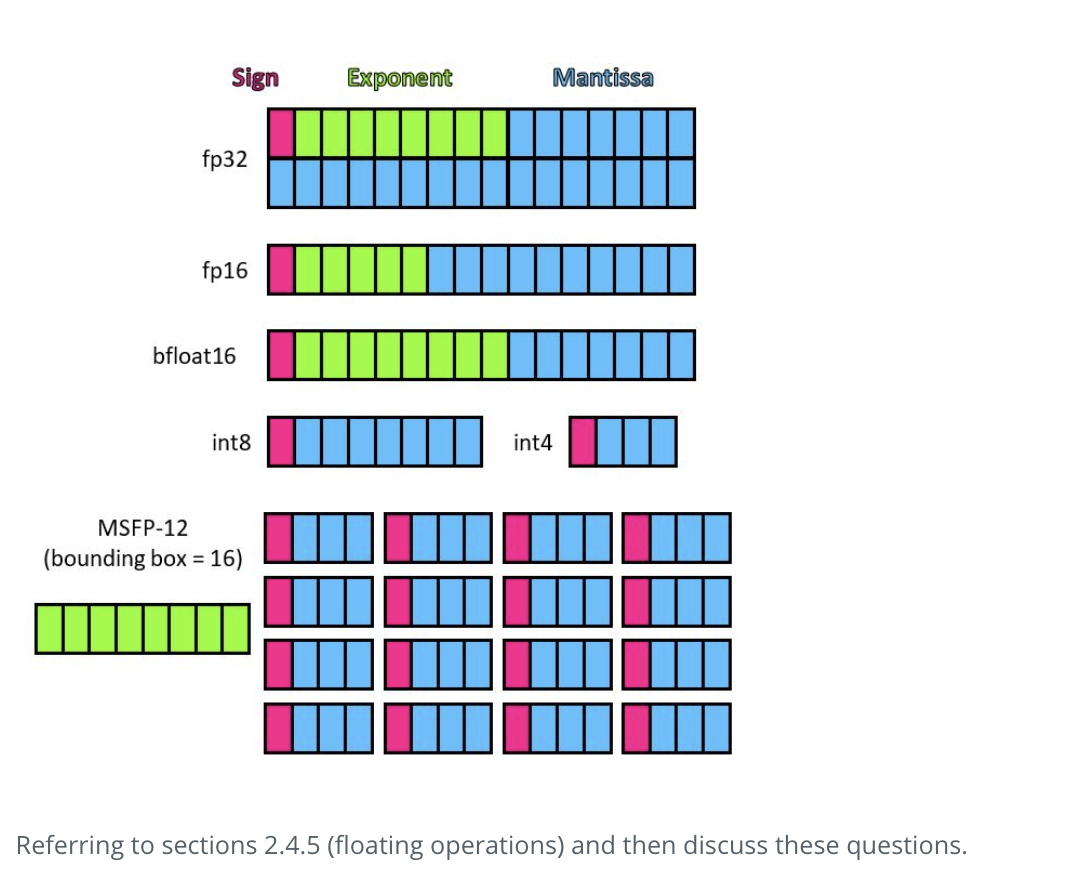

In 2017, Google developed Brain Float16 (bfloat16), a 16-bit floating point format that has the same 8-bit exponent as the 32-bit C float, to speed up deep learning. Google found that deep learning needed to sum lots of values that are close to zero (e.g. 0.00001 + 0.00001) and needed a wide dynamic range (roughly 1e-38 to 1e+38), but did not need much precision (e.g. 0.123 rather than 0.1234567 was fine).
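To make the layout concrete, here is a minimal Python sketch of how a bfloat16 bit pattern relates to a 32-bit float. It assumes the simplest possible conversion, truncation of the low 16 bits; real hardware and libraries may round to nearest instead. The function names are illustrative and not taken from any library.

```python
import struct

def float_to_bits(x: float) -> int:
    """Return the 32-bit IEEE 754 single-precision bit pattern of x."""
    return struct.unpack("<I", struct.pack("<f", x))[0]

def bits_to_float(bits: int) -> float:
    """Interpret a 32-bit pattern as an IEEE 754 single-precision value."""
    return struct.unpack("<f", struct.pack("<I", bits))[0]

def to_bfloat16_bits(x: float) -> int:
    """Keep the sign bit, the 8 exponent bits and the top 7 mantissa bits.

    Assumption: plain truncation (drop the low 16 bits), not rounding.
    """
    return float_to_bits(x) >> 16

def from_bfloat16_bits(b: int) -> float:
    """Widen a bfloat16 pattern back to a float by padding with 16 zero bits."""
    return bits_to_float((b & 0xFFFF) << 16)

def bf16_round_trip(x: float) -> float:
    """Value of x after a float -> bfloat16 -> float round trip."""
    return from_bfloat16_bits(to_bfloat16_bits(x))

if __name__ == "__main__":
    for x in (1.0, 0.1234567, 1e-30, 1e30):
        print(x, hex(to_bfloat16_bits(x)), bf16_round_trip(x))
```

Because the exponent field is unchanged, very small and very large magnitudes survive the round trip; only the low-order mantissa digits are lost.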

In 2020, Microsoft introduced MSFP, a 12-bit floating point format that shares one exponent across multiple values. The figure above, from the MSFP link, shows the sign bit (red), exponent (green) and mantissa (blue) of the 32-bit float, an older 16-bit format NVIDIA used for video games (fp16), bfloat16, and then the MSFP-12 float.
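The idea of a shared exponent can be sketched as generic block floating point. The following Python sketch is not the actual MSFP-12 encoding: the 3-bit mantissa width, the rounding rule and the function names are all assumptions chosen for illustration.

```python
import math

def block_quantize(values, mantissa_bits=3):
    """Quantize a block to one shared exponent plus small signed integer mantissas.

    Hypothetical scheme: the exponent is taken from the largest magnitude
    in the block, and every value is rounded to a multiple of
    2**(shared_exp - mantissa_bits).
    """
    largest = max(abs(v) for v in values)
    if largest == 0.0:
        return 0, [0] * len(values)
    # frexp returns (m, e) with largest == m * 2**e and 0.5 <= m < 1
    _, shared_exp = math.frexp(largest)
    scale = 2.0 ** (shared_exp - mantissa_bits)
    limit = (1 << mantissa_bits) - 1  # largest mantissa magnitude that fits
    mantissas = [max(-limit, min(limit, round(v / scale))) for v in values]
    return shared_exp, mantissas

def block_sum(shared_exp, mantissas, mantissa_bits=3):
    """Sum a block with integer adds, then apply the shared exponent once."""
    return sum(mantissas) * 2.0 ** (shared_exp - mantissa_bits)

if __name__ == "__main__":
    exp, mants = block_quantize([0.5, 0.25, -0.125, 0.375])
    print(exp, mants, block_sum(exp, mants))
```

In this sketch, all values in a block already use the same exponent, so the mantissas can be added as plain integers.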

Refer to section 2.4.5 (floating point operations) and then discuss these questions.

- Google built special hardware for deep learning (the TPU) very quickly, but software developers had limited access. What similarity between bfloat16 and float would make it easier for developers to use their existing CPUs to develop code for the TPU? How would those developers emulate bfloat16?

- Assuming that summing up many numbers close to zero is important, what is the advantage of MSFP-12? Think of the steps needed to add two floating point numbers (align exponents, then sum, then adjust the exponent).

- Section 2.4.5 points out that FP operations aren't associative; in other words, a + (b + c) may not be the same as (a + b) + c. Given 2.4.5, do you think programmers would need to have more, less or the same awareness of their algorithms given the bfloat16 or MSFP-12 representations? Why?
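The non-associativity that section 2.4.5 describes is easy to reproduce. The Python sketch below shows it first with ordinary 64-bit floats and then with an emulated bfloat16. The emulation assumes truncation to the top 16 bits of a 32-bit float after every operation, and `to_bf16` and `bf16_add` are illustrative helper names, not library functions.

```python
import struct

def to_bf16(x: float) -> float:
    """Emulate bfloat16 by zeroing the low 16 bits of the float32 pattern.

    Assumption: truncation rather than round-to-nearest.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    return struct.unpack("<f", struct.pack("<I", bits & 0xFFFF0000))[0]

def bf16_add(a: float, b: float) -> float:
    """Add two values, truncating the operands and the result to bfloat16."""
    return to_bf16(to_bf16(a) + to_bf16(b))

# 64-bit floats: the grouping changes the result.
double_left = (1e16 + -1e16) + 1.0   # the large terms cancel first
double_right = 1e16 + (-1e16 + 1.0)  # the 1.0 is absorbed before cancelling

# Emulated bfloat16: at 256 the spacing between values is 2,
# so adding 1 at a time is lost, but adding 2 is not.
bf16_left = bf16_add(bf16_add(256.0, 1.0), 1.0)
bf16_right = bf16_add(256.0, bf16_add(1.0, 1.0))

if __name__ == "__main__":
    print(double_left, double_right)  # 1.0 0.0
    print(bf16_left, bf16_right)      # 256.0 258.0
```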

Figure legend: sign, exponent, mantissa. Formats shown: fp32, fp16, bfloat16, int8, int4, MSFP-12 (bounding box = 16).
